Operational Considerations

A Bacula administrator must be aware that a verification against the source files uses resources on the client system: files are searched for using essentially the same process as during a backup, and when checksumming is done (that is, when Signature is set in the File Set options), these files are also read from disk in full to generate the checksum, which uses considerable CPU power.

The network interaction required between the Director (DIR) and the File Daemon (FD) is usually not critical.

The catalog database is required to perform all the configured checks. The queries it has to process for verify jobs are usually no more demanding than those for backup and restore jobs.

For the reasons outlined, Bacula Systems recommends running DiskToCatalog verifications outside the business hours of the backed-up machine (if possible, run them during the backup window), and running them only for jobs where the additional effort is really required. It also may not be necessary to verify every single job; in many cases, verifying only the (monthly) full backups or jobs that are going to be used for long-term archival may be sufficient.

If you want to ensure that both data integrity verifications (disk to catalog and volume to catalog) run close to each other in time, several approaches can be used.

The one that integrates most easily with normal Bacula operations is to schedule those jobs, possibly for the same time as the backup job, but with a lower priority setting.
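As an illustration of the scheduling approach, a verify job definition in the Director configuration might look roughly like the fragment below. This is a sketch under stated assumptions, not a tested configuration: all resource names (job, client, file set, schedule, pool, storage) are hypothetical placeholders, and the schedule is assumed to be the one the backup job already uses.

```
# Hypothetical names throughout; adapt to the existing configuration.
Job {
  Name = "VerifyDisk-client1"
  Type = Verify
  Level = DiskToCatalog
  Verify Job = "Backup-client1"   # the backup job whose catalog entries are checked
  Client = client1-fd
  FileSet = "Client1 Files"
  Schedule = "NightlyCycle"       # assumed: same schedule as the backup job
  Priority = 20                   # higher number = lower priority (default is 10)
  Pool = Default
  Storage = File1
  Messages = Standard
}
```

A second job with Level = VolumeToCatalog would be defined in the same way. Because a higher Priority number means a lower priority, such jobs should normally wait until the backup job with the default priority has finished.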

Alternatively, it would seem possible to explicitly link the jobs using the Run directive in the backup job. This, unfortunately, will not give the desired result, as jobs started like this are run before the original job and would therefore verify against an older backup. The next idea would be to use Run Scripts with Console commands to start the desired jobs. Again, this does not work, as the run command is not allowed in Run Script sub-resources.

The most reasonable approach is therefore a simple shell script that Bacula calls after the backup job finishes and that starts the two verify jobs.

Adding a Run Script to the Backup Job

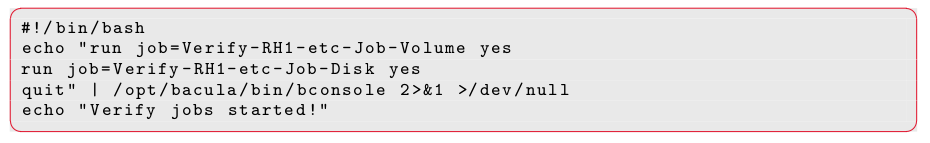

Script to Execute Jobs through bconsole

In the DIR's configuration, you would change the job configuration to the one shown in the figure "Adding a Run Script to the Backup Job", and the referenced script could be as simple as the one shown in the figure "Script to Execute Jobs through bconsole".
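For readers who cannot see the figures, the following is a minimal sketch of what such a script could look like. It is not the script from the figure: the script path, the job names in the comments, and the `BCONSOLE` variable are assumptions made for illustration. It relies only on bconsole reading console commands from standard input and on the `run job=... yes` console command.

```shell
#!/bin/sh
# Sketch: queue the verify jobs named on the command line through bconsole.
# Hypothetical call from a Run Script in the backup job (path and job
# names are placeholders):
#   Command = "/opt/bacula/scripts/start-verify.sh VerifyDisk-client1 VerifyVolume-client1"

# Console program to use; assumed to be on PATH unless BCONSOLE is set.
BCONSOLE="${BCONSOLE:-bconsole}"

# Print one "run" console command per job name given as an argument.
# The trailing "yes" skips the interactive confirmation prompt.
build_run_commands() {
    for job in "$@"; do
        printf 'run job="%s" yes\n' "$job"
    done
    printf 'quit\n'
}

# Feed the generated commands to bconsole on standard input.
start_verify_jobs() {
    build_run_commands "$@" | "$BCONSOLE"
}

# Only contact the Director when job names were actually supplied.
if [ "$#" -gt 0 ]; then
    start_verify_jobs "$@"
fi
```

Passing the verify job names as arguments keeps the script reusable for several backup jobs. bconsole only queues the jobs and returns, so the script does not wait for the verifications to complete.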

If using Bacula’s integrated scheduler is not desired, the script mentioned above could also be used to trigger the needed jobs through cron or any other job scheduler.

The solution we now have verifies both “ends” of a backup job shortly after the original backup was run. Delays may happen when other jobs block the resources needed by the Verify Jobs, or when job execution order is controlled through priorities to ensure that all backups run as quickly as possible, making best use of the available backup window.

We have seen that the Verify Jobs we set up detect changes both on the backup media (ensuring volume integrity) and on the original file system (ensuring data integrity). This sounds like we’ve already implemented some Tripwire-like functionality [8] to detect tampering of critical files. Why this is not the case, and how to better implement that functionality, is discussed in the next chapter.

[8] Tripwire is a well-known data integrity tool available as both open-source and commercial software. See www.tripwire.org for more information.
