Features in Bacula Community

This chapter presents the new features that have been added in previous versions of Bacula Community.

New Features in 11.0.0

Catalog Performance Improvements

There is a new Bacula database format (schema) in this version of Bacula that eliminates the Filename table by storing the file name directly in the File record of the File table. This substantially improves performance, particularly for large databases.

The update_xxx_catalog script will automatically update the Bacula database format, but be aware that for very large databases (greater than 50GB), the migration may take some time and will double the size of the database on disk while it runs.

This database format change can provide very significant improvements in the speed of metadata insertion into the database, and in some cases (backup of large email servers) can significantly reduce the size of the database.
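To show where the saving comes from, the following Python sketch uses sqlite3 with heavily simplified, hypothetical versions of the tables (the real Bacula tables have more columns and indexes). With the old layout, every file lookup needs an extra join on the separate Filename table (and every insert a lookup or insert there); with the new layout the name is read directly from the File row.

```python
import sqlite3

# Hypothetical, heavily simplified tables: only the columns needed to show
# how the file name is stored. The real Bacula tables have more columns.
OLD_SCHEMA = """
CREATE TABLE Path     (PathId INTEGER PRIMARY KEY, Path TEXT);
CREATE TABLE Filename (FilenameId INTEGER PRIMARY KEY, Name TEXT);
CREATE TABLE File     (FileId INTEGER PRIMARY KEY, JobId INTEGER,
                       PathId INTEGER, FilenameId INTEGER);
"""

NEW_SCHEMA = """
CREATE TABLE Path (PathId INTEGER PRIMARY KEY, Path TEXT);
CREATE TABLE File (FileId INTEGER PRIMARY KEY, JobId INTEGER,
                   PathId INTEGER, Filename TEXT);
"""


def files_old(db, jobid):
    # Old layout: an extra join on Filename is needed for every file.
    return db.execute(
        "SELECT Path.Path || Filename.Name FROM File"
        " JOIN Path USING (PathId) JOIN Filename USING (FilenameId)"
        " WHERE JobId = ? ORDER BY FileId", (jobid,)).fetchall()


def files_new(db, jobid):
    # New layout: the name is read directly from the File row.
    return db.execute(
        "SELECT Path.Path || File.Filename FROM File"
        " JOIN Path USING (PathId)"
        " WHERE JobId = ? ORDER BY FileId", (jobid,)).fetchall()


old_db = sqlite3.connect(":memory:")
old_db.executescript(OLD_SCHEMA)
old_db.execute("INSERT INTO Path VALUES (1, '/etc/')")
old_db.execute("INSERT INTO Filename VALUES (1, 'hosts')")
old_db.execute("INSERT INTO File VALUES (1, 10, 1, 1)")

new_db = sqlite3.connect(":memory:")
new_db.executescript(NEW_SCHEMA)
new_db.execute("INSERT INTO Path VALUES (1, '/etc/')")
new_db.execute("INSERT INTO File VALUES (1, 10, 1, 'hosts')")

print(files_old(old_db, 10), files_new(new_db, 10))
```

Both queries return the same path; the new one simply touches one table fewer.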

Automatic TLS Encryption

Starting with Bacula 11.0.6, all daemons and consoles use TLS automatically for all network communications. It is no longer necessary to set up TLS keys in advance. Automatic TLS PSK encryption can be turned off with the TLS PSK Enable directive.

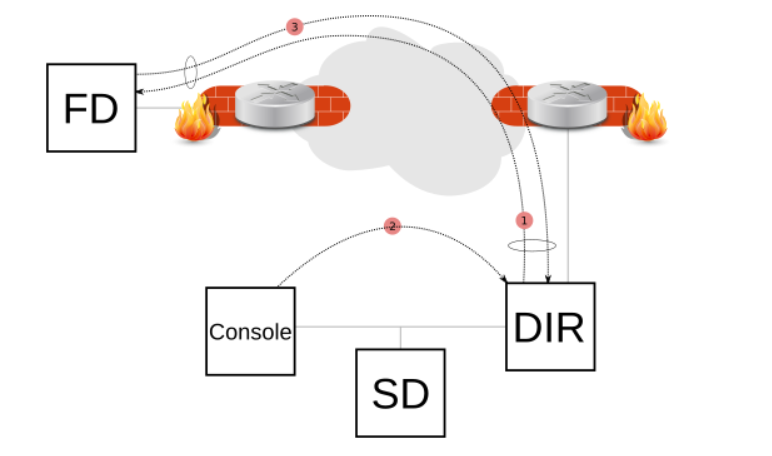

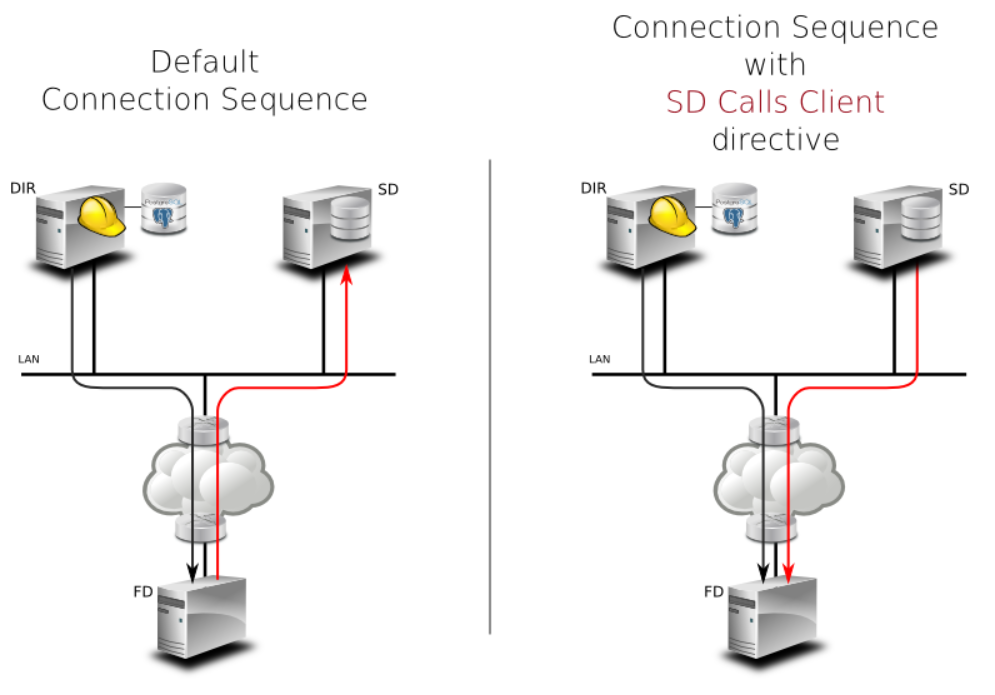

Client Behind NAT Support with the Connect To Director Directive

A Client can now initiate a connection to the Director, either permanently or on a schedule, so that the Director can communicate with the Client when a new Job is started or when a bconsole command such as status client or estimate is issued.

This new network configuration option is particularly useful for Clients that are not directly reachable by the Director.

# cat /opt/bacula/etc/bacula-fd.conf
Director {
  Name = bac-dir
  Password = aigh3wu7oothieb4geeph3noo   # Password used to connect
  # New directives
  Address = bac-dir.mycompany.com        # Director address to connect
  Connect To Director = yes              # FD will call the Director
}

# cat /opt/bacula/etc/bacula-dir.conf
Client {
  Name = bac-fd
  Password = aigh3wu7oothieb4geeph3noo
  # New directive
  Allow FD Connections = yes
}

It is possible to schedule the Client connection at certain periods of the day:

# cat /opt/bacula/etc/bacula-fd.conf
Director {
  Name = bac-dir
  Password = aigh3wu7oothieb4geeph3noo   # Password used to connect
  # New directives
  Address = bac-dir.mycompany.com        # Director address to connect
  Connect To Director = yes              # FD will call the Director
  Schedule = WorkingHours
}

Schedule {
  Name = WorkingHours
  # Connect the Director between 12:00 and 14:00
  Connect = MaxConnectTime=2h on mon-fri at 12:00
}

Note that in the current version, if the File Daemon is started after 12:00, the next connection to the Director will occur at 12:00 the next day.

A Job can be scheduled in the Director around 12:00, and if the Client is connected, the Job will be executed as if the Client were reachable from the Director.
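To make the scheduling note concrete, here is a small Python sketch that computes the next connection window for the WorkingHours schedule. It models only "mon-fri at 12:00" with MaxConnectTime=2h and illustrates the behavior described above; it is not Bacula's scheduler code.

```python
from datetime import datetime, timedelta

# Models only "Connect = MaxConnectTime=2h on mon-fri at 12:00".
CONNECT_HOUR = 12
MAX_CONNECT_TIME = timedelta(hours=2)
CONNECT_DAYS = {0, 1, 2, 3, 4}  # Monday=0 ... Friday=4


def next_connection(started_at):
    """Return (start, end) of the first connection window that begins
    strictly after started_at, so an FD started at 12:30 waits until
    12:00 on the next scheduled day."""
    start = started_at.replace(hour=CONNECT_HOUR, minute=0,
                               second=0, microsecond=0)
    if start <= started_at:
        start += timedelta(days=1)
    while start.weekday() not in CONNECT_DAYS:
        start += timedelta(days=1)
    return start, start + MAX_CONNECT_TIME


# An FD started on Friday 5 March 2021 at 12:30 next connects on Monday.
print(next_connection(datetime(2021, 3, 5, 12, 30)))
```

A Job scheduled in the Director inside the returned window would find the Client connected.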

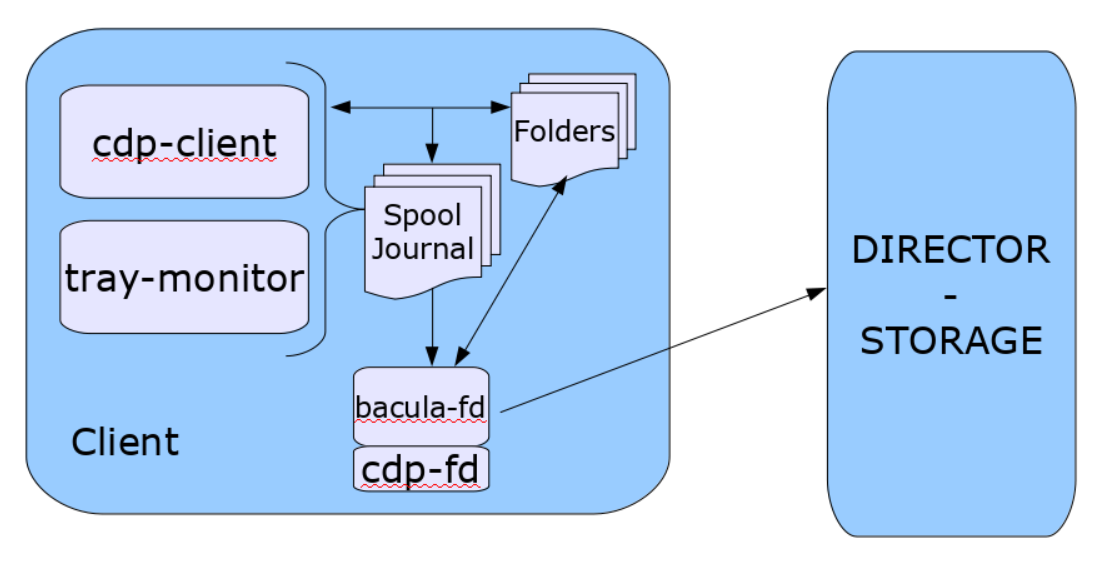

Continuous Data Protection Plugin

Continuous Data Protection (CDP), also called continuous backup or real-time backup, refers to backup of Client data by automatically saving a copy of every change made to that data, essentially capturing every version of the data that the user saves. It allows the user or administrator to restore data to any point in time.

The Bacula CDP feature is composed of two components: an application (cdp-client or tray-monitor) that monitors a set of directories configured by the user, and a Bacula FileDaemon plugin responsible for securing the data using the Bacula infrastructure.

The user application (cdp-client or tray-monitor) is responsible for monitoring files and directories. When a modification is detected, the new data is copied into a spool directory. At regular intervals, a Bacula backup job contacts the FileDaemon and saves all the files archived by the cdp-client. The locally copied data can be restored at any time without a network connection to the Director.
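The monitor-and-spool idea can be sketched in Python as below. This is a hypothetical polling scan, not the cdp-client implementation (which may rely on filesystem notifications rather than polling); each changed file is copied into the spool directory under a new name so that every saved version is kept.

```python
import os
import shutil
import tempfile


def scan_and_spool(watched_dir, spool_dir, seen):
    """Copy every file whose modification time changed since the previous
    scan into spool_dir. `seen` maps path -> last mtime (ns) and is updated
    in place. Returns the list of spooled copies."""
    spooled = []
    for root, _dirs, files in os.walk(watched_dir):
        for name in files:
            path = os.path.join(root, name)
            mtime = os.stat(path).st_mtime_ns
            if seen.get(path) == mtime:
                continue  # unchanged since the last scan
            seen[path] = mtime
            # Suffix each copy with its mtime so every version is kept.
            rel = os.path.relpath(path, watched_dir)
            dest = os.path.join(spool_dir, f"{rel}.{mtime}")
            os.makedirs(os.path.dirname(dest), exist_ok=True)
            shutil.copy2(path, dest)
            spooled.append(dest)
    return spooled


# Demo: save two versions of one file.
watched, spool, seen = tempfile.mkdtemp(), tempfile.mkdtemp(), {}
doc = os.path.join(watched, "report.txt")
for version, mtime_ns in ((b"v1", 1_000_000_000), (b"v2", 2_000_000_000)):
    with open(doc, "wb") as f:
        f.write(version)
    os.utime(doc, ns=(mtime_ns, mtime_ns))  # force distinct mtimes
    scan_and_spool(watched, spool, seen)
print(sorted(os.listdir(spool)))
```

A periodic backup job would then pick up the spooled copies, which is the role the Bacula plugin plays in the real design.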

See the CDP (Continuous Data Protection) chapter for more information.

Global Autoprune Control Directive

The Director Autoprune directive can now globally control the Autoprune feature. This directive will take precedence over Pool or Client Autoprune directives.

Director {
  Name = mydir-dir
  ...
  AutoPrune = no   # switch off Autoprune globally
}

Event and Auditing

The Director daemon can now record events such as:

Console connection/disconnection

Daemon startup/shutdown

Command execution

…

The events may be stored in a new catalog table, to disk, or sent via syslog.

Messages {
  Name = Standard
  catalog = all, events
  append = /opt/bacula/working/bacula.log = all, !skipped
  append = /opt/bacula/working/audit.log = events, !events.bweb
}

Messages {
  Name = Daemon
  catalog = all, events
  append = /opt/bacula/working/bacula.log = all, !skipped
  append = /opt/bacula/working/audit.log = events, !events.bweb
  append = /opt/bacula/working/bweb.log = events.bweb
}

The new message category “events” is not included in the default configuration files.

Individual event types can be filtered out with the “!events.” prefix followed by the type name, as in “!events.bweb” above. Up to 10 custom event types can be specified per Messages resource.

All event types are recorded by default.

When stored in the catalog, the events can be listed with the “list events” command.

* list events [type=<str> | limit=<int> | order=<asc|desc> | days=<int> |
               start=<time-specification> | end=<time-specification>]
+---------------------+------------+-----------+--------------------------------+
| time                | type       | source    | event                          |
+---------------------+------------+-----------+--------------------------------+
| 2020-04-24 17:04:07 | daemon     | *Daemon*  | Director startup               |
| 2020-04-24 17:04:12 | connection | *Console* | Connection from 127.0.0.1:8101 |
| 2020-04-24 17:04:20 | command    | *Console* | purge jobid=1                  |
+---------------------+------------+-----------+--------------------------------+
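If you post-process list events output in a script, a minimal Python parser for the ASCII table format shown above could look like this. It is a sketch that assumes cells never contain a | character.

```python
SAMPLE = """\
+---------------------+------------+-----------+--------------------------------+
| time                | type       | source    | event                          |
+---------------------+------------+-----------+--------------------------------+
| 2020-04-24 17:04:07 | daemon     | *Daemon*  | Director startup               |
| 2020-04-24 17:04:12 | connection | *Console* | Connection from 127.0.0.1:8101 |
| 2020-04-24 17:04:20 | command    | *Console* | purge jobid=1                  |
+---------------------+------------+-----------+--------------------------------+
"""


def split_row(line):
    """Split one '| a | b |' row into stripped cell values."""
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def parse_table(text):
    """Turn a bconsole ASCII table into a list of dicts keyed by the
    header row. Border lines (starting with '+') are skipped."""
    rows = [line for line in text.splitlines() if line.lstrip().startswith("|")]
    if not rows:
        return []
    header = split_row(rows[0])
    return [dict(zip(header, split_row(row))) for row in rows[1:]]


# Print only the commands that were executed.
for event in parse_table(SAMPLE):
    if event["type"] == "command":
        print(event["time"], event["event"])
```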

The .events command can be used to record an external event. The event source is recorded as given by the source argument, and the event type can be a custom name.

* .events type=baculum source=joe text="User login"

New Prune Command Option

The prune jobs all command will query the catalog to find all combinations of Client/Pool, and will run the pruning algorithm on each of them. At the end, all files and jobs not needed for restore that have passed the relevant retention times will be pruned.

The command prune jobs all yes can be run from a RunScript, for example to prune the catalog once per day. All Clients and Pools will be analyzed automatically.

Job {
  ...
  RunScript {
    Console = "prune jobs all yes"
    RunsWhen = Before
    failjobonerror = no
    runsonclient = no
  }
}

Dynamic Client Address Directive

It is now possible to use a script to determine the address of a Client when dynamic DNS is not a viable solution:

Client {
  Name = my-fd
  ...
  Address = "|/opt/bacula/bin/compute-ip my-fd"
}

The command used to generate the address should print a single line containing a valid address and exit with code 0. An example would be:

Address = "|echo 127.0.0.1"

This option might be useful in some complex cluster environments.
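A possible shape for the compute-ip helper referenced above is sketched below in Python. The mapping file path and its "name address" line format are hypothetical; the only requirements taken from the text are printing a single line with a valid address and exiting with code 0. Here a non-zero exit code signals that no address could be determined, and only IP addresses (not host names) are accepted.

```python
import ipaddress
import sys

# Hypothetical file with one "client-name address" pair per line.
MAPPING_FILE = "/opt/bacula/etc/client-addresses.txt"


def lookup(client, lines):
    """Return the address recorded for client, or None if absent.
    Raises ValueError if the recorded value is not a valid IP address."""
    for line in lines:
        fields = line.split()
        if len(fields) == 2 and fields[0] == client:
            return str(ipaddress.ip_address(fields[1]))
    return None


def main(argv):
    if len(argv) != 2:
        print("usage: compute-ip <client-name>", file=sys.stderr)
        return 2
    try:
        with open(MAPPING_FILE) as f:
            address = lookup(argv[1], f)
    except (OSError, ValueError) as err:
        print(f"compute-ip: {err}", file=sys.stderr)
        return 1
    if address is None:
        print(f"compute-ip: no address for {argv[1]}", file=sys.stderr)
        return 1
    print(address)  # exactly one line on stdout, then exit code 0
    return 0
```

To use it as the Address command, save it as an executable script (for example starting with #!/usr/bin/env python3) and end it with sys.exit(main(sys.argv)), so that the exit code is passed back to the Director.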

Volume Retention Enhancements

The Pool/Volume parameter Volume Retention can now be disabled (by setting it to 0) so that a volume is never pruned based on its Volume Retention time. When Volume Retention is disabled, only the Job Retention time is used to prune jobs.

Pool {
  Volume Retention = 0
  ...
}

Windows Enhancements

Windows files with non-UTF16 names are now supported.

Snapshot management has been improved, and a backup job now relies exclusively on the snapshot tree structure.

Support for backing up the system.cifs_acl extended attribute with Linux CIFS has been added. It can be used to back up Windows security attributes from a CIFS share mounted on a Linux system. Note that only recent Linux kernels handle the system.cifs_acl feature correctly. The FileSet must use the XATTR Support=yes option, and the CIFS share must be mounted with the cifsacl option. See mount.cifs(8) for more information.

GPFS ACL Support

The new Bacula FileDaemon supports GPFS filesystem-specific ACLs. The GPFS libraries must be installed in the standard location. To check whether GPFS support is available on your system, use the following commands:

*setdebug level=1 client=stretch-amd64-fd
Connecting to Client stretch-amd64-fd at stretch-amd64:9102
2000 OK setdebug=1 trace=0 hangup=0 blowup=0 options= tags=
*st client=stretch-amd64-fd
Connecting to Client stretch-amd64-fd at stretch-amd64:9102
stretch-amd64-fd Version: 11.0.0 (01 Dec 2020) x86_64-pc-linux-gnu-bacula-enterprise debian 9.11
Daemon started 21-Jul-20 14:42. Jobs: run=0 running=0.
Ulimits: nofile=1024 memlock=65536 status=ok
Heap: heap=135,168 smbytes=199,993 max_bytes=200,010 bufs=104 max_bufs=105
Sizes: boffset_t=8 size_t=8 debug=1 trace=0 mode=0,2010 bwlimit=0kB/s
Crypto: fips=no crypto=OpenSSL 1.0.2u 20 Dec 2019
APIs: GPFS
Plugin: bpipe-fd.so(2)

The APIs line indicates whether /usr/lpp/mmfs/libgpfs.so was loaded when the Bacula FD service started.
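When checking many Clients, the APIs line can be tested from a script. The Python sketch below extracts the names listed after "APIs:" from captured status client output; treating several entries as space- or comma-separated is an assumption, since the example above shows a single entry.

```python
STATUS = """\
stretch-amd64-fd Version: 11.0.0 (01 Dec 2020) x86_64-pc-linux-gnu-bacula-enterprise debian 9.11
Daemon started 21-Jul-20 14:42. Jobs: run=0 running=0.
Crypto: fips=no crypto=OpenSSL 1.0.2u 20 Dec 2019
APIs: GPFS
Plugin: bpipe-fd.so(2)
"""


def loaded_apis(status_text):
    """Return the set of names listed on the 'APIs:' line of
    'status client' output, or an empty set if there is no such line."""
    for line in status_text.splitlines():
        line = line.strip()
        if line.startswith("APIs:"):
            # Assumption: several APIs would be separated by spaces or commas.
            return set(line[len("APIs:"):].replace(",", " ").split())
    return set()


print("GPFS ACL support loaded:", "GPFS" in loaded_apis(STATUS))
```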

The standard ACL Support directive can be used to automatically enable backup of GPFS ACLs.

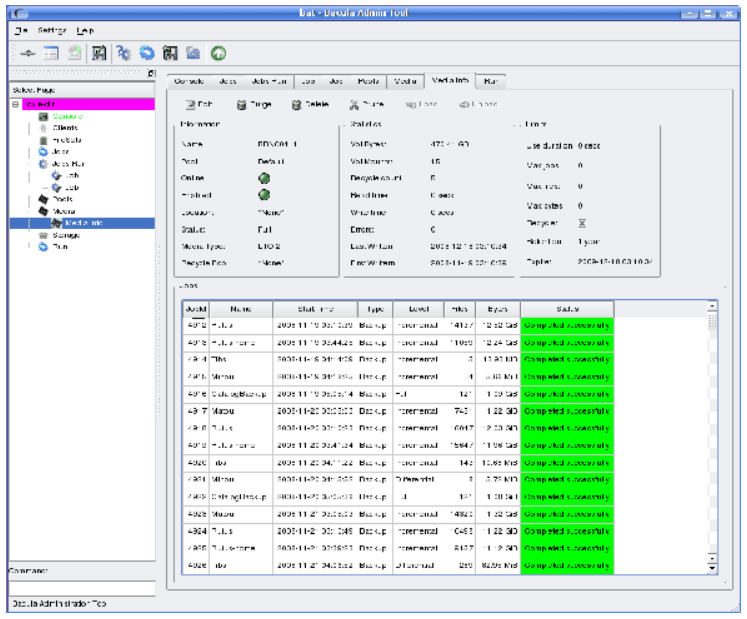

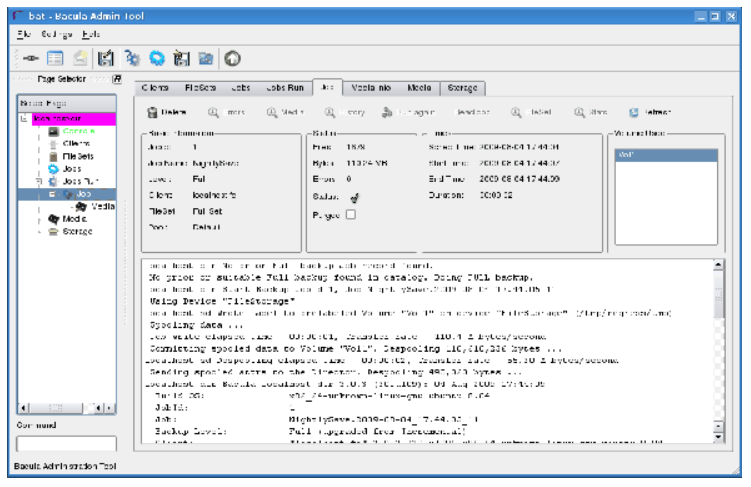

New Baculum Features

Multi-user interface improvements

New functions and improvements have been added to the multi-user interface and to restricted access.

The Security page has new tabs:

Console ACLs

OAuth2 clients

API hosts

These new tabs help configure OAuth2 accounts, create restricted Bacula Consoles for users, and create API hosts. They simplify creating users with restricted access to Bacula resources.

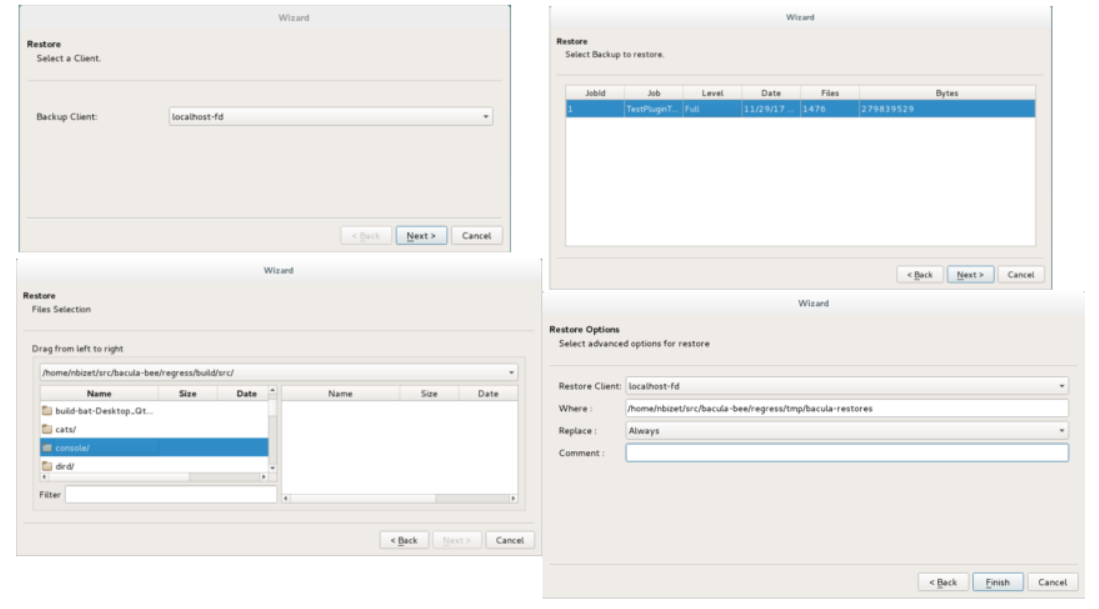

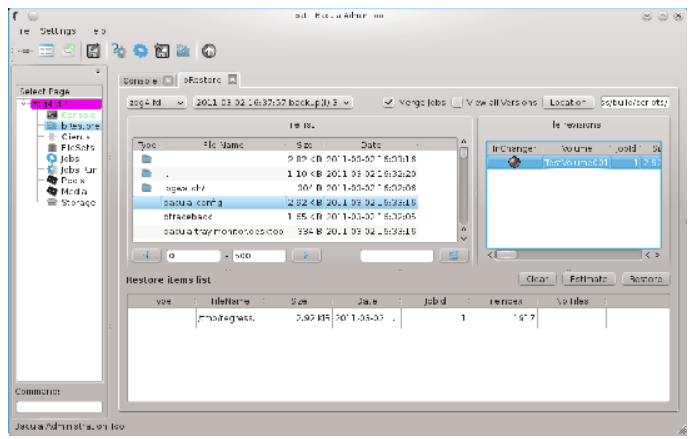

Add searching jobs by filename in the restore wizard

In the restore wizard, it is now possible to select the job to restore by the name of a file stored in the backups. Results can also be limited to a specific path.

Show more detailed job file list

The job file list now displays file details such as file attributes, UID, GID, size, mtime, and whether the file record refers to a saved or a deleted file.

Add graphs to job view page

On the job view page, new pie and bar graphs are available for the selected job.

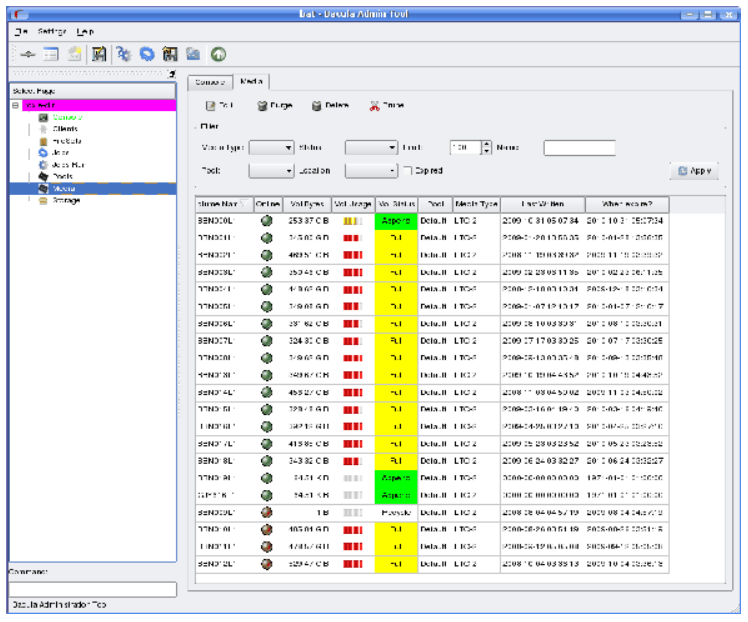

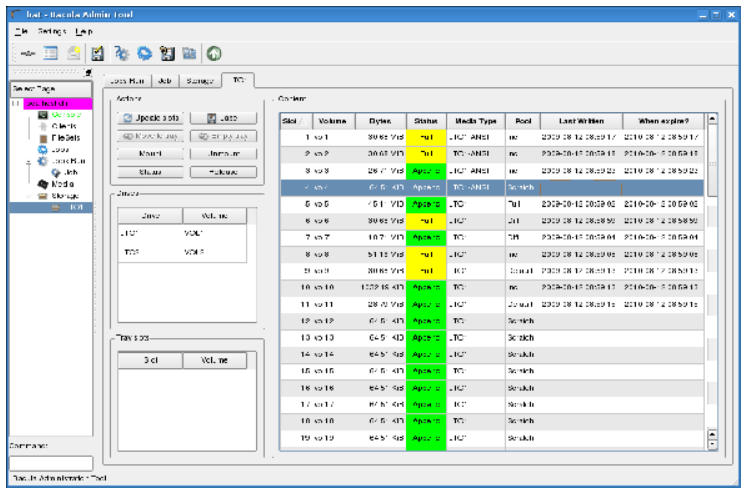

Implement graphical status storage

The storage status page now offers two types of status: raw and graphical. The graphical status page has a modern look and is refreshed asynchronously.

Add Russian translations

Global messages log window

A new window has been added for browsing Bacula logs in a user-friendly way.

Job status weather

The job list page now shows a job status weather indicator that expresses the current condition of each job.

Restore wizard improvements

The restore wizard can now list and browse file names that use non-UTF encodings.

New API endpoints

/oauth2/clients

/oauth2/clients/client_id

/jobs/files

New parameters in API endpoints

/jobs/jobid/files - ‘details’ parameter

/storages/show - ‘output’ parameter

/storages/storageid/show - ‘output’ parameter
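The new endpoints can be called with any HTTP client. The Python sketch below only builds the request; the base URL (including the API version prefix and port), the Bearer token header, and the parameter values such as details=1 are assumptions about a typical OAuth2-enabled Baculum API deployment, so check them against your Baculum API documentation.

```python
from urllib.parse import urlencode
from urllib.request import Request


def api_request(base_url, endpoint, token, **params):
    """Build (but do not send) a GET request for a Baculum API endpoint.
    base_url, the Bearer token header and parameter values are assumptions."""
    url = base_url.rstrip("/") + endpoint
    if params:
        url += "?" + urlencode(params)
    return Request(url, headers={"Authorization": f"Bearer {token}"})


# Hypothetical host, API prefix and token.
req = api_request("https://baculum.example.com:9096/api/v2", "/jobs/123/files",
                  "ACCESS-TOKEN", details=1)
print(req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```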

New Features in 9.6.0

Building 9.6.4 and later

Version 9.6.4 is a major security and bug fix release. We recommend that everyone upgrade as soon as possible.

One significant improvement in this version concerns the AWS S3 cloud driver. First, the code base has been brought much closer to the Enterprise version (though there is still a long way to go). Second, the community code now uses the latest version of libs3 as maintained by Bacula Systems. The libs3 code is available as a tar file for Bacula version 9.6.4 at:

Note: Version 9.6.4 must be compiled with the above libs3 version or later. To build libs3:

Remove any libs3 package installed by your OS

Download the tar file from the link above

tar xvfz libs3-20200523.tar.gz
cd libs3-20200523
make   # should have no errors
sudo make install

When you then run Bacula's ./configure <args>, it should automatically detect and use libs3. The ./configure output shows whether libs3 was found; look for a line such as:

S3 support: yes

Docker Plugin

Containers are a relatively new system-level virtualization concept with less overhead than traditional virtualization. This is because containers use the underlying operating system to provide all the needed services, eliminating the need for multiple operating systems.

Docker containers rely on sophisticated file system level data abstraction with a number of read-only images to create templates used for container initialization.

With the Docker Plugin, Bacula saves the full container image, including all read-only and writable layers, into a single image archive.

It is not necessary to install a Bacula File Daemon in each container; each container can be backed up from a common image repository.

The Bacula Docker Plugin will contact the Docker service to read and save the contents of any system image or container image using snapshots (default behavior) and dump them using the Docker API.

The Docker Plugin chapter provides more detailed information.

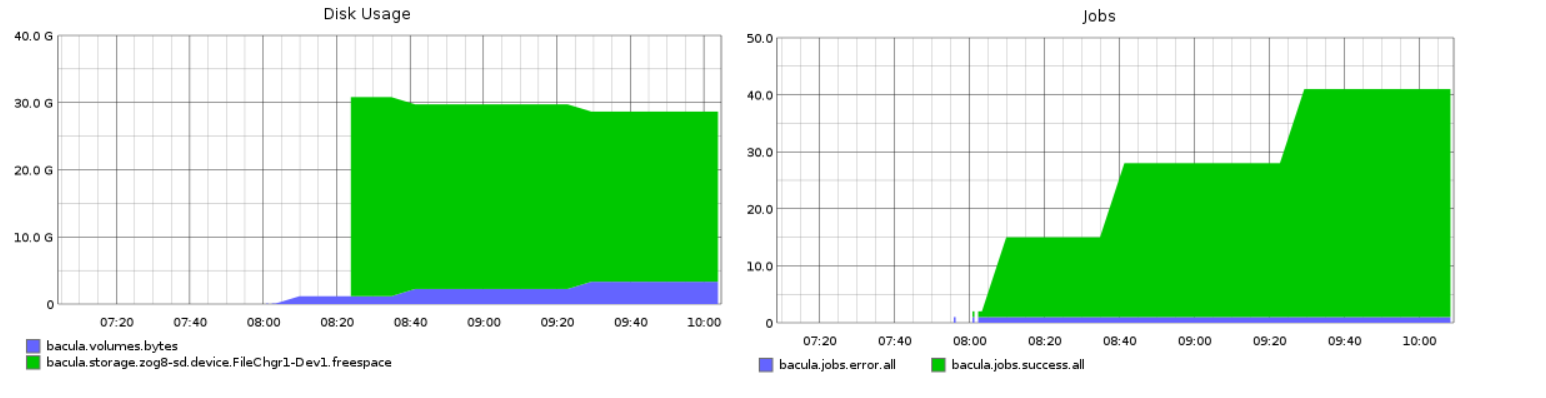

Real-Time Statistics Monitoring

All Bacula daemons can now collect internal performance statistics periodically and provide mechanisms to store the values to a CSV file or to send the values to a Graphite daemon via the network. Graphite is an enterprise-ready monitoring tool (https://graphiteapp.org).

To activate the statistic collector feature, simply define a Statistics resource in the daemon of your choice:

Statistics {
  Name = "Graphite"
  Type = Graphite
  # Graphite host information
  Host = "localhost"
  Port = 2003
}

The collection interval can be changed with the Interval directive (5 minutes by default), and the Metrics directive selects which data to collect (all metrics by default).

If the Graphite daemon cannot be reached, the statistics data are spooled on disk and are sent automatically when the Graphite daemon is available again.
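Graphite's plaintext protocol accepts one "metric.path value timestamp" line per metric over TCP (port 2003 by default). The Python sketch below mimics the spool-and-resend behavior described above for a custom collector; it is an illustration, not the code used by the Bacula daemons, and the spool file path is whatever you choose.

```python
import os
import socket
import time


def format_metric(name, value, timestamp=None):
    """One line of Graphite's plaintext protocol: '<path> <value> <timestamp>'."""
    ts = int(time.time() if timestamp is None else timestamp)
    return f"{name} {value} {ts}\n"


def send_or_spool(lines, host, port, spool_path, timeout=5.0):
    """Send any previously spooled lines plus `lines` to Graphite.
    If the connection fails, write everything still pending to spool_path
    so the next call retries it. Returns True when the data was sent."""
    pending = []
    if os.path.exists(spool_path):
        with open(spool_path) as f:
            pending = f.readlines()
    pending.extend(lines)
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall("".join(pending).encode())
    except OSError:
        with open(spool_path, "w") as f:
            f.writelines(pending)
        return False
    if os.path.exists(spool_path):
        os.remove(spool_path)
    return True


# Example (hypothetical spool path):
# send_or_spool([format_metric("bacula.dir.config.jobs", 3)],
#               "localhost", 2003, "/opt/bacula/working/graphite.spool")
```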

The bconsole statistics command can be used to display the current statistics in various formats (text or json for now).

*statistics
Statistics available for:
1: Director
2: Storage
3: Client
Select daemon type for statistics (1-3): 1
bacula.dir.config.clients=1
bacula.dir.config.jobs=3
bacula.dir.config.filesets=2
bacula.dir.config.pools=3
bacula.dir.config.schedules=2
...

*statistics storage
...
bacula.storage.bac-sd.device.File1.readbytes=214
bacula.storage.bac-sd.device.File1.readtime=12
bacula.storage.bac-sd.device.File1.readspeed=0.000000
bacula.storage.bac-sd.device.File1.writespeed=0.000000
bacula.storage.bac-sd.device.File1.status=1
bacula.storage.bac-sd.device.File1.writebytes=83013529
bacula.storage.bac-sd.device.File1.writetime=20356
...

The statistics bconsole command accepts parameters for scripting; for example, it can export the data in JSON or select which metrics to display.

*statistics bacula.dir.config.clients bacula.dir.config.jobs json
[
  {
    "name": "bacula.dir.config.clients",
    "value": 1,
    "type": "Integer",
    "unit": "Clients",
    "description": "The number of defined clients in the Director."
  },
  {
    "name": "bacula.dir.config.jobs",
    "value": 3,
    "type": "Integer",
    "unit": "Jobs",
    "description": "The number of defined jobs in the Director."
  }
]
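Because the json output is a plain array of objects, it is easy to consume from scripts. A minimal Python sketch, assuming the bconsole command line has already been stripped from the captured output:

```python
import json

OUTPUT = """\
[
  {"name": "bacula.dir.config.clients", "value": 1, "type": "Integer",
   "unit": "Clients",
   "description": "The number of defined clients in the Director."},
  {"name": "bacula.dir.config.jobs", "value": 3, "type": "Integer",
   "unit": "Jobs",
   "description": "The number of defined jobs in the Director."}
]
"""


def metrics_by_name(json_text):
    """Map each metric name to its value."""
    return {metric["name"]: metric["value"] for metric in json.loads(json_text)}


metrics = metrics_by_name(OUTPUT)
print(metrics["bacula.dir.config.jobs"])
```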

The .status statistics command can be used to query the status of the statistics collector thread.

*.status dir statistics
Statistics backend: Graphite is running
type=2 lasttimestamp=12-Sep-18 09:45
interval=300 secs
spooling=in progress
lasterror=Could not connect to localhost:2003 Err=Connection refused
Update Statistics: running interval=300 secs lastupdate=12-Sep-18 09:45
*

New Features in 9.4.0

Cloud Backup

A major problem of Cloud backup is that data transmission to and from the Cloud is very slow compared to traditional backup to disk or tape. The Bacula Cloud drivers provide a means to quickly finish the backups and then to transfer the data from the local cache to the Cloud in the background. This is done by first splitting the data Volumes into small parts that are cached locally then uploading those parts to the Cloud storage service in the background, either while the job continues to run or after the backup Job has terminated. Once the parts are written to the Cloud, they may either be left in the local cache for quick restores or they can be removed (truncate cache).

Cloud Volume Architecture

Note: Regular Bacula disk Volumes are implemented as standard files that reside in the user defined Archive Directory. On the other hand, Bacula Cloud Volumes are directories that reside in the user defined Archive Directory. Each Cloud Volume’s directory contains the cloud Volume parts which are implemented as numbered files (part.1, part.2, …).

Cloud Restore

During a restore, if the needed parts are in the local cache, they will be immediately used, otherwise, they will be downloaded from the Cloud as needed. The restore starts with parts already in the local cache but will wait in turn for any part that needs to be downloaded. The Cloud part downloads proceed while the restore is running.

With most Cloud providers, uploads are usually free of charge, but downloads of data from the Cloud are billed. By using local cache and multiple small parts, you can configure Bacula to substantially reduce download costs.

The MaximumFileSize Device directive is still valid within the Storage Daemon and defines the granularity of a restore chunk. To limit the number of volume parts downloaded during a restore (especially when restoring single files), it might be useful to set MaximumFileSize to a value smaller than or equal to MaximumPartSize.
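Why smaller parts reduce downloads can be seen with a little arithmetic. The Python sketch below assumes, for simplicity, that every part holds exactly MaximumPartSize bytes and that a restore reads one contiguous byte range; real parts need not be exactly full, so treat the result as an estimate.

```python
def parts_needed(start, length, part_size):
    """Part numbers (1-based, matching part.1, part.2, ...) covering the
    byte range [start, start + length) of a Cloud Volume, assuming every
    part holds exactly part_size bytes."""
    if length <= 0:
        return []
    first = start // part_size + 1
    last = (start + length - 1) // part_size + 1
    return list(range(first, last + 1))


# Restoring 64 KiB located 25 MB into the Volume:
for part_size in (10_000_000, 1_000_000_000):
    parts = parts_needed(25_000_000, 65_536, part_size)
    print(f"part size {part_size:>13,} bytes -> download parts {parts}, "
          f"{len(parts) * part_size:,} bytes")
```

With 10 MB parts only one 10 MB part is fetched, whereas 1 GB parts would force a 1 GB download for the same small file.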

Compatibility

Since a Cloud Volume contains the same data as an ordinary Bacula Volume, all existing types of Bacula data may be stored in the cloud, including client-encrypted data, compressed data, plugin data, etc. All existing Bacula functionality, with the exception of deduplication, is available with the Bacula Cloud drivers.

Deduplication and the Cloud

At the current time, Bacula Global Endpoint Backup does not support writing to the cloud because the cloud would be too slow to support large hashed and indexed containers of deduplication data.

Virtual Autochangers and Disk Autochangers

If you use a Bacula Virtual Autochanger you will find it compatible with the new Bacula Cloud drivers. However, if you use a third party disk autochanger script such as Vchanger, unless or until it is modified to handle Volume directories, it may not be compatible with Bacula Cloud drivers.

Security

By default, all data sent to and received from the cloud uses the HTTPS protocol, so your data is encrypted while in transit. However, data that resides in the Cloud is not encrypted by default. If you want extra security for your data while it resides in the cloud, consider using Bacula’s PKI data encryption feature during the backup.

Cache and Pruning

The cache is treated much like a normal disk-based backup, so when configuring Cloud the administrator should set “Archive Device” in the Device resource to a directory where data backed up to disk would normally be stored. Obviously, unless the truncate or prune cache commands are used, the Archive Device will continue to fill.

The cache retention can be controlled per Volume with the CacheRetention attribute. The default value is 0, meaning that the pruning of the cache is disabled.

The CacheRetention value for a volume can be modified with the update command or via the Pool directive CacheRetention for newly created volumes.

New Commands, Resource, and Directives for Cloud

To support Cloud, in Bacula Enterprise 8.8 there are new bconsole commands, new Storage Daemon directives and a new Cloud resource that is specified in the Storage Daemon’s Device resource.

New Cloud Bconsole Commands

Cloud

The new cloud bconsole command allows you to do a number of things with cloud volumes. The options are the following:

None. If you specify no arguments to the command, bconsole will prompt with:

Cloud choice:
1: List Cloud Volumes in the Cloud
2: Upload a Volume to the Cloud
3: Prune the Cloud Cache
4: Truncate a Volume Cache
5: Done
Select action to perform on Cloud (1-5):

The different choices should be rather obvious.

Truncate

This command will attempt to truncate the local cache for the specified Volume. Bacula will prompt you for the information needed to determine the Volume name or names. To avoid the prompts, the following additional command line options may be specified:

Storage=xxx

Volume=xxx

AllPools

AllFromPool

Pool=xxx

MediaType=xxx

Drive=xxx

Slots=nnn

Prune

This command will attempt to prune the local cache for the specified Volume. Bacula will respect the CacheRetention volume attribute to determine whether the cache can be truncated. Only parts that are uploaded to the cloud will be deleted from the cache. Bacula will prompt you for the information needed to determine the Volume name or names. To avoid the prompts, the following additional command line options may be specified:

Storage=xxx

Volume=xxx

AllPools

AllFromPool

Pool=xxx

MediaType=xxx

Drive=xxx

Slots=nnn

Upload

This command will attempt to upload the specified Volumes. It will prompt you for the information needed to determine the Volume name or names. To avoid the prompts, you may specify any of the following additional command line options:

Storage=xxx

Volume=xxx

AllPools

AllFromPool

Pool=xxx

MediaType=xxx

Drive=xxx

Slots=nnn

List

This command will list volumes stored in the Cloud. If a volume name is specified, the command will list all parts for the given volume. To avoid the prompts, you may specify any of the following additional command line options:

Storage=xxx

Volume=xxx

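For scripting, the cloud subcommands and the options listed above can be assembled into a command line and fed to bconsole on its standard input. The Python sketch below assumes bconsole is in PATH and already configured to reach the Director; for simplicity it accepts the same options for every subcommand, whereas list only documents Storage and Volume.

```python
import subprocess

ACTIONS = {"list", "upload", "prune", "truncate"}
# Option spellings as listed in this section.
VALUE_OPTIONS = {"storage": "Storage", "volume": "Volume", "pool": "Pool",
                 "mediatype": "MediaType", "drive": "Drive", "slots": "Slots"}
FLAG_OPTIONS = {"allpools": "AllPools", "allfrompool": "AllFromPool"}


def cloud_command(action, flags=(), **options):
    """Build a 'cloud <action> Key=value ...' command line for bconsole."""
    if action not in ACTIONS:
        raise ValueError(f"unknown cloud action: {action}")
    words = ["cloud", action]
    for key, value in options.items():
        if key.lower() not in VALUE_OPTIONS:
            raise ValueError(f"unknown option: {key}")
        words.append(f"{VALUE_OPTIONS[key.lower()]}={value}")
    for flag in flags:
        if flag.lower() not in FLAG_OPTIONS:
            raise ValueError(f"unknown flag: {flag}")
        words.append(FLAG_OPTIONS[flag.lower()])
    return " ".join(words)


def run_bconsole(command):
    """Send one command to bconsole on standard input and return its output.
    Assumes bconsole is in PATH and configured to reach the Director."""
    result = subprocess.run(["bconsole"], input=command + "\n",
                            capture_output=True, text=True, check=True)
    return result.stdout


print(cloud_command("upload", storage="CloudStorage", volume="Vol0001"))
```

Validating the keywords before calling bconsole avoids the interactive prompts that appear when an option is missing or misspelled.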

Cloud Additions to the DIR Pool Resource

Each Pool resource in the bacula-dir.conf file accepts an additional directive, CacheRetention.

Cloud Additions to the SD Device Resource

Within each cloud Device resource in the bacula-sd.conf file, the new keyword Cloud must be specified on the Device Type directive, and two new directives are available: Maximum Part Size and Cloud.

New Cloud SD Device Directives

Device Type

The Device Type has been extended to include the new keyword Cloud to specify that the device supports cloud Volumes. Example:

Device Type = Cloud

Cloud

The new Cloud directive permits specification of a new Cloud Resource. As with other Bacula resource specifications, one specifies the name of the Cloud resource. Example:

Cloud = S3Cloud

Maximum Part Size

This directive allows one to specify the maximum size for each part. Smaller part sizes will reduce restore costs, but may require a small additional overhead to handle multiple parts. The maximum number of parts permitted in a Cloud Volume is 524,288. The maximum size of any given part is approximately 17.5TB.
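The part-count limit caps the size of a Cloud Volume at Maximum Part Size multiplied by 524,288. With a part size of 10000000 bytes (10 MB), that is 5,242,880,000,000 bytes, about 5.2 TB. A small Python helper for this arithmetic, simplified by assuming that every part except the last is full:

```python
MAX_PARTS_PER_VOLUME = 524_288  # limit stated above


def parts_for_volume(volume_bytes, max_part_size):
    """Number of parts a Volume of volume_bytes occupies, assuming every
    part except the last is exactly max_part_size bytes."""
    parts = max(1, -(-volume_bytes // max_part_size))  # ceiling division
    if parts > MAX_PARTS_PER_VOLUME:
        raise ValueError(f"{parts} parts exceed the limit of {MAX_PARTS_PER_VOLUME}")
    return parts


def max_volume_bytes(max_part_size):
    """Largest Volume size that stays within the part-count limit."""
    return max_part_size * MAX_PARTS_PER_VOLUME


print(parts_for_volume(50_000_000_000, 10_000_000))  # 50 GB Volume
print(f"{max_volume_bytes(10_000_000):,}")
```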

Example Cloud Device Specification

An example of a Cloud Device Resource might be:

Device {
  Name = CloudStorage
  Device Type = Cloud
  Cloud = S3Cloud
  Archive Device = /opt/bacula/backups
  Maximum Part Size = 10000000
  Media Type = CloudType
  LabelMedia = yes
  Random Access = Yes;
  AutomaticMount = yes
  RemovableMedia = no
  AlwaysOpen = no
}

As you can see from the above, the Cloud directive in the Device resource contains the name (S3Cloud) of the Cloud resource that is shown below.

Note also that the Archive Device is specified in the same manner as one would use for a File device. However, instead of containing files named after Volumes, the Archive Device of the Cloud drivers contains the local cache, which consists of one directory per Volume, each holding the parts associated with that Volume. So with the above Device resource, two cache Volumes, Volume0001 and Volume0002, would have the following layout on disk:

/opt/bacula/backups
/opt/bacula/backups/Volume0001
/opt/bacula/backups/Volume0001/part.1
/opt/bacula/backups/Volume0001/part.2
/opt/bacula/backups/Volume0001/part.3
/opt/bacula/backups/Volume0001/part.4
/opt/bacula/backups/Volume0002
/opt/bacula/backups/Volume0002/part.1
/opt/bacula/backups/Volume0002/part.2
/opt/bacula/backups/Volume0002/part.3

The Cloud Resource

The Cloud resource accepts a number of directives, as shown in the following examples.

For the default US East location:

Cloud {
  Name = S3Cloud
  Driver = "S3"
  HostName = "s3.amazonaws.com"
  BucketName = "BaculaVolumes"
  AccessKey = "BZIXAIS39DP9YNER5DFZ"
  SecretKey = "beesheeg7iTe0Gaexee7aedie4aWohfuewohGaa0"
  Protocol = HTTPS
  UriStyle = VirtualHost
  Truncate Cache = No
  Upload = EachPart
  Region = "us-east-1"
  MaximumUploadBandwidth = 5MB/s
}

For the Central Europe location:

Cloud {
  Name = S3Cloud
  Driver = "S3"
  HostName = "s3-eu-central-1.amazonaws.com"
  BucketName = "BaculaVolumes"
  AccessKey = "BZIXAIS39DP9YNER5DFZ"
  SecretKey = "beesheeg7iTe0Gaexee7aedie4aWohfuewohGaa0"
  Protocol = HTTPS
  UriStyle = VirtualHost
  Truncate Cache = No
  Upload = EachPart
  Region = "eu-central-1"
  MaximumUploadBandwidth = 4MB/s
}

For Amazon Cloud, refer to http://docs.aws.amazon.com/general/latest/gr/rande.html#s3_region for a complete list of regions and their corresponding endpoints, and use them as the Region and HostName directives, respectively.

For the CEPH S3 interface:

Cloud {
  Name = CEPH_S3
  Driver = "S3"
  HostName = ceph.mydomain.lan
  BucketName = "CEPHBucket"
  AccessKey = "xxxXXXxxxx"
  SecretKey = "xxheeg7iTe0Gaexee7aedie4aWohfuewohxx0"
  Protocol = HTTPS
  Upload = EachPart
  UriStyle = Path   # Must be set for CEPH
}

The directives of the above Cloud resource for the S3 driver are defined as follows:

Name = Device-Name

The name of the Cloud resource. This is the logical Cloud name, and may be any string up to 127 characters in length. Shown as S3Cloud above.

Description = Text

The description is used for display purposes, as is the case with all resources.

Driver = DriverName

This defines which driver to use. It can be S3. There is also a File driver, which is used mostly for testing.

Host Name = Name

This directive specifies the hostname to be used in the URL. Each Cloud service provider has a different and unique hostname. The maximum size is 255 characters and may contain a TCP port specification.

Bucket Name = Name

This directive specifies the bucket name that you wish to use on the Cloud service. This name is normally a unique name that identifies where you want to place your Cloud Volume parts. With Amazon S3, the bucket must be created beforehand on the Cloud service. The maximum bucket name size is 255 characters.

Access Key = String

The access key is your unique user identifier given to you by your cloud service provider.

Secret Key = String

The secret key is the security key that was given to you by your cloud service provider. It is equivalent to a password.

Protocol = HTTP | HTTPS

The protocol defines the communications protocol to use with the cloud service provider. The two protocols currently supported are: HTTPS and HTTP. The default is HTTPS.

Uri Style = VirtualHost | Path

This directive specifies the URI style to use to communicate with the cloud service provider. The two Uri Styles currently supported are: VirtualHost and Path. The default is VirtualHost.

Truncate Cache = Truncate-kw

This directive specifies when Bacula should automatically remove (truncate) the local cache parts. Local cache parts can only be removed if they have been uploaded to the cloud. The currently implemented values are:

No: Do not remove the cache. With this option you must manually delete the cache parts with a bconsole Truncate Cache command, or do so with an Admin Job that runs a Truncate Cache command. This is the default.

AfterUpload: Each part will be removed just after it is uploaded. Note that if this option is specified, all restores will require a download from the Cloud. Note: Not yet implemented.

AtEndOfJob: With this option, at the end of the Job, every part that has been uploaded to the Cloud will be removed (truncated). Note: Not yet implemented.

Upload = Upload-kw

This directive specifies when local cache parts will be uploaded to the Cloud. The options are:

No: Do not upload cache parts. With this option you must manually upload the cache parts with a bconsole Upload command, or do so with an Admin Job that runs an Upload command. This is the default.

EachPart: With this option, each part will be uploaded when it is complete, i.e., when the next part is created or at the end of the Job.

AtEndOfJob: With this option, all parts that have not been previously uploaded will be uploaded at the end of the Job. Note: Not yet implemented.
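The interplay between Upload and Truncate Cache can be summarized in a toy Python model. It is hypothetical (not Bacula code), covers only the implemented upload values No and EachPart, and encodes the rule that only parts already uploaded can be removed from the local cache.

```python
class CacheVolume:
    """Toy model of one Cloud Volume's local cache (hypothetical, not Bacula
    code). Parts are numbered from 1; only uploaded parts can be truncated."""

    def __init__(self, upload="EachPart"):
        if upload not in ("No", "EachPart"):
            raise ValueError("only the implemented values No and EachPart are modeled")
        self.upload_policy = upload
        self.last_part = 0
        self.cached = set()    # parts present in the local cache
        self.uploaded = set()  # parts already stored in the cloud

    def _upload(self, part):
        self.uploaded.add(part)  # stand-in for the real transfer

    def new_part(self):
        """Start the next part. With EachPart, the previous part is now
        complete and is uploaded."""
        if self.upload_policy == "EachPart" and self.last_part:
            self._upload(self.last_part)
        self.last_part += 1
        self.cached.add(self.last_part)
        return self.last_part

    def end_job(self):
        """With EachPart, the last part is uploaded at the end of the Job."""
        if self.upload_policy == "EachPart" and self.last_part:
            self._upload(self.last_part)

    def upload_all(self):
        """Manual upload, as with Upload = No followed by a bconsole upload."""
        for part in sorted(self.cached - self.uploaded):
            self._upload(part)

    def truncate_cache(self):
        """Remove cached parts, but only those already uploaded."""
        removable = self.cached & self.uploaded
        self.cached -= removable
        return sorted(removable)


vol = CacheVolume("No")
for _ in range(3):
    vol.new_part()
vol.end_job()
print(vol.truncate_cache())  # nothing uploaded yet, so nothing removed
vol.upload_all()
print(vol.truncate_cache())
```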

Maximum Upload Bandwidth = speed

The default is unlimited, but by using this directive, you may limit the upload bandwidth used globally by all devices referencing this Cloud resource.

Maximum Download Bandwidth = speed

The default is unlimited, but by using this directive, you may limit the download bandwidth used globally by all devices referencing this Cloud resource.

Region = String

The Cloud resource can be configured to use a specific endpoint within a region. This directive is required for AWS-V4 regions. For example: Region = "eu-central-1"

File Driver for the Cloud

As mentioned above, one may specify the keyword File on the Driver directive of the Cloud resource. Instead of writing to the Cloud, Bacula will then create a Cloud Volume but write it to disk. The rest of this section applies to the Cloud resource directives when the File driver is specified.

The following Cloud directives are ignored: Bucket Name, Access Key, Secret Key, Protocol, Uri Style. The directives Truncate Cache and Upload work on the local cache in the same manner as they do for the S3 driver.

The main difference to note is that the Host Name specifies the destination directory for the Cloud Volume files, and this Host Name must be different from the Archive Device name; otherwise there will be a conflict between the local cache (in the Archive Device directory) and the destination Cloud Volumes (in the Host Name directory).

As noted above, the File driver is mostly used for testing purposes, and we do not particularly recommend using it. However, if you have a particularly slow backup device you might want to stage your backup data into an SSD or disk using the local cache feature of the Cloud device, and have your Volumes transferred in the background to a slow File device.

WORM Tape Support

Automatic detection of WORM (Write Once Read Many) tapes has been added in 10.2.

When a WORM tape is detected, the catalog volume entry is automatically changed to set Recycle=no. This prevents Bacula from automatically recycling the volume.

There is no change in how the Job and File records are pruned from the catalog as that is a separate issue that is currently adequately implemented in Bacula.

When a WORM tape is detected, the SD shows WORM in the device state output (the debug level must be greater than or equal to 6); otherwise the status shows !WORM.

Device state:

OPENED !TAPE LABEL APPEND !READ !EOT !WEOT !EOF WORM !SHORT !MOUNTED ...

The output of the used volume status has been modified to include the worm state. It shows worm=1 for a worm cassette and worm=0 otherwise. Example:

Used Volume status:
Reserved volume: TestVolume001 on Tape device "nst0" (/dev/nst0)
Reader=0 writers=0 reserves=0 volinuse=0 worm=1
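To check the WORM state from a monitoring script, the two markers shown above can be parsed. The Python sketch below is based only on the example output in this section; the exact layout of the status output may differ between versions.

```python
import re

USED_VOLUME_STATUS = """\
Used Volume status:
Reserved volume: TestVolume001 on Tape device "nst0" (/dev/nst0)
Reader=0 writers=0 reserves=0 volinuse=0 worm=1
"""

DEVICE_STATE = "OPENED !TAPE LABEL APPEND !READ !EOT !WEOT !EOF WORM !SHORT !MOUNTED"


def worm_volumes(status_text):
    """Map each reserved Volume to True/False using the worm= field that
    follows its 'Reserved volume:' line."""
    result, current = {}, None
    for line in status_text.splitlines():
        reserved = re.search(r"Reserved volume:\s*(\S+)", line)
        if reserved:
            current = reserved.group(1)
            continue
        worm = re.search(r"\bworm=([01])\b", line)
        if worm and current is not None:
            result[current] = worm.group(1) == "1"
            current = None
    return result


def device_is_worm(state_line):
    """True if the device state contains the WORM flag (not !WORM)."""
    return "WORM" in state_line.split()


print(worm_volumes(USED_VOLUME_STATUS), device_is_worm(DEVICE_STATE))
```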

The following programs are needed for the WORM tape detection:

sdparm

tapeinfo

The new Storage Device directive Worm Command must be configured as well as the Control Device directive (used with the Tape Alert feature).

Device {
  Name = "LTO-0"
  Archive Device = "/dev/nst0"
  Control Device = "/dev/sg0"   # from lsscsi -g
  Worm Command = "/opt/bacula/scripts/isworm %l"
  ...
}

New Features in 9.2.0

This chapter describes new features that have been added to the current version of Bacula in version 9.2.0.

In general, this is a fairly substantial release because it contains a very large number of bug fixes backported from the Bacula Enterprise version. There are also a few new features backported from Bacula Enterprise.

Enhanced Autochanger Support

Note: this feature was actually backported into version 9.0.0, but the documentation was added long after the 9.0.0 release. To call your attention to this new feature, we have also included the documentation here.

To make Bacula function properly with multiple Autochanger definitions, in the Director’s configuration, you must adapt your bacula-dir.conf Storage directives.

Each autochanger that you have defined in an Autochanger resource in the Storage daemon’s bacula-sd.conf file must have a corresponding Autochanger resource defined in the Director’s bacula-dir.conf file. Normally you will already have a Storage resource that points to the Storage daemon’s Autochanger resource, so you only need to change that Storage resource into an Autochanger resource. In addition, the Autochanger = yes directive is not needed in the Director’s Autochanger resource: because the resource type is Autochanger, the Director already knows that it represents an autochanger.

In addition to the above change (Storage to Autochanger), you must modify any additional Storage resources that correspond to devices that are part of the Autochanger device. Instead of the previous Autochanger = yes directive they should be modified to be Autochanger = xxx where you replace the xxx with the name of the Autochanger.

For example, in the bacula-dir.conf file:

Autochanger { # New resource

Name = Changer-1

Address = cibou.company.com

SDPort = 9103

Password = "xxxxxxxxxx"

Device = LTO-Changer-1

Media Type = LTO-4

Maximum Concurrent Jobs = 50

}

Storage {

Name = Changer-1-Drive0

Address = cibou.company.com

SDPort = 9103

Password = "xxxxxxxxxx"

Device = LTO4_1_Drive0

Media Type = LTO-4

Maximum Concurrent Jobs = 5

Autochanger = Changer-1 # New directive

}

Storage {

Name = Changer-1-Drive1

Address = cibou.company.com

SDPort = 9103

Password = "xxxxxxxxxx"

Device = LTO4_1_Drive1

Media Type = LTO-4

Maximum Concurrent Jobs = 5

Autochanger = Changer-1 # New directive

}

...

Note that Storage resources Changer-1-Drive0 and Changer-1-Drive1 are not required since they make up part of an autochanger, and normally, Jobs refer only to the Autochanger resource. However, by referring to those Storage definitions in a Job, you will use only the indicated drive. This is not normally what you want to do, but it is very useful and often used for reserving a drive for restores. See the Storage daemon example .conf below and the use of AutoSelect = no.

So, in summary, the changes are:

Change Storage to Autochanger in the resource that describes the autochanger (Changer-1 in the example above).

Remove the Autochanger = yes directive from that Autochanger resource.

In each Storage resource for a device that belongs to the Autochanger, change Autochanger = yes so that it points to the Autochanger resource. For the example above, this is the directive Autochanger = Changer-1.

Please note that if you define two different autochangers, you must give a unique Media Type to the Volumes in each autochanger. More specifically, you may have multiple Media Types, but you cannot have Volumes with the same Media Type in two different autochangers. If you attempt to do so, Bacula will most likely reference the wrong autochanger (Storage) and not find the correct Volume.
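You can check the Media Type rule above mechanically before deploying a configuration. The following minimal Python sketch assumes you have already collected the set of Media Types used by each autochanger, by whatever means. The shared_media_types helper and the Changer-2 data are hypothetical.

```python
from collections import defaultdict


def shared_media_types(changers: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return each Media Type used by more than one autochanger, with the sorted changer names."""
    owners: dict[str, set[str]] = defaultdict(set)
    for changer, media_types in changers.items():
        for media_type in media_types:
            owners[media_type].add(changer)
    return {mt: sorted(names) for mt, names in owners.items() if len(names) > 1}


# Hypothetical site: Changer-2 wrongly reuses the LTO-4 Media Type of Changer-1.
conflicts = shared_media_types({"Changer-1": {"LTO-4"}, "Changer-2": {"LTO-4", "LTO-5"}})
print(conflicts)  # {'LTO-4': ['Changer-1', 'Changer-2']}
```

An empty result means that no two autochangers share a Media Type.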

New Prune Command Option

The bconsole prune command can now run the pruning algorithm on all volumes from a Pool or on all Pools.

prune allfrompool pool=Default yes

prune allfrompool allpools yes

BConsole Features

Delete a Client

The delete client bconsole command deletes the database record of a client that is no longer defined in the configuration file. It also removes all other records (Jobs, Files, …) associated with the deleted client.

Status Schedule Enhancements

The status schedule command can now accept multiple client or job keywords on the command line. The limit parameter is disabled when the days parameter is used. The output is now ordered by day.

Restore option noautoparent

During a bconsole restore session, parent directories are automatically selected to avoid issues with permissions. It is possible to disable this feature with the noautoparent command line parameter.

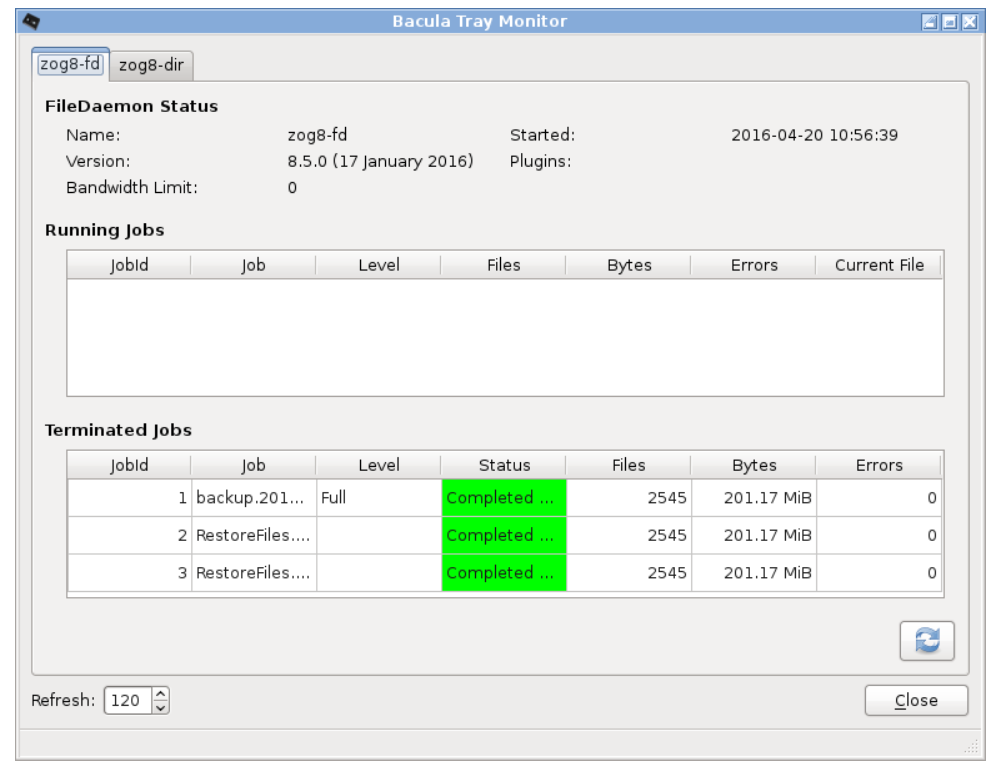

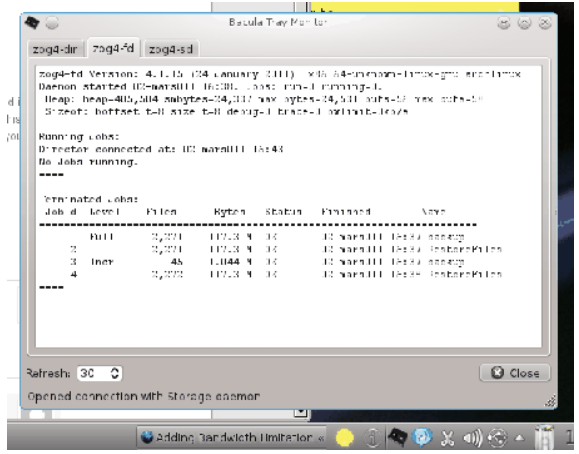

Tray Monitor Restore Screen

It is now possible to restore files from the Tray Monitor GUI program.

New Features in 9.0.0

This chapter describes new features that have been added to the current version of Bacula in version 9.0.0.

Enhanced Autochanger Support

To make Bacula function properly with multiple Autochanger definitions, in the Director’s configuration, you must adapt your bacula-dir.conf Storage directives.

Each autochanger that you have defined in an Autochanger resource in the Storage daemon’s bacula-sd.conf file must have a corresponding Autochanger resource defined in the Director’s bacula-dir.conf file. Normally you will already have a Storage resource that points to the Storage daemon’s Autochanger resource, so you only need to change that Storage resource into an Autochanger resource. In addition, the Autochanger = yes directive is not needed in the Director’s Autochanger resource: because the resource type is Autochanger, the Director already knows that it represents an autochanger.

In addition to the above change (Storage to Autochanger), you must modify any additional Storage resources that correspond to devices that are part of the Autochanger device. Instead of the previous Autochanger = yes directive they should be modified to be Autochanger = xxx where you replace the xxx with the name of the Autochanger.

For example, in the bacula-dir.conf file:

Autochanger { # New resource

Name = Changer-1

Address = cibou.company.com

SDPort = 9103

Password = "xxxxxxxxxx"

Device = LTO-Changer-1

Media Type = LTO-4

Maximum Concurrent Jobs = 50

}

Storage {

Name = Changer-1-Drive0

Address = cibou.company.com

SDPort = 9103

Password = "xxxxxxxxxx"

Device = LTO4_1_Drive0

Media Type = LTO-4

Maximum Concurrent Jobs = 5

Autochanger = Changer-1 # New directive

}

Storage {

Name = Changer-1-Drive1

Address = cibou.company.com

SDPort = 9103

Password = "xxxxxxxxxx"

Device = LTO4_1_Drive1

Media Type = LTO-4

Maximum Concurrent Jobs = 5

Autochanger = Changer-1 # New directive

}

...

Note that Storage resources Changer-1-Drive0 and Changer-1-Drive1 are not required since they make up part of an autochanger, and normally, Jobs refer only to the Autochanger resource. However, by referring to those Storage definitions in a Job, you will use only the indicated drive. This is not normally what you want to do, but it is very useful and often used for reserving a drive for restores. See the Storage daemon example .conf below and the use of AutoSelect = no.

So, in summary, the changes are:

Change Storage to Autochanger in the resource that describes the autochanger (Changer-1 in the example above).

Remove the Autochanger = yes directive from that Autochanger resource.

In each Storage resource for a device that belongs to the Autochanger, change Autochanger = yes so that it points to the Autochanger resource. For the example above, this is the directive Autochanger = Changer-1.

Source Code for Windows

With this version of Bacula, we have included the old source code for Windows and also updated it to contain the code from the latest Bacula Enterprise version. The project is also directly distributing binaries for Windows rather than relying on Bacula Systems to supply them.

Maximum Virtual Full Interval Option

Two new director directives have been added: Max Virtual Full Interval and Virtual Full Backup Pool.

The Max Virtual Full Interval directive behaves similarly to Max Full Interval, but for Virtual Full jobs. If Bacula sees that there has not been a Full backup within the Max Virtual Full Interval, it will upgrade the job to Virtual Full. If you set both Max Full Interval and Max Virtual Full Interval, Max Full Interval should take precedence.

The Virtual Full Backup Pool directive also allows you to change the pool. You will probably want to use these two directives together, but that may depend on the specifics of your setup. If you set Max Virtual Full Interval without setting Virtual Full Backup Pool, Bacula will use whatever the “default” pool is set to. This is the same behavior as with Max Full Interval.
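The interplay of the two interval directives can be summarized as a small decision function. This Python sketch reflects one reading of the text above. It assumes that when both intervals have expired the Full upgrade wins, because Max Full Interval takes precedence, and that both intervals are measured from the last Full. It is not Bacula's scheduler code, and effective_level is a hypothetical name.

```python
from datetime import timedelta
from typing import Optional


def effective_level(requested: str, since_last_full: timedelta,
                    max_full_interval: Optional[timedelta] = None,
                    max_virtual_full_interval: Optional[timedelta] = None) -> str:
    """Return the level a scheduled job would run at, under one reading of the directives."""
    if requested in ("Full", "VirtualFull"):
        return requested
    # Checked first, so Max Full Interval wins when both intervals have expired.
    if max_full_interval is not None and since_last_full > max_full_interval:
        return "Full"
    if max_virtual_full_interval is not None and since_last_full > max_virtual_full_interval:
        return "VirtualFull"
    return requested


monthly_full, weekly_virtual_full = timedelta(days=30), timedelta(days=7)
for days in (3, 8, 31):
    level = effective_level("Incremental", timedelta(days=days), monthly_full, weekly_virtual_full)
    print(days, level)
# 3 Incremental
# 8 VirtualFull
# 31 Full
```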

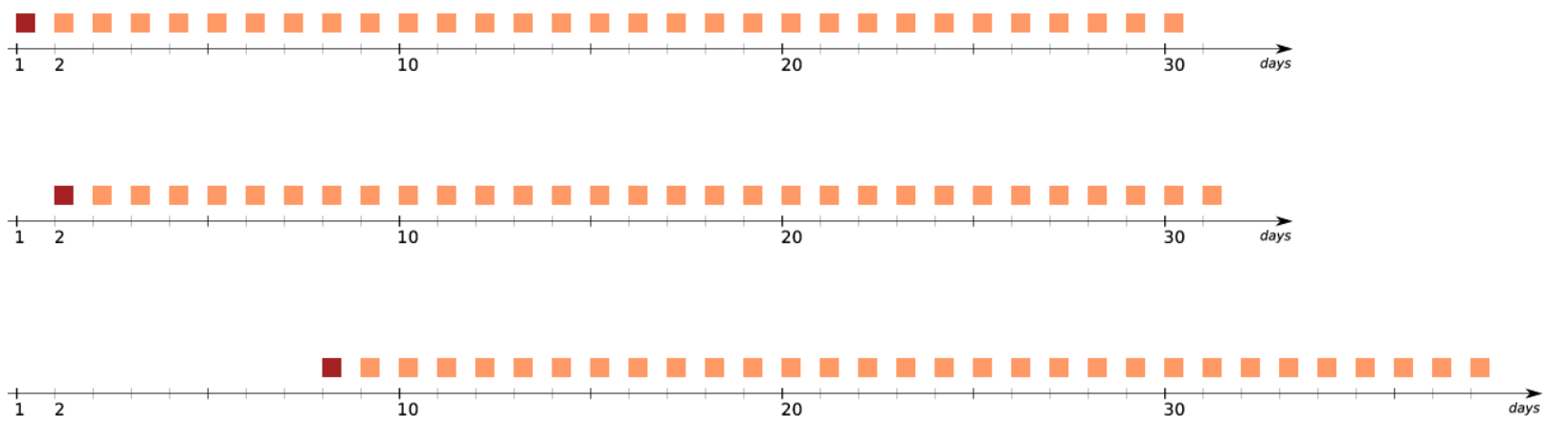

Progressive Virtual Full

In Bacula version 9.0.0, we have added a new Directive named Backups To Keep that permits you to implement Progressive Virtual Fulls within Bacula. Sometimes this feature is known as Incremental Forever with Consolidation.

To implement the Progressive Virtual Full feature, simply add the Backups To Keep directive to your Virtual Full backup Job resource. The value specified on the directive indicates the number of backup jobs that should not be merged into the Virtual Full (i.e. the number of backup jobs that should remain after the Virtual Full has completed). The default is zero, which reverts to a standard Virtual Full that consolidates all the backup jobs that it finds.

Backups To Keep Directive

The new BackupsToKeep directive is specified in the Job Resource and has the form:

Backups To Keep = 30

where the value (30 in the above figure and example) is the number of backups to retain. When this directive is present during a Virtual Full (it is ignored for other Job types), it will look for the most recent Full backup that has more subsequent backups than the value specified. In the above example, the Job will simply terminate unless there is a Full backup followed by at least 31 backups of either level Differential or Incremental.

Assuming that the last Full backup is followed by 32 Incremental backups, a Virtual Full will be run that consolidates the Full with the first two Incrementals that were run after the Full. The result is that you will end up with a Full followed by 30 Incremental backups. The Job Resource in bacula-dir.conf to accomplish this would be:

Job {

Name = "VFull"

Type = Backup

Level = VirtualFull

Client = "my-fd"

File Set = "FullSet"

Accurate = Yes

Backups To Keep = 30

}
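The selection rule for Backups To Keep can be sketched in a few lines of Python. The sketch assumes the jobs are given oldest first, as a chain that starts with the Full and continues with its Incremental or Differential backups. The function jobs_to_consolidate and the JobIds are hypothetical; Bacula makes its real selection from the catalog.

```python
def jobs_to_consolidate(chain: list[int], backups_to_keep: int) -> list[int]:
    """Return the JobIds a Progressive Virtual Full would merge, oldest first.

    chain[0] is the Full; chain[1:] are its Incremental/Differential backups.
    An empty result means there is nothing to do and the job terminates.
    """
    full, later = chain[0], chain[1:]
    if backups_to_keep == 0:
        return list(chain)  # standard Virtual Full: consolidate everything
    if len(later) <= backups_to_keep:
        return []  # need more than backups_to_keep backups after the Full
    return [full] + later[: len(later) - backups_to_keep]


chain = list(range(100, 133))  # hypothetical: Full 100 followed by 32 Incrementals
merged = jobs_to_consolidate(chain, backups_to_keep=30)
print(merged)                    # [100, 101, 102]
print(len(chain) - len(merged))  # 30 Incrementals left after the new Full
```

This matches the 32-Incremental scenario described above: the Full and the first two Incrementals are merged, and 30 Incrementals remain.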

Delete Consolidated Jobs

The new directive Delete Consolidated Jobs expects a yes or no value. If it is set to yes, any old Job that is consolidated during a Virtual Full will be deleted. In the example above, a Full plus two other jobs (Incrementals in that case) were consolidated into a new Full backup. The original Full and the other consolidated Jobs will be deleted. The default value is no.

Virtual Full Compatibility

Virtual Full as well as Progressive Virtual Full works with any standard backup Job.

However, it should be noted that Virtual Full jobs are not compatible with any plugins that you may be using.

TapeAlert Enhancements

There are some significant enhancements to the TapeAlert feature of Bacula. Several directives are used slightly differently, which unfortunately causes a compatibility problem with the old TapeAlert implementation. Consequently, if you are already using TapeAlert, you must modify your bacula-sd.conf in order for Tape Alerts to work. See below for the details …

What is New

First, you must define an Alert Command directive in the Device resource. It calls the new tapealert script that is installed in the scripts directory (normally: /opt/bacula/scripts). It is defined as follows:

Device {

Name = ...

Archive Device = /dev/nst0

Alert Command = "/opt/bacula/scripts/tapealert %l"

Control Device = /dev/sg1 # must be SCSI ctl for /dev/nst0

...

}

In addition, the Control Device directive in the Storage Daemon’s conf file must be specified in each Device resource to permit Bacula to detect tape alerts on specific devices (normally only tape devices).

Once the above mentioned two directives (Alert Command and Control Device) are in place in each of your Device resources, Bacula will check for tape alerts at two points:

After the Drive is used and it becomes idle.

After each read or write error on the drive.

At each of the above times, Bacula will call the new tapealert script, which uses the tapeinfo program. The tapeinfo utility is part of the sg3-utils (apt) and sg3_utils (rpm) packages, which must be installed on your systems. Then, for each alert that Bacula finds for that drive, Bacula will emit a Job message that is either INFO, WARNING, or FATAL. The level depends on the designation in the Tape Alert specification published by the T10 Technical Committee on SCSI Storage Interfaces (www.t10.org). For the specification, please see: www.t10.org/ftp/t10/document.02/02-142r0.pdf

As a somewhat extreme example, if tape alerts 3, 5, and 39 are set, you will get the following output in your backup job.

17-Nov 13:37 rufus-sd JobId 1: Error: block.c:287 Write error at 0:17 on device "tape" (/home/kern/bacula/k/regress/working/ach/drive0) Vol=TestVolume001. ERR=Input/output error.

17-Nov 13:37 rufus-sd JobId 1: Fatal error: Alert: Volume="TestVolume001" alert=3: ERR=The operation has stopped because an error has occurred while reading or writing data which the drive cannot correct. The drive had a hard read or write error

17-Nov 13:37 rufus-sd JobId 1: Fatal error: Alert: Volume="TestVolume001" alert=5: ERR=The tape is damaged or the drive is faulty. Call the tape drive supplier helpline. The drive can no longer read data from the tape

17-Nov 13:37 rufus-sd JobId 1: Warning: Disabled Device "tape" (/home/kern/bacula/k/regress/working/ach/drive0) due to tape alert=39.

17-Nov 13:37 rufus-sd JobId 1: Warning: Alert: Volume="TestVolume001" alert=39: ERR=The tape drive may have a fault. Check for availability of diagnostic information and run extended diagnostics if applicable. The drive may have had a failure which may be identified by stored diagnostic information or by running extended diagnostics (eg Send Diagnostic). Check the tape drive users manual for instructions on running extended diagnostic tests and retrieving diagnostic data.

Without the tape alert feature enabled, you would only get the first error message above, which is the error Bacula received from the drive. The above output also shows two consequences of the alerts. First, the tape drive is disabled, because alert 39 is one of the drive-disabling alerts. Second, the Job is failed, because alerts 3 and 5 are reported as fatal errors.

If you attempt to run another Job using the Device that has been disabled, you will get a message similar to the following:

17-Nov 15:08 rufus-sd JobId 2: Warning: Device "tape" requested by DIR is disabled.

and the Job may be failed if no other drive can be found.

Once the problem with the tape drive has been corrected, you can clear the tape alerts and re-enable the device with the Bacula bconsole command such as the following:

enable Storage=Tape

Note, when you enable the device, the list of prior tape alerts for that drive will be discarded.

Since it is possible to miss tape alerts, Bacula maintains a temporary list of the last 8 alerts, and each time Bacula calls the tapealert script, it will keep up to 10 alert status codes. Normally there will only be one or two alert errors for each call to the tapealert script.

Once a drive has one or more tape alerts, you can see them by using the bconsole status command as follows:

status storage=Tape

which produces the following output:

Device Vtape is "tape" (/home/kern/bacula/k/regress/working/ach/drive0)

mounted with:

Volume: TestVolume001

Pool: Default

Media type: tape

Device is disabled. User command.

Total Bytes Read=0 Blocks Read=1 Bytes/block=0

Positioned at File=1 Block=0

Critical Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001" alert=Hard Error

Critical Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001" alert=Read Failure

Warning Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001" alert=Diagnostics Required

If you want to see the long message associated with each of the alerts, simply set the debug level to 10 or more and re-issue the status command:

setdebug storage=Tape level=10

status storage=Tape

...

Critical Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001" flags=0x0 alert=The operation has stopped because an error has occurred while reading or writing data which the drive cannot correct. The drive had a hard read or write error

Critical Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001" flags=0x0 alert=The tape is damaged or the drive is faulty. Call the tape drive supplier helpline. The drive can no longer read data from the tape

Warning Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001" flags=0x1 alert=The tape drive may have a fault. Check for availability of diagnostic information and run extended diagnostics if applicable. The drive may have had a failure which may be identified by stored diagnostic information or by running extended diagnostics (eg Send Diagnostic). Check the tape drive users manual for instructions on running extended diagnostic tests and retrieving diagnostic data.

...

The next time you enable the Device, either with bconsole or by restarting the Storage Daemon, all the saved alert messages will be discarded.

Handling of Alerts

Tape Alerts numbered 7,8,13,14,20,22,52,53, and 54 will cause Bacula to disable the current Volume.

Tape Alerts numbered 14,20,29,30,31,38, and 39 will cause Bacula to disable the drive.

Please note that certain tape alerts such as 14 have multiple effects (disable the Volume and disable the drive).
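The two alert lists above, together with the 8-entry alert history mentioned earlier, can be modeled as follows. This is an illustrative Python sketch only. The sets are copied from the lists above, while alert_actions and AlertHistory are hypothetical names that do not correspond to Bacula internals.

```python
from collections import deque

VOLUME_DISABLING_ALERTS = frozenset({7, 8, 13, 14, 20, 22, 52, 53, 54})
DRIVE_DISABLING_ALERTS = frozenset({14, 20, 29, 30, 31, 38, 39})


def alert_actions(alerts) -> dict[str, bool]:
    """Which of the two documented actions a set of TapeAlert numbers triggers."""
    codes = set(alerts)
    return {
        "disable_volume": bool(codes & VOLUME_DISABLING_ALERTS),
        "disable_drive": bool(codes & DRIVE_DISABLING_ALERTS),
    }


class AlertHistory:
    """Keeps only the most recent alerts, like the temporary list of the last 8."""

    def __init__(self, size: int = 8):
        self._alerts = deque(maxlen=size)

    def record(self, codes) -> None:
        self._alerts.extend(codes)

    def clear(self) -> None:  # what re-enabling the device does to the list
        self._alerts.clear()

    def recent(self) -> list[int]:
        return list(self._alerts)


print(alert_actions([3, 5, 39]))  # {'disable_volume': False, 'disable_drive': True}
print(alert_actions([14]))        # {'disable_volume': True, 'disable_drive': True}
```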

New Console ACL Directives

By default, if a Console ACL directive is not set, Bacula will assume that the ACL list is empty. If the current Bacula Director configuration uses restricted Consoles and allows restore jobs, it is mandatory to configure the new directives.

DirectoryACL

This directive is used to specify a list of directories that can be accessed by a restore session. Without this directive, a restricted console cannot restore any file. Multiple directory names may be specified by separating them with commas, and/or by specifying multiple DirectoryACL directives. For example, the directive may be specified as:

DirectoryACL = /home/bacula/, "/etc/", "/home/test/*"

With the above specification, the console can access the following files:

/etc/password

/etc/group

/home/bacula/.bashrc

/home/test/.ssh/config

/home/test/Desktop/Images/something.png

But not the following files or directories:

/etc/security/limits.conf

/home/bacula/.ssh/id_dsa.pub

/home/guest/something

/usr/bin/make

If a directory starts with a Windows pattern (ex: c:/), Bacula will automatically ignore the case when checking directory names.
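The examples above suggest a matching rule. A file is allowed when the directory that contains it matches one of the ACL entries. An entry without a wildcard must match that directory exactly, while * also matches nested subdirectories. The Python sketch below encodes that reading, which is inferred from the examples only. It is not Bacula's implementation, and dir_acl_allows is a hypothetical name.

```python
import fnmatch
import posixpath
import re

WINDOWS_DRIVE = re.compile(r"^[A-Za-z]:/")  # e.g. "c:/"


def dir_acl_allows(path: str, acl: list[str]) -> bool:
    """Return True if the directory containing `path` matches an entry of `acl`."""
    directory = posixpath.dirname(path).rstrip("/") + "/"
    for pattern in acl:
        candidate = directory
        if WINDOWS_DRIVE.match(pattern):  # Windows patterns ignore case
            candidate, pattern = candidate.lower(), pattern.lower()
        # fnmatchcase's "*" also matches "/", so "/home/test/*" covers nested directories
        if fnmatch.fnmatchcase(candidate, pattern):
            return True
    return False


acl = ["/home/bacula/", "/etc/", "/home/test/*"]
allowed = ["/etc/password", "/etc/group", "/home/bacula/.bashrc",
           "/home/test/.ssh/config", "/home/test/Desktop/Images/something.png"]
denied = ["/etc/security/limits.conf", "/home/bacula/.ssh/id_dsa.pub",
          "/home/guest/something", "/usr/bin/make"]
print(all(dir_acl_allows(p, acl) for p in allowed))  # True
print(any(dir_acl_allows(p, acl) for p in denied))   # False
```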

New Bconsole list Command Behavior

The bconsole list commands can now be used safely from a restricted bconsole session. The information displayed will respect the ACL configured for the Console session. For example, if a restricted Console has access to JobA, JobB and JobC, information about JobD will not appear in the list jobs command.

New Console ACL Directives

It is now possible to configure a restricted Console to distinguish Backup and Restore job permissions. The BackupClientACL can restrict backup jobs on a specific set of clients, while the RestoreClientACL can restrict restore jobs.

# cat /opt/bacula/etc/bacula-dir.conf

...

Console {

Name = fd-cons # Name of the FD Console

Password = yyy

...

ClientACL = localhost-fd # everything allowed

RestoreClientACL = test-fd # restore only

BackupClientACL = production-fd # backup only

}

The ClientACL directive takes precedence over the RestoreClientACL and the BackupClientACL. In the Console resource above, this means that the bconsole linked to the Console named “fd-cons” will be able to run:

backup and restore for localhost-fd

backup for production-fd

restore for test-fd

At the restore time, jobs for client localhost-fd, test-fd and production-fd will be available.

If *all* is set for ClientACL, backup and restore will be allowed for all clients, regardless of RestoreClientACL or BackupClientACL.
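The precedence rules for the fd-cons example can be written out as a small function. This Python sketch assumes that ClientACL is consulted first and that the per-action lists only matter when ClientACL does not already grant access. The function console_may_run is a hypothetical helper, not a Bacula API.

```python
def console_may_run(action: str, client: str, client_acl: list[str],
                    restore_acl: list[str], backup_acl: list[str]) -> bool:
    """Decide whether a restricted Console may run `action` ("backup" or "restore") on `client`."""
    if "*all*" in client_acl or client in client_acl:
        return True  # ClientACL grants both backup and restore and takes precedence
    if action == "backup":
        return client in backup_acl
    if action == "restore":
        return client in restore_acl
    return False


# Values copied from the fd-cons Console resource above
acls = dict(client_acl=["localhost-fd"], restore_acl=["test-fd"], backup_acl=["production-fd"])
for client in ("localhost-fd", "production-fd", "test-fd"):
    print(client, [a for a in ("backup", "restore") if console_may_run(a, client, **acls)])
# localhost-fd ['backup', 'restore']
# production-fd ['backup']
# test-fd ['restore']
```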

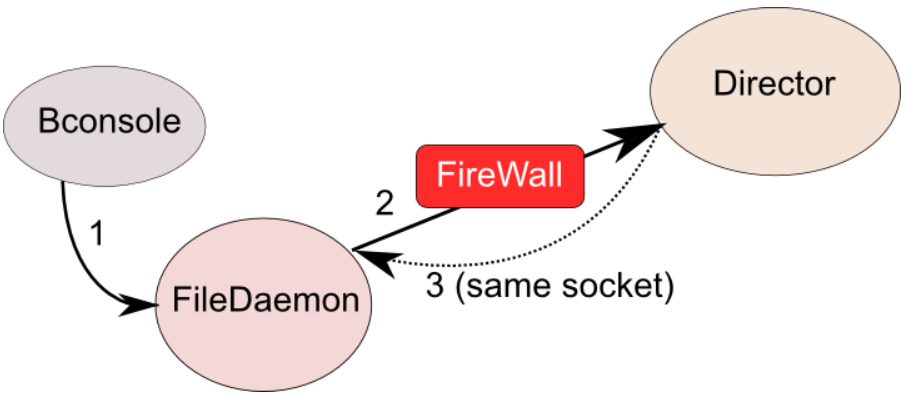

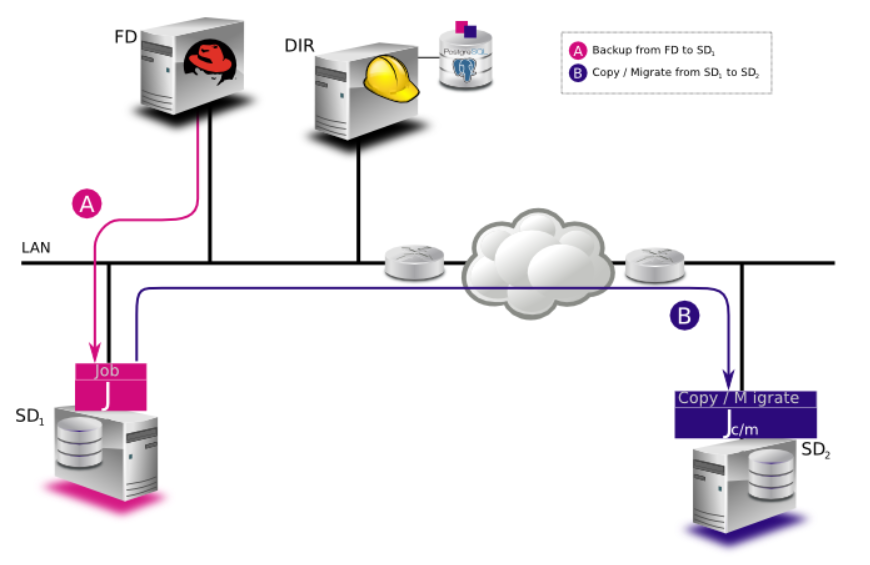

Client Initiated Backup

A console program such as the new tray-monitor or bconsole can now be configured to connect to a File Daemon. There are many new features available (see the New Tray Monitor section below), but probably the most important is the ability for users to initiate a backup of their own machine. The Director uses the connection that the FD establishes to it to run the backup itself. This has two benefits. Clients (users) can initiate backups. In addition, a File Daemon that is NATed (cannot be reached by the Director) can now be backed up without advanced tunneling techniques, provided that the File Daemon can connect to the Director.

Configuring Client Initiated Backup

In order to ensure security, there are a number of new directives that must be enabled in the new tray-monitor, the File Daemon and in the Director. A typical configuration might look like the following:

# cat /opt/bacula/etc/bacula-dir.conf

...

Console {

Name = fd-cons # Name of the FD Console

Password = yyy

# These commands are used by the tray-monitor, it is possible to restrict

CommandACL = run, restore, wait, .status, .jobs, .clients

CommandACL = .storages, .pools, .filesets, .defaults, .estimate

# Adapt for your needs

jobacl = *all*

poolacl = *all*

clientacl = *all*

storageacl = *all*

catalogacl = *all*

filesetacl = *all*

}

# cat /opt/bacula/etc/bacula-fd.conf

...

Console { # Console to connect the Director

Name = fd-cons

DIRPort = 9101

address = localhost

Password = "yyy"

}

Director {

Name = remote-cons # Name of the tray monitor/bconsole

Password = "xxx" # Password of the tray monitor/bconsole

Remote = yes # Allow this console to send commands via the Console defined above

}

# cat /opt/bacula/etc/bconsole-remote.conf

....

Director {

Name = localhost-fd

address = localhost # Specify the FD address

DIRport = 9102 # Specify the FD Port

Password = "notused"

}

Console {

Name = remote-cons # Name used in the auth process

Password = "xxx"

}

# cat ~/.bacula-tray-monitor.conf

Monitor {

Name = remote-cons

}

Client {

Name = localhost-fd

address = localhost # Specify the FD address

Port = 9102 # Specify the FD Port

Password = "xxx"

Remote = yes

}

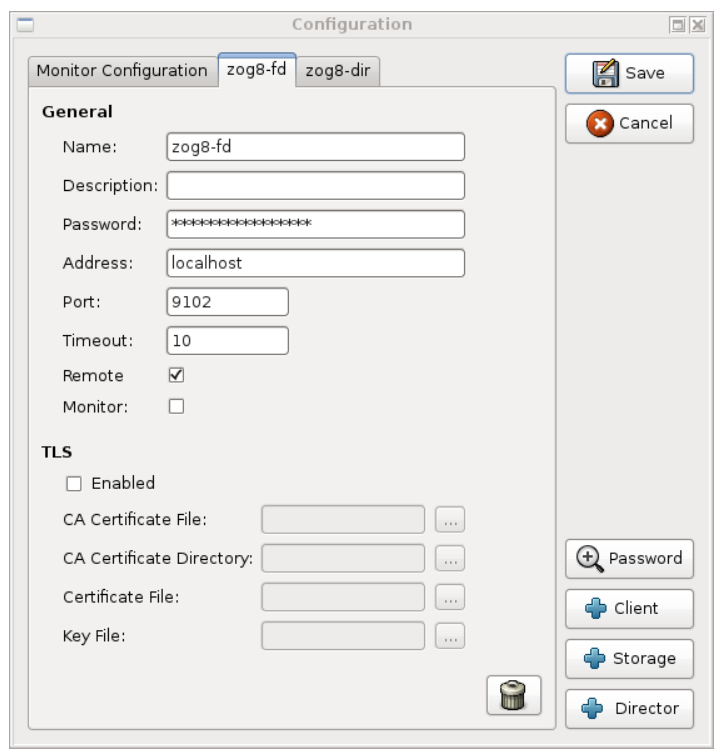

New Tray Monitor

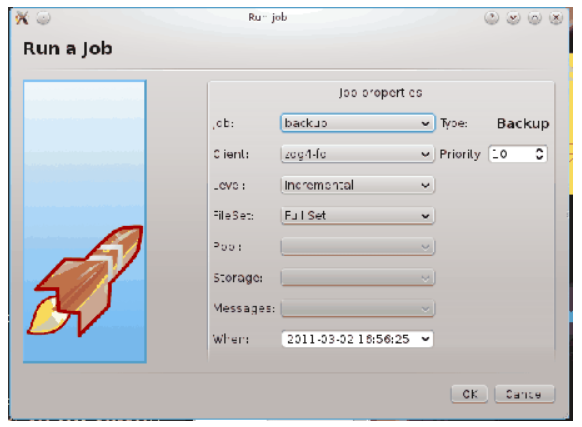

A new tray monitor has been added to the 9.0 release. The tray monitor offers the following features:

Director, File and Storage Daemon status page

Support for the Client Initiated Backup protocol. To use the Client Initiated Backup option from the tray monitor, the Client option Remote should be checked in the configuration.

Wizard to run new job

Display an estimation of the number of files and the size of the next backup job

Ability to configure the tray monitor configuration file directly from the GUI

Ability to monitor a component and adapt the tray monitor task bar icon if jobs are running.

TLS Support

Better network connection handling

Default configuration file is stored under $HOME/.bacula-tray-monitor.conf

Ability to schedule jobs

Available on Linux and Windows platforms

Schedule Jobs via the Tray Monitor

The Tray Monitor can periodically scan a specific directory, set with the Command Directory directive, and process *.bcmd files to find jobs to run.

The format of a .bcmd command file is the following:

<component name>:<run command>

<component name>:<run command>

...

<component name> = string

<run command> = string (bconsole command line)

For example:

localhost-fd: run job=backup-localhost-fd level=full

localhost-dir: run job=BackupCatalog

The command file should contain at least one command. The component specified in the first part of the command line should be defined in the tray monitor. Once the command file is detected by the tray monitor, a popup is displayed to the user and it is possible for the user to cancel the job directly.
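You can check a .bcmd file before dropping it into the command directory. The Python sketch below splits each line at the first colon and enforces the two rules stated above: the file must contain at least one command, and each component must be known to the tray monitor. The helper name and the decision to skip blank lines are assumptions.

```python
def parse_bcmd(text: str, components: set[str]) -> list[tuple[str, str]]:
    """Parse '<component name>:<run command>' lines into (component, command) pairs."""
    commands = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # assumption: blank lines are ignored
        component, sep, command = line.partition(":")  # split at the first colon only
        component, command = component.strip(), command.strip()
        if not sep or not command:
            raise ValueError(f"line {lineno}: expected <component name>:<run command>")
        if component not in components:
            raise ValueError(f"line {lineno}: {component!r} is not defined in the tray monitor")
        commands.append((component, command))
    if not commands:
        raise ValueError("the command file must contain at least one command")
    return commands


example = """localhost-fd: run job=backup-localhost-fd level=full
localhost-dir: run job=BackupCatalog
"""
for component, command in parse_bcmd(example, {"localhost-fd", "localhost-dir"}):
    print(component, "->", command)
```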

The file can be created with tools such as cron or the task scheduler on Windows. It is possible to verify the network connection at that time to avoid network errors.

#!/bin/sh

if ping -c 1 director > /dev/null 2>&1

then

echo "my-dir: run job=backup" > /path/to/commands/backup.bcmd

fi

Accurate Option for Verify Volume Data Job

Since Bacula version 8.4.1, it has been possible to configure a Verify Job with level=Data that rereads all records from a job and optionally checks the size and the checksum of all files. Starting with Bacula version 9.0, you can also use the accurate option to check catalog records at the same time. A Verify job with level=Data and accurate=yes can replace the level=VolumeToCatalog option.

For more information on how to set up a Verify Data job, see the section on Verify Volume Data jobs.

To run a Verify Job with the accurate option, you can either set the option in the Job definition or use accurate=yes on the command line.

* run job=VerifyData jobid=10 accurate=yes

FileDaemon Saved Messages Resource Destination

It is now possible to send the list of all saved files to a Messages resource with the saved message type. It is not recommended to send this flow of information to the Director and/or the catalog when the client FileSet is very large. To avoid side effects, the all keyword does not include the saved message type; the saved message type must be set explicitly.

# cat /opt/bacula/etc/bacula-fd.conf

...

Messages {

Name = Standard

director = mydirector-dir = all, !terminate, !restored, !saved

append = /opt/bacula/working/bacula-fd.log = all, saved, restored

}
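The effect of the all keyword on the saved type can be illustrated with a tiny parser for destination lists like the two above. In this Python sketch, ALL_TYPES is an illustrative subset of Bacula message types rather than the complete list, and message_types is a hypothetical helper. The one behavior it is meant to show is that all does not select saved unless saved is named.

```python
# Illustrative subset of message types that "all" selects; "saved" is deliberately absent.
ALL_TYPES = frozenset({
    "info", "warning", "error", "fatal", "terminate", "notsaved",
    "skipped", "mount", "restored", "security", "alert", "volmgmt",
})
EXPLICIT_ONLY = frozenset({"saved"})  # only selected when named


def message_types(spec: str) -> set[str]:
    """Resolve a destination list such as 'all, !terminate, saved' into a set of types."""
    selected: set[str] = set()
    for item in (part.strip() for part in spec.split(",")):
        if item == "all":
            selected |= ALL_TYPES
        elif item.startswith("!"):
            selected.discard(item[1:])
        elif item in ALL_TYPES or item in EXPLICIT_ONLY:
            selected.add(item)
        else:
            raise ValueError(f"unknown message type: {item!r}")
    return selected


director = message_types("all, !terminate, !restored, !saved")
logfile = message_types("all, saved, restored")
print("saved" in director, "saved" in logfile)  # False True
```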

Minor Enhancements

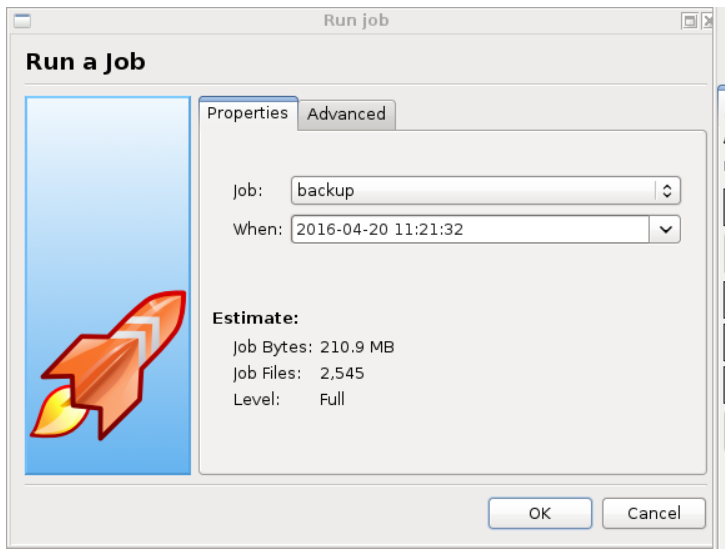

New Bconsole “.estimate” Command

The new .estimate command can be used to get statistics about a job to run. The command uses the database to approximate the size and the number of files of the next job. On a PostgreSQL database, the command uses regression slope to compute values. On MySQL, where these statistical functions are not available, the command uses a simple average estimation. The correlation number is given for each value.

*.estimate job=backup

level=I

nbjob=0

corrbytes=0

jobbytes=0

corrfiles=0

jobfiles=0

duration=0

job=backup

*.estimate job=backup level=F

level=F

nbjob=1

corrbytes=0

jobbytes=210937774

corrfiles=0

jobfiles=2545

duration=0

job=backup

Traceback and Lockdump

After the reception of a signal, traceback and lockdump information are now stored in the same file.

Bconsole list jobs command options

The list jobs bconsole command now accepts new command line options:

joberrors Display jobs with JobErrors

jobstatus=T Display jobs with the specified status code

client=cli Display jobs for a specified client

order=asc/desc Change the sort order of the job list. The jobs are sorted by start time and JobId, in ascending (asc) or descending (desc, the default) order.
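Taken together, these options act like a filter followed by a sort. This Python sketch works on hypothetical in-memory JobRecord objects rather than on the catalog. It assumes joberrors selects jobs whose JobErrors count is greater than zero.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    """Hypothetical stand-in for a catalog Job row."""
    jobid: int
    client: str
    jobstatus: str
    joberrors: int
    starttime: str  # ISO 8601 text, so string order is chronological


def list_jobs(jobs, joberrors=False, jobstatus=None, client=None, order="desc"):
    """Filter like the new options, then sort by (start time, JobId)."""
    if order not in ("asc", "desc"):
        raise ValueError("order must be 'asc' or 'desc'")
    selected = [
        job for job in jobs
        if (not joberrors or job.joberrors > 0)
        and (jobstatus is None or job.jobstatus == jobstatus)
        and (client is None or job.client == client)
    ]
    return sorted(selected, key=lambda job: (job.starttime, job.jobid),
                  reverse=(order == "desc"))


jobs = [
    JobRecord(1, "a-fd", "T", 0, "2024-01-01T01:00:00"),
    JobRecord(2, "b-fd", "E", 3, "2024-01-02T01:00:00"),
    JobRecord(3, "a-fd", "T", 1, "2024-01-03T01:00:00"),
]
print([job.jobid for job in list_jobs(jobs)])                              # [3, 2, 1]
print([job.jobid for job in list_jobs(jobs, joberrors=True)])              # [3, 2]
print([job.jobid for job in list_jobs(jobs, client="a-fd", order="asc")])  # [1, 3]
```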

New Bconsole “Tee All” Command

The @tall command allows logging all input/output from a console session.

*@tall /tmp/log

*st dir

...

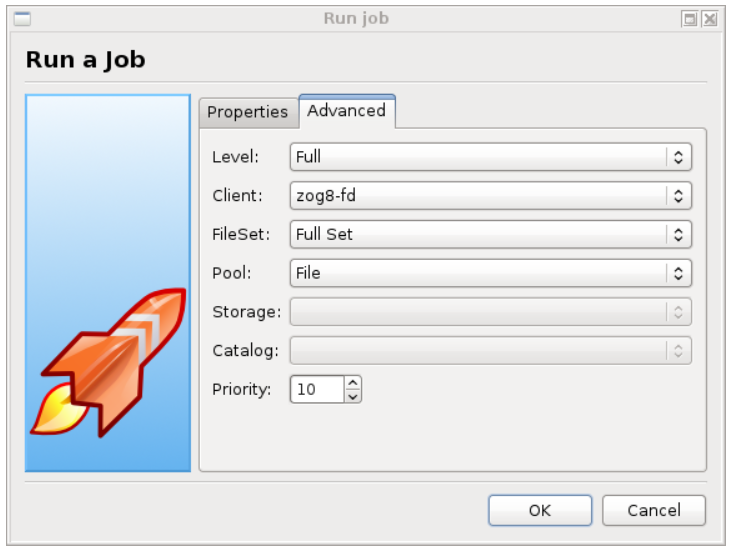

New Job Edit Codes %I

In various places such as RunScripts, you now have access to %I to get the JobId of the copy or migration job started by a migrate job.

Job {

Name = Migrate-Job

Type = Migrate

...

RunAfter = "echo New JobId is %I"

}
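Edit codes such as %I are plain text substitutions performed before the command runs. The Python sketch below illustrates the idea. It assumes %% stands for a literal percent sign and leaves unknown codes untouched, and the JobId value 1234 is made up for the example. Bacula performs this expansion internally in C, so this is not its actual code.

```python
import re


def expand_edit_codes(template: str, codes: dict[str, str]) -> str:
    """Replace %X edit codes using `codes`; '%%' yields a literal '%'."""
    def substitute(match: re.Match) -> str:
        code = match.group(1)
        if code == "%":
            return "%"
        return codes.get(code, match.group(0))  # leave unknown codes untouched
    return re.sub(r"%(.)", substitute, template)


print(expand_edit_codes("echo New JobId is %I", {"I": "1234"}))  # echo New JobId is 1234
```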

.api version 2

In Bacula version 9.0 and later, we introduced a new .api version to help external tools parse various Bacula bconsole outputs.

The api_opts option can use the following arguments:

C Clear current options

tn Use a specific time format (1 ISO format, 2 Unix Timestamp, 3 Default Bacula time format)

sn Use a specific separator between items (new line by default).

Sn Use a specific separator between objects (new line by default).

o Convert all keywords to lowercase and convert all non isalpha characters to _

.api 2 api_opts=t1s43S35

.status dir running

==================================

jobid=10

job=AJob

...
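The option string in the example packs several settings together. The Python sketch below decodes it. It assumes that the number after s and S is the decimal character code of the separator, so s43 is + and S35 is #. That is our reading of the example rather than something stated here. The functions parse_api_opts and normalize_key are hypothetical helpers for a client-side tool.

```python
import re

TIME_FORMATS = {1: "iso", 2: "unix", 3: "bacula"}  # t1, t2, t3 as listed above


def parse_api_opts(opts: str) -> dict:
    """Decode an api_opts string such as 't1s43S35' into a settings dict."""
    settings: dict = {}
    for letter, digits in re.findall(r"([A-Za-z])(\d*)", opts):
        if letter == "C":
            settings.clear()  # clear current options
        elif letter == "o":
            settings["normalize_keys"] = True
        elif letter == "t" and digits:
            settings["time_format"] = TIME_FORMATS[int(digits)]
        elif letter == "s" and digits:
            settings["item_separator"] = chr(int(digits))  # assumption: decimal char code
        elif letter == "S" and digits:
            settings["object_separator"] = chr(int(digits))
        else:
            raise ValueError(f"unsupported api_opts item: {letter}{digits}")
    return settings


def normalize_key(key: str) -> str:
    """What the 'o' option describes: lowercase, and non-alphabetic characters become '_'."""
    return "".join(ch if ch.isalpha() else "_" for ch in key.lower())


print(parse_api_opts("t1s43S35"))
# {'time_format': 'iso', 'item_separator': '+', 'object_separator': '#'}
print(normalize_key("Job Bytes"))  # job_bytes
```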

New Debug Options

In Bacula version 9.0 and later, we introduced a new options parameter for the setdebug bconsole command.

The following arguments to the new option parameter are available to control debug functions.

0 Clear debug flags

i Turn off, ignore bwrite() errors on restore on File Daemon

d Turn off decomp of BackupRead() streams on File Daemon

t Turn on timestamps in traces

T Turn off timestamps in traces

c Truncate trace file if trace file is activated

l Turn on recording events on P() and V()

p Turn on the display of the event ring when doing a bactrace

The following command will enable debugging for the File Daemon, truncate an existing trace file, and turn on timestamps when writing to the trace file.

* setdebug level=10 trace=1 options=ct fd

It is now possible to use a class of debug messages called tags to control the debug output of Bacula daemons.

all Display all debug messages

bvfs Display BVFS debug messages

sql Display SQL related debug messages

memory Display memory and poolmem allocation messages

scheduler Display scheduler related debug messages

* setdebug level=10 tags=bvfs,sql,memory

* setdebug level=10 tags=!bvfs

# bacula-dir -t -d 200,bvfs,sql

The tags option is composed of a list of tags. Tags are separated by , or + or - or !. To disable a specific tag, use - or ! in front of the tag. Note that more tags are planned for future versions.
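The separator rules for tags can be captured in a short parser. This Python sketch splits a tags list into enabled and disabled sets, treating - or ! in front of a tag as "disable". The function parse_debug_tags is a hypothetical helper, not the daemons' own parser.

```python
import re


def parse_debug_tags(spec: str) -> tuple[set[str], set[str]]:
    """Split a tags list into (enabled, disabled); '-' or '!' before a tag disables it."""
    enabled: set[str] = set()
    disabled: set[str] = set()
    for prefix, tag in re.findall(r"([,+!-]?)([A-Za-z]+)", spec):
        if prefix in ("-", "!"):
            disabled.add(tag)
            enabled.discard(tag)
        else:  # no prefix, ',' or '+'
            enabled.add(tag)
            disabled.discard(tag)
    return enabled, disabled


for spec in ("bvfs,sql,memory", "!bvfs", "all-sql"):
    on, off = parse_debug_tags(spec)
    print(spec, sorted(on), sorted(off))
# bvfs,sql,memory ['bvfs', 'memory', 'sql'] []
# !bvfs [] ['bvfs']
# all-sql ['all'] ['sql']
```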

Communication Line Compression

Bacula version 9.0.0 and later includes communication line compression, which is turned on by default. If the two Bacula components (Dir, FD, SD, bconsole) are both version 6.6.0 or greater, communication line compression will be enabled. If for some reason you do not want communication line compression, you may disable it with the following directive:

Comm Compression = no

This directive can appear in the following resources:

bacula-dir.conf: Director resource

bacula-fd.conf: Client (or FileDaemon) resource

bacula-sd.conf: Storage resource

bconsole.conf: Console resource

bat.conf: Console resource

In many cases, the volume of data transmitted across the communications line can be reduced by a factor of three when this directive is enabled (the default). When the compression is not effective, Bacula turns it off on a record-by-record basis.

If you are backing up data that is already compressed the comm line compression will not be effective, and you are likely to end up with an average compression ratio that is very small. In this case, Bacula reports None in the Job report.
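The record-by-record fallback described above can be sketched as follows. Python's zlib is only a stand-in codec here, since this section does not say which compression algorithm Bacula uses on the wire. The functions pack_record and unpack_record are hypothetical names. Each record carries a flag saying whether it was compressed, so incompressible records are sent unchanged.

```python
import os
import zlib


def pack_record(record: bytes) -> tuple[bool, bytes]:
    """Compress one record, but keep it raw when compression would not make it smaller."""
    compressed = zlib.compress(record, 1)  # zlib is only a stand-in codec here
    if len(compressed) < len(record):
        return True, compressed
    return False, record


def unpack_record(is_compressed: bool, payload: bytes) -> bytes:
    """Reverse pack_record using the per-record flag."""
    return zlib.decompress(payload) if is_compressed else payload


text_record = b"Bacula backup record " * 200  # repetitive data compresses well
random_record = os.urandom(4096)  # behaves like already-compressed data
for record in (text_record, random_record):
    flag, payload = pack_record(record)
    assert unpack_record(flag, payload) == record
    print(f"compressed={flag} {len(record)} -> {len(payload)} bytes")
```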

Deduplication Optimized Volumes

This version of Bacula includes a new alternative (or additional) volume format that optimizes the placement of files so that an underlying deduplicating filesystem such as ZFS can optimally deduplicate the backup data that is written by Bacula. These are called Deduplication Optimized Volumes or Aligned Volumes for short. The details of how to use this feature and its considerations are in the Deduplication Optimized Volumes chapter.

This feature is available if you have Bacula Community produced binaries and the Aligned Volumes plugin.

baculabackupreport

I have added a new script called baculabackupreport to the scripts directory. This script was written by Bill Arlofski. It prints a summary of the backups that ran during the number of hours specified on the command line. You need to edit the first few lines of the file to ensure that your email address and the database type you are using are correct (the default is PostgreSQL). Once you do that, you can run it manually with:

/opt/bacula/scripts/baculabackupreport 24

I have put the above line in my scripts/delete_catalog_backup script so that it will be mailed to me nightly.

New Message Identification Format

We are starting to add unique message identifiers to each message (other than debug and the Job report) that Bacula prints. At the current time only two files in the Storage Daemon have these message identifiers and over time with subsequent releases we will modify all messages.

The message identifier will be kept unique for each message, and once assigned to a message it will not change even if the text of the message changes. This means that the message identifier will be the same no matter what language the text is displayed in and, more importantly, it will allow us to produce listings of the messages with, in some cases, additional explanations or instructions on how to correct the problem. All this will take several years, since it is a lot of work and requires some new programs, not yet written, to manage these message identifiers.

The format of the message identifier is:

[AAnnnn]

where each A is an uppercase letter and nnnn is a four-digit number. The first letter indicates the software component (daemon), the second letter indicates the severity, and the number is unique for a given component and severity.

For example:

[SF0001]

The first character, which represents the component, is currently one of the following:

S Storage daemon

D Director

F File daemon

The second character, which represents the severity or level, can be:

A Abort

F Fatal

E Error

W Warning

S Security

I Info

D Debug

O OK (i.e. operation completed normally)

So in the example above, [SF0001] is identified as a message id by the brackets and by its position at the beginning of the message, and it indicates a fatal error generated by the Storage daemon.
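A small Python sketch can decode such identifiers using only the letters listed above. It assumes the identifier appears at the very start of the message text (not after a log timestamp or daemon name) and passes unknown letters through unchanged, since more letters may be added later; parse_message_id and MessageId are hypothetical names.

```python
import re
from typing import NamedTuple, Optional

COMPONENTS = {"S": "Storage daemon", "D": "Director", "F": "File daemon"}
SEVERITIES = {
    "A": "Abort", "F": "Fatal", "E": "Error", "W": "Warning",
    "S": "Security", "I": "Info", "D": "Debug", "O": "OK",
}

# [AAnnnn] at the beginning of the message: two uppercase letters, four digits.
MSG_ID = re.compile(r"^\[([A-Z])([A-Z])(\d{4})\]")


class MessageId(NamedTuple):
    component: str
    severity: str
    number: int


def parse_message_id(message: str) -> Optional[MessageId]:
    """Return the decoded identifier, or None if the message does not start with one."""
    m = MSG_ID.match(message)
    if m is None:
        return None
    comp, sev, num = m.groups()
    # Unknown letters are kept as-is rather than rejected.
    return MessageId(COMPONENTS.get(comp, comp), SEVERITIES.get(sev, sev), int(num))
```

With this sketch, "[SF0001] ..." decodes to the Storage daemon, severity Fatal, number 1.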

As mentioned above it will take some time to implement these message ids everywhere, and over time we may add more component letters and more severity levels as needed.

New Features in 7.4.3

RunScripts

There are two new RunScript shortcut directives implemented in the Director. They are:

Job {

...

ConsoleRunBeforeJob = "console-command"

...

}

Job {

...

ConsoleRunAfterJob = "console-command"

...

}

As with other RunScript commands, you may have multiple copies of either the ConsoleRunBeforeJob or the ConsoleRunAfterJob in the same Job resource definition.

Please note that not all console commands are permitted, and that if you run a console command that requires a response, the result is undefined (i.e. it will probably fail).

New Features in 7.4.0

Verify Volume Data

It is now possible to have a Verify Job configured with level=Data to reread all records from a job and optionally check the size and the checksum of all files.

# Verify Job definition

Job {

Name = VerifyData

Level = Data

Client = 127.0.0.1-fd # Use local file daemon

FileSet = Dummy # Will be adapted during the job

Storage = File # Should be the right one

Messages = Standard

Pool = Default

}

# Backup Job definition

Job {

Name = MyBackupJob

Type = Backup

Client = windows1

FileSet = MyFileSet

Pool = 1Month

Storage = File

}

FileSet {

Name = MyFileSet

Include {

Options {

Verify = s5

Signature = MD5

}

File = /

}

}

To run the Verify job, you can use the "jobid" parameter of the "run" command.

*run job=VerifyData jobid=10

Run Verify Job

JobName: VerifyData

Level: Data

Client: 127.0.0.1-fd

FileSet: Dummy

Pool: Default (From Job resource)

Storage: File (From Job resource)

Verify Job: MyBackupJob.2015-11-11_09.41.55_03

Verify List: /opt/bacula/working/working/VerifyVol.bsr

When: 2015-11-11 09:47:38

Priority: 10

OK to run? (yes/mod/no): yes

Job queued. JobId=14

...

11-Nov 09:46 my-dir JobId 13: Bacula 7.4.0 (13Nov15):

Build OS: x86_64-unknown-linux-gnu archlinux

JobId: 14

Job: VerifyData.2015-11-11_09.46.29_03

FileSet: MyFileSet

Verify Level: Data

Client: 127.0.0.1-fd

Verify JobId: 10

Verify Job:

Start time: 11-Nov-2015 09:46:31

End time: 11-Nov-2015 09:46:32

Files Expected: 1,116

Files Examined: 1,116

Non-fatal FD errors: 0

SD Errors: 0

FD termination status: Verify differences

SD termination status: OK

Termination: Verify Differences

The current Verify Data implementation requires specifying the correct Storage resource in the Verify job. The Storage resource can be changed on the bconsole command line or through the mod option of the run menu.
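The Verify = s5 option in the FileSet above asks for the size (s) and the MD5 signature (5) of each file to be compared. A minimal Python sketch of that per-file check over the data read back from the Volume follows. It assumes the records for one file are available as byte chunks and that the expected MD5 from the Catalog is a hex string, which simplifies how Bacula actually stores signatures; verify_data is a hypothetical name.

```python
import hashlib
from typing import Iterable, List


def verify_data(chunks: Iterable[bytes], expected_size: int,
                expected_md5_hex: str) -> List[str]:
    """Recompute size and MD5 over one file's data records and report differences."""
    h = hashlib.md5()
    size = 0
    for chunk in chunks:
        size += len(chunk)
        h.update(chunk)
    differences = []
    if size != expected_size:
        differences.append(f"size differs: expected {expected_size}, read {size}")
    digest = h.hexdigest()
    if digest != expected_md5_hex:
        differences.append(f"MD5 differs: expected {expected_md5_hex}, read {digest}")
    return differences
```

An empty list means the file matched; any entry would count toward a "Verify differences" termination status like the one in the report above.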

Bconsole "list jobs" command options

The list jobs bconsole command now accepts new command line options:

joberrors Display jobs with JobErrors

jobstatus=T Display jobs with the specified status code

client=cli Display jobs for a specified client

order=asc/desc Change the sort order of the job list. Jobs are sorted by start time and JobId, in either ascending (asc) or descending (desc, the default) order.
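The semantics of these options can be sketched in Python over a hypothetical in-memory list of jobs. The Job class and list_jobs function are illustrative only (bconsole actually queries the Catalog), and this sketch assumes the filters are combined with AND. With order=desc, the default, the most recent job comes first.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Job:
    jobid: int
    client: str
    jobstatus: str   # single-letter status code, e.g. "T" for terminated normally
    joberrors: int
    starttime: datetime


def list_jobs(jobs: List[Job], joberrors: bool = False,
              jobstatus: Optional[str] = None, client: Optional[str] = None,
              order: str = "desc") -> List[Job]:
    """Filter jobs like the list jobs options, then sort by (start time, JobId)."""
    selected = [
        j for j in jobs
        if (not joberrors or j.joberrors > 0)
        and (jobstatus is None or j.jobstatus == jobstatus)
        and (client is None or j.client == client)
    ]
    return sorted(selected, key=lambda j: (j.starttime, j.jobid),
                  reverse=(order == "desc"))
```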

Minor Enhancements

New Bconsole “Tee All” Command

The "@tall" command logs all input and output of a console session to a file.

*@tall /tmp/log

*st dir

...

Windows Encrypted File System (EFS) Support

The Bacula Enterprise Windows File Daemon for the community version 7.4.0 now automatically supports files and directories that are encrypted on a Windows filesystem.

SSL Connections to MySQL

There are five new Directives for the Catalog resource in the bacula-dir.conf file that you can use to encrypt the communications between Bacula and MySQL for additional security.

- dbsslkey

takes a string variable that specifies the filename of an SSL key file.

- dbsslcert

takes a string variable that specifies the filename of an SSL certificate file.

- dbsslca

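takes a string variable that specifies the filename of an SSL CA (certificate authority) certificate.

- dbsslcapath

takes a string variable that specifies the path to a directory containing SSL CA certificates.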

- dbsslcipher

takes a string variable that specifies the cipher to be used.

Max Virtual Full Interval

This is a new Job resource directive that specifies the maximum time, in seconds, between Virtual Full jobs. It is much like the Max Full Interval directive, but applies to Virtual Full jobs rather than Full jobs.
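A minimal Python sketch of the decision this directive drives, by analogy with Max Full Interval, is shown below. Several details are assumptions rather than documented behavior: a value of 0 is treated as "not set", a job with no earlier Virtual Full is upgraded, and the boundary uses "at least the interval"; needs_virtual_full is a hypothetical name.

```python
from datetime import datetime
from typing import Optional


def needs_virtual_full(last_virtual_full: Optional[datetime],
                       max_interval_seconds: int,
                       now: datetime) -> bool:
    """Return True when the job should run as a Virtual Full (sketch)."""
    if max_interval_seconds <= 0:
        return False  # assumption: 0 means the directive is not set
    if last_virtual_full is None:
        return True   # assumption: no earlier Virtual Full exists
    elapsed = (now - last_virtual_full).total_seconds()
    return elapsed >= max_interval_seconds
```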

New List Volumes Output

The list and llist commands have been modified so that when listing Volumes a new pseudo field expiresin will be printed. This field is the number of seconds in which the retention period will expire. If the retention period has already expired the value will be zero. Any non-zero value means that the retention period is still in effect.

An example, with many columns removed for display purposes:

*list volumes

Pool: Default

+----+---------------+-----------+---------+-------------+-----------+
| id | volumename    | volstatus | enabled | volbytes    | expiresin |
+----+---------------+-----------+---------+-------------+-----------+
|  1 | TestVolume001 | Full      |       1 | 249,940,696 |         0 |
|  2 | TestVolume002 | Full      |       1 | 249,961,704 |         1 |
|  3 | TestVolume003 | Full      |       1 | 249,961,704 |         2 |
|  4 | TestVolume004 | Append    |       1 | 127,367,896 |         3 |
+----+---------------+-----------+---------+-------------+-----------+
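A minimal Python sketch of how a value like expiresin can be derived follows. It assumes the value is computed from the Volume's LastWritten time plus its Volume Retention period; the exact Catalog query is not shown here, and expires_in is a hypothetical name. Negative results are clamped to zero, matching the behavior described above.

```python
from datetime import datetime, timedelta


def expires_in(last_written: datetime, vol_retention: timedelta,
               now: datetime) -> int:
    """Seconds until the retention period expires; 0 once it has expired."""
    remaining = (last_written + vol_retention - now).total_seconds()
    return max(0, int(remaining))
```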

New Features in 7.2.0

New Job Edit Codes %E %R

In various places such as RunScripts, you now have access to %E to get the number of non-fatal errors for the current Job, and to %R to get the number of bytes read from disk or from the network during a job.
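As a hedged illustration of what the substitution does, this Python sketch expands single-letter edit codes in a command string. expand_edit_codes is a hypothetical helper, the values for E and R are supplied by the caller, treating %% as a literal percent sign is an assumption about the escape rule, and notify.sh is a made-up script name; this is not Bacula's implementation.

```python
import re


def expand_edit_codes(command: str, codes: dict) -> str:
    """Replace %X edit codes with values; unknown codes are left untouched."""
    def repl(m: "re.Match") -> str:
        key = m.group(1)
        if key == "%":
            return "%"  # assumption: %% produces a literal percent sign
        return str(codes[key]) if key in codes else m.group(0)

    return re.sub(r"%(.)", repl, command)
```

For instance, with E = 0 and R = 123456, "notify.sh %E %R" becomes "notify.sh 0 123456".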

Enable/Disable commands

The bconsole enable and disable commands have been extended from enabling/disabling Jobs to include Clients, Schedules, and Storage devices. Examples:

disable Job=NightlyBackup Client=Windows-fd

will disable the Job named NightlyBackup as well as the client named Windows-fd.

disable Storage=LTO-changer Drive=1

will disable drive 1 in the autochanger named LTO-changer. Since drive numbering starts at 0, this is the second drive.

Please note that doing a reload command will set any values changed by the enable/disable commands back to the values in the bacula-dir.conf file.

The Client and Schedule resources in the bacula-dir.conf file now permit the directive Enable = yes or Enable = no.

Snapshot Management

Bacula 7.2 is now able to handle Snapshots on Linux/Unix systems. Snapshots can be automatically created and used to back up files. It is also possible to manage Snapshots from Bacula’s bconsole tool through a single interface.

Snapshot Backends

The following Snapshot backends are supported with Bacula Enterprise 8.2:

BTRFS

ZFS

LVM

By default, Snapshots are mounted (or directly available) under .snapshots directory on the root filesystem (On ZFS, the default is .zfs/snapshots).

The Snapshot backend program is called bsnapshot and is available in the bacula-enterprise-snapshot package. In order to use the Snapshot Management feature, the package must be installed on the Client.

The bsnapshot program can be configured using /opt/bacula/etc/bsnapshot.conf file. The following parameters can be adjusted in the configuration file:

trace=<file> Specify a trace file

debug=<num> Specify a debug level

sudo=<yes/no> Use sudo to run commands

disabled=<yes/no> Disable snapshot support

retry=<num> Configure the number of retries for some operations

snapshot_dir=<dirname> Use a custom name for the Snapshot directory. (.SNAPSHOT, .snapdir, etc…)

lvm_snapshot_size=<lvpath:size> Specify a custom snapshot size for a given LVM volume

# cat /opt/bacula/etc/bsnapshot.conf

trace=/tmp/snap.log

debug=10

lvm_snapshot_size=/dev/ubuntu-vg/root:5%
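The file format above is simple key=value lines. A minimal Python sketch of reading it, for example from a monitoring script, is shown below. Skipping blank lines and lines starting with # is an assumption, and so is splitting lvm_snapshot_size at its last colon into LV path and size; parse_bsnapshot_conf is a hypothetical name, not part of bsnapshot.

```python
from typing import Dict, Tuple


def parse_bsnapshot_conf(text: str) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Return (settings, lvm_sizes) from bsnapshot.conf-style key=value lines."""
    settings: Dict[str, str] = {}
    lvm_sizes: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # assumption: blank lines and # comments are ignored
        key, sep, value = line.partition("=")
        if not sep:
            continue  # malformed line: skipped in this sketch
        key, value = key.strip(), value.strip()
        if key == "lvm_snapshot_size":
            # lvpath:size, split at the last colon (e.g. /dev/ubuntu-vg/root:5%)
            lvpath, _, size = value.rpartition(":")
            lvm_sizes[lvpath] = size
        else:
            settings[key] = value
    return settings, lvm_sizes
```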

Application Quiescing

When using Snapshots, it is very important to quiesce applications that are running on the system. The simplest way to quiesce an application is to stop it. Taking the Snapshot is usually very fast, so the downtime is only a couple of seconds. If downtime is not possible and/or the application provides a way to quiesce, a more advanced script can be used. An example is described in SnapRunScriptExample.
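When stopping an application around a Snapshot, the ordering matters more than the individual commands. In this minimal Python sketch, stop_app, take_snapshot and start_app are hypothetical callables (for example wrappers around the application's own service commands). The one design choice worth copying is that the application is restarted in a finally block, so a failed Snapshot does not leave it stopped.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def snapshot_with_quiesce(stop_app: Callable[[], None],
                          take_snapshot: Callable[[], T],
                          start_app: Callable[[], None]) -> T:
    """Stop the application, take the Snapshot, and always restart the application."""
    stop_app()
    try:
        return take_snapshot()
    finally:
        # Runs even if take_snapshot() raises, so downtime is limited to the snapshot itself.
        start_app()
```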

New Director Directives

The use of the Snapshot Engine on the FileDaemon is determined by the new Enable Snapshot FileSet directive. The default is no.

FileSet {

Name = LinuxHome

Enable Snapshot = yes

Include {

Options = { Compression = LZO }

File = /home

}

}