New Features in Bacula Enterprise

This chapter presents the new features that have been added to the previous versions of Bacula Enterprise. These features are only available with a subscription from Bacula Systems.

Bacula Enterprise 14

Microsoft 365 Plugin Enhancements

The Microsoft 365 Plugin has received a number of new features to complete the coverage of the most important Microsoft 365 service modules. It also gained enhancements to existing modules that improve the user experience and allow better control of the data to back up, in order to offer the highest level of privacy with respect to the data being backed up.

More details on any of the following subsections can be found in the Microsoft 365 Plugin documentation.

Teams Module

The Teams module adds support for backing up and restoring Microsoft Teams, including:

Team Entity

Team Settings

Team Members and Roles

Team Installed Apps

Team Channels

Team Channel Tabs

Team Channel Messages and Replies

Team Channel Attachments

Team Channel Hosted Contents

As in any other M365 Plugin module, it is possible to restore the data locally to the File Daemon or natively into the M365 service as the original team or as a new one.

To enable the Teams module in an M365 Plugin backup, include the service teams in the FileSet Plugin line, and select the team name using group directives.

FileSet {

Name = my-teams-fs

Include {

Options {

...

}

Plugin = "m365: tenant=xxy-xx-xx objectid=yyy-yyy service=teams group=MyTeam"

}

}

Chat Module

The Chat module adds support for backing up and restoring Microsoft Chats, including:

Chat entity

Chat settings

Chat installed apps

Chat tabs

Chat messages and replies

Chat hosted contents

As with any other M365 Plugin module it is possible to restore the data locally to the File Daemon or natively into the M365 service as a new Chat.

To enable the Chats module in an M365 Plugin backup, include the service chats in the fileset and select the associated entity you want to back up (user(s) and/or group(s)). If no entity is used, all chats will be included.

FileSet {

Name = my-chats-fs

Include {

Options {

...

}

Plugin = "m365: tenant=xxxy-x-xx objectid=yy-yy-yyy-yy service=chats"

}

}

Email Indexing

The email backup module has been improved in order to offer the possibility to filter and query the data stored during backups performed with it. The information is now indexed in specific tables of the catalog where details about emails and attachments are stored.

The layout of these tables is shown below (syntax shown is based on PostgreSQL):

CREATE TABLE MetaEmail

(

EmailTenant text,

EmailOwner text,

EmailId text,

EmailTime timestamp without time zone,

EmailTags text,

EmailSubject text,

EmailFolderName text,

EmailFrom text,

EmailTo text,

EmailCc text,

EmailInternetMessageId text,

EmailBodyPreview text,

EmailImportance text,

EmailConversationId text,

EmailIsRead smallint,

EmailIsDraft smallint,

EmailHasAttachment smallint,

EmailSize integer,

Plugin text,

FileIndex int,

JobId int

);

CREATE TABLE MetaAttachment

(

AttachmentTenant text,

AttachmentOwner text,

AttachmentName text,

AttachmentEmailId text,

AttachmentContentType text,

AttachmentIsInline smallint,

AttachmentSize int,

Plugin text,

FileIndex int,

JobId int

);

A collection of associated new Catalog indexes is also included.

The information in these tables can be used with regular SQL mechanisms (directly from the database engine or through bconsole SQL commands). It is also possible to use the new bconsole .jlist command with the metadata keyword, as in the examples below:

# List all emails:

.jlist metadata type=email tenant="tenantname.microsoftonline.com" owner="test@localhost"

# Sample output:

[{"jobid": 1,"fileindex": 2160,"emailtenant": "tenantname.microsoftonline.com","emailowner"...

# Get emails with attachments

.jlist metadata type=email tenant="xxx.." owner="test@localhost" hasattachment=1

# Will search in bodypreview

.jlist metadata type=email tenant="xxx..." owner="test@localhost" bodypreview=veronica

# Will search specific fields

.jlist metadata type=email tenant="xxx..." owner="tes..." from=eric to=john subject=regress bodypreview=regards

# Will search in all text fields for "eric", return the next page of 512 elements, order by time desc

.jlist metadata type=email tenant="xxx..." owner="tes..." all=eric orderby=time order=desc limit=512 offset=512

# Will search in all text fields for "eric iaculis"

.jlist metadata type=email all="eric iaculis"

# Will search in all text fields and apply filters on the time, the size, the read flag and the attachment flag

.jlist metadata type=email tenant="xxx..." owner="tes..." all="spam" isread=1 hasattachment=1 mintime="2021-09-23 00:00:00" maxtime="2022-09-23 00:00:00" minsize=100 maxsize=100000

.jlist metadata type=attachment tenant="xxx..." owner="tes..." name="cv.pdf" id=xxxxxxxxxxx minsize=100 maxsize=100000
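The two tables can also be queried with plain SQL. The following Python sketch is only an illustration under stated assumptions: it uses an in-memory SQLite database as a stand-in for the catalog, creates a reduced version of both tables (a subset of the columns listed above), and assumes that AttachmentEmailId refers to EmailId, which the layout suggests but the text does not state. The sample rows and the function name are invented.

```python
import sqlite3

# In-memory SQLite stand-in for the Bacula catalog (assumption: the real
# catalog is a server database that the M365 plugin populates during backups).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE MetaEmail (
    EmailTenant text, EmailOwner text, EmailId text, EmailSubject text,
    EmailHasAttachment smallint, EmailSize integer, FileIndex int, JobId int
);
CREATE TABLE MetaAttachment (
    AttachmentTenant text, AttachmentOwner text, AttachmentName text,
    AttachmentEmailId text, AttachmentSize int, FileIndex int, JobId int
);
""")

# Invented sample rows, for illustration only.
tenant = "tenantname.microsoftonline.com"
conn.executemany(
    "INSERT INTO MetaEmail VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
    [
        (tenant, "test@localhost", "m1", "regress results", 1, 2048, 10, 1),
        (tenant, "test@localhost", "m2", "lunch", 0, 512, 11, 1),
    ],
)
conn.execute(
    "INSERT INTO MetaAttachment VALUES (?, ?, ?, ?, ?, ?, ?)",
    (tenant, "test@localhost", "cv.pdf", "m1", 1500, 12, 1),
)


def emails_with_attachments(db, owner):
    """Return (subject, attachment name, JobId) for emails that have attachments."""
    query = """
        SELECT e.EmailSubject, a.AttachmentName, e.JobId
        FROM MetaEmail e
        JOIN MetaAttachment a ON a.AttachmentEmailId = e.EmailId  -- assumed link
        WHERE e.EmailOwner = ? AND e.EmailHasAttachment = 1
        ORDER BY e.EmailSubject
    """
    return db.execute(query, (owner,)).fetchall()


rows = emails_with_attachments(conn, "test@localhost")
print(rows)
```

The JobId and FileIndex columns returned by such a query identify the backup job and the file entry that hold the message, which is the information needed to select it for a restore.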

BWeb Management Console Wizard

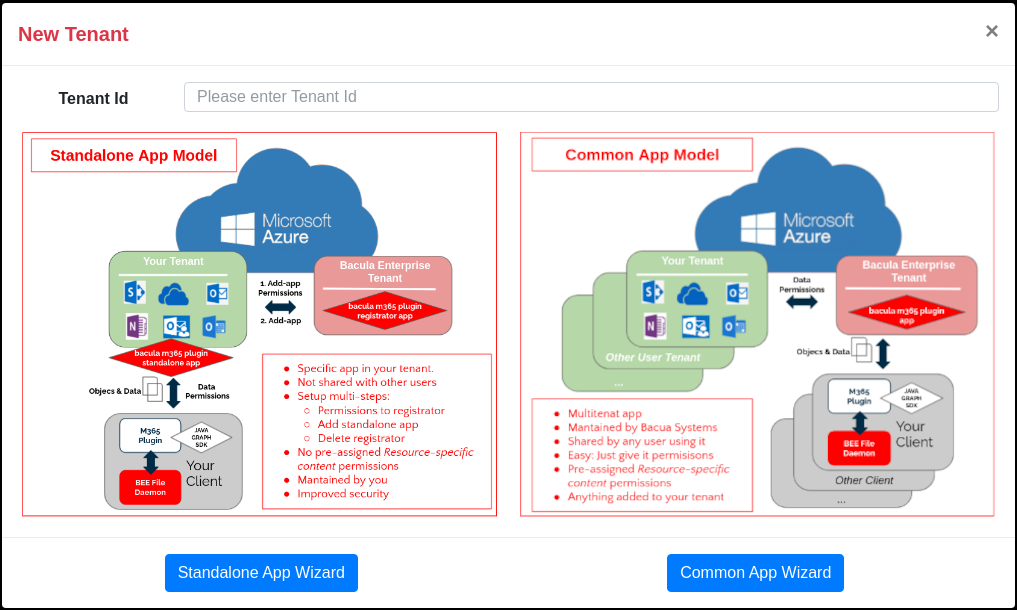

A completely new BWeb Management Dashboard is included to significantly simplify the following actions associated with Microsoft 365 Plugin backups:

Connect with a new tenant

Easily connect using either the ’common’ or the ’standalone’ mode

List and manage configured tenants in each File Daemon

List and manage logged-in users for delegated authentication features

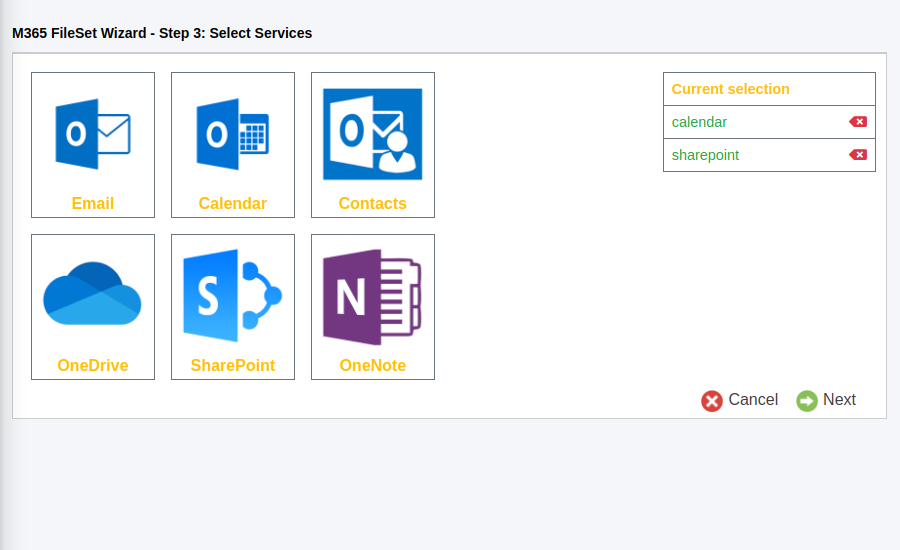

Wizard to add new Microsoft 365 Plugin fileset

Some example screenshots are provided below:

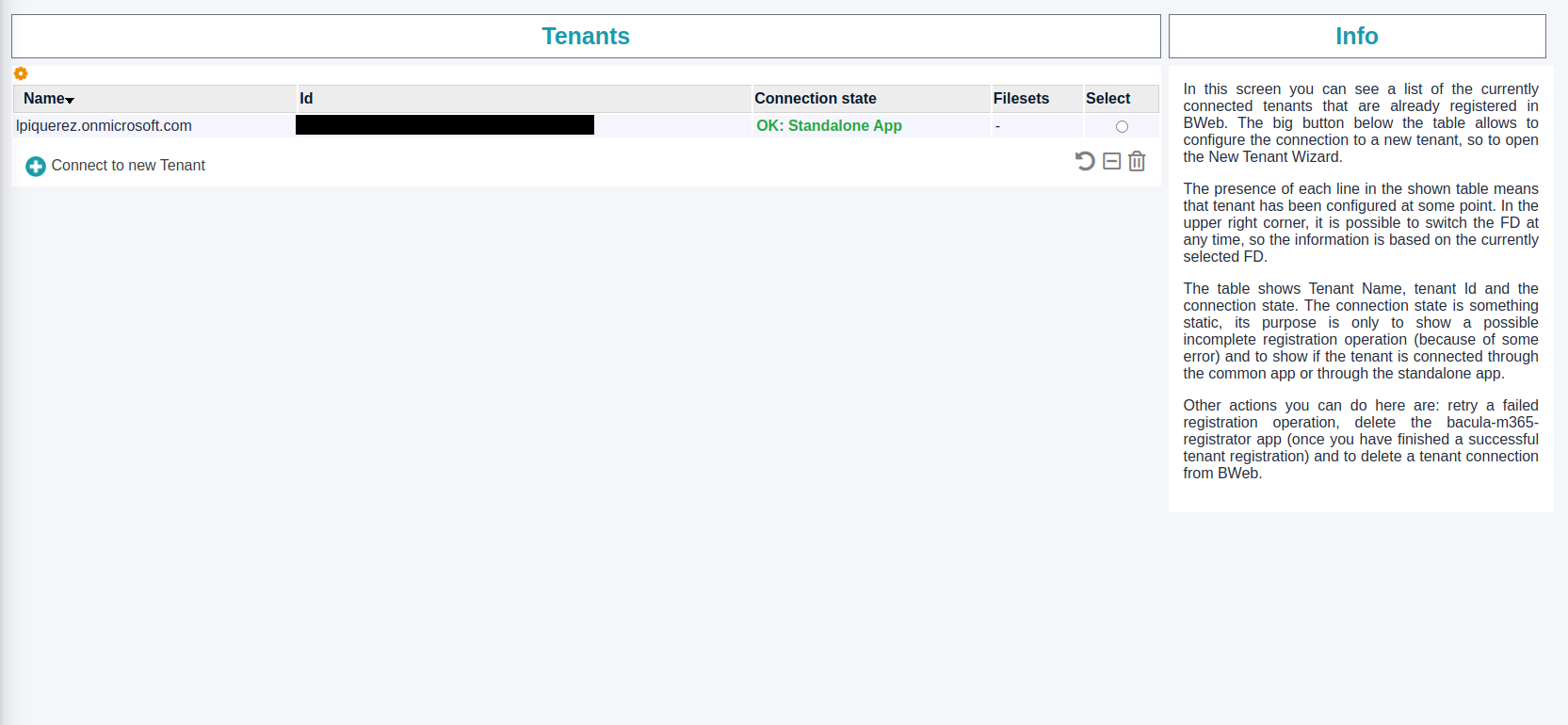

BWeb M365 Management Console: Tenant List

BWeb M365 Management Console: Model Selection

BWeb M365 Management Console: Services

Advanced Email Privacy Filters

Bacula Systems is aware of the many privacy concerns that can arise when tools like our Microsoft 365 Plugin make it possible to back up and restore data coming from large numbers of different users. The backup administrator can restore potentially private data at will. Moreover, emails are often one of the most sensitive items in terms of privacy and security to an organization.

One of many strategies this plugin offers in order to mitigate this problem is the possibility to exclude messages. This is a very powerful feature where one can use flexible expressions that make it possible to:

select a subset of messages and simply exclude them from the backup with the email_messages_exclude_expr new fileset parameter

or only from the index (from the catalog) with the email_messages_exclude_index_expr new fileset parameter

not only exclude messages but also select only a subset of email fields to be included in the protected information. Fields can be excluded from the backup with the email_fields_exclude new fileset parameter

or only from the index (from the catalog) with the email_fields_exclude_index new fileset parameter

Exclude those fields directly as a comma separated list in the email_fields_exclude parameter and also in the email_fields_exclude_index parameter.

Then, for email_messages_exclude_expr and email_messages_exclude_index_expr we need to use a valid boolean expression represented in the JavaScript language, using those fields. Some examples are provided below:

# Expression to exclude messages where subject includes the word 'private'

emailSubject.includes('private')

# Complex expression to exclude messages that are not read and are Draft or their folder name is named Private

!emailIsRead && (emailIsDraft || emailFolderName == 'Private')

# Expression to exclude messages based on the received or sent date

emailTime < Date.parse('2012-11-01')

# Expression to exclude messages using a regex based on emailFrom

/.*private.com/.test(emailFrom)
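To see which messages such expressions would match, their logic can be mirrored outside the plugin. The plugin itself evaluates JavaScript; the Python sketch below only transliterates the subject, read/draft/folder and regex examples and runs them against invented sample messages. The dictionary keys reuse the field names from the examples, while the value types and the helper name are assumptions.

```python
import re

# Invented sample messages; keys reuse the field names from the examples above.
messages = [
    {"emailSubject": "private: salary", "emailIsRead": True, "emailIsDraft": False,
     "emailFolderName": "Inbox", "emailFrom": "hr@example.com"},
    {"emailSubject": "status report", "emailIsRead": False, "emailIsDraft": True,
     "emailFolderName": "Drafts", "emailFrom": "eric@example.com"},
    {"emailSubject": "hello", "emailIsRead": True, "emailIsDraft": False,
     "emailFolderName": "Inbox", "emailFrom": "john@private.com"},
]

# Python counterparts of three of the JavaScript expressions.
predicates = {
    "subject": lambda m: "private" in m["emailSubject"],
    "unread_draft_or_private_folder": lambda m: (
        not m["emailIsRead"]
        and (m["emailIsDraft"] or m["emailFolderName"] == "Private")
    ),
    # JavaScript's regex .test() searches anywhere in the string, like re.search.
    "sender_regex": lambda m: re.search(r".*private.com", m["emailFrom"]) is not None,
}


def excluded_subjects(name):
    """Return the subjects of the sample messages the named predicate would exclude."""
    return [m["emailSubject"] for m in messages if predicates[name](m)]


for name in predicates:
    print(name, "->", excluded_subjects(name))
```

Running a candidate expression against a handful of representative messages in this way is a cheap sanity check before it is placed in a fileset.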

An expression tester command is now included as a new query command, where it’s possible to validate the behavior of different expressions against a static, predefined set of data. Check the Microsoft 365 Plugin Whitepaper in order to find more details.

Data Owner Restore Protection

A second solution to enhance the privacy of the data managed by cloud plugins like the Microsoft 365 Plugin is presented in this section.

The Data Owner Restore Protection feature is enabled at configuration time with the parameter owner_restore_protection (please check the Microsoft 365 Plugin documentation for further information). Once it is enabled, any restore operation will request the intervention of the owner of the data. The restore job will be paused and will show a message in the Job log asking to access a Microsoft 365 page and enter a security code. This same information will also be sent by email to the affected user.

If the user does not complete the operation (in 15 minutes) the restore will fail and no data will be restored. However, if the user knows of the operation and wishes to approve it, as soon as they complete the login process, the restore will resume and the data will be processed and restored to the configured destination.

TOTP Console Authentication Plugin

The TOTP (Time-based One-Time Password) Authentication Plugin is compatible with RFC 6238. Many smartphone applications are available to store the keys and compute the TOTP code.

The standard password and, possibly, TLS authentication and encryption are still used to accept an incoming console connection. Once accepted, the Console will prompt for a second level of authentication with a TOTP code generated from a shared secret key.
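For readers unfamiliar with RFC 6238, the code computation itself is short. The following Python sketch shows the generic HMAC-SHA-1 variant of the algorithm; it is not the plugin's implementation, and the 30-second time step and 6-digit default are the common RFC defaults, assumed here rather than confirmed for the Bacula plugin.

```python
import hashlib
import hmac
import struct
import time


def totp(secret, now=None, step=30, digits=6):
    """Compute an RFC 6238 TOTP code (HMAC-SHA-1 variant) for a raw secret."""
    if now is None:
        now = time.time()
    # Number of time steps since the Unix epoch, as an 8-byte big-endian counter.
    counter = int(now) // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low nibble of the last byte is the offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# Test vector from RFC 6238, Appendix B (SHA-1, 8 digits, T = 59 s).
print(totp(b"12345678901234567890", now=59, digits=8))  # 94287082
```

Because both sides derive the code from the shared secret and the current time, the clocks of the Director host and of the device running the authenticator app need to be reasonably in sync.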

To enable this feature, you need to install the bacula-enterprise-totp-dir-plugin package on your Director system, then set the PluginDirectory directive of the Director resource and configure the AuthenticationPlugin directive of a given restricted Console in the Director configuration file.

# in bacula-dir.conf

Director {

Name = myname-dir

...

Plugin Directory = /opt/bacula/plugins

}

Console {

Name = "totpconsole"

Password = "xxx"

Authentication Plugin = "totp"

}

The matching Console configuration in bconsole.conf has no extra

settings compared to a standard restricted Console.

# in bconsole.conf

Console {

Name = totpconsole

Password = "xxx" # Same as in bacula-dir.conf/Console

}

Director {

Name = mydir-dir

Address = localhost

Password = notused

}

At the first console connection, if the TLS link is correctly set up (using the shared secret key), the plugin will generate a specific random key for the console and display a QR code in the console output. The user must then scan the QR code with their smartphone using an app such as Aegis (open source) or Google Authenticator. The plugin can also be configured to send the QR code via an external program.

Note

The program qrencode (>=4.0) is used to convert the otpauth URL to a QR code.

If the program is not installed the QR code can’t be displayed.

More information can be found in Console Multi-Factor Authentication Plugins.

To use the TOTP Authentication plugin with BWeb Management Console, it is required to perform the following steps:

Create a system user named admin via the adduser command

Assign a password via the passwd command

Activate the security option and the system_authentification parameter in the BWeb Management Console / Configuration / BWeb Configuration page

Log in with the admin user and the password defined earlier

For each user that needs to be added

Access the User administration page in BWeb Management Console / Configuration / Manage Users

Add a user username with the TOTP Authentication option of the Authentication parameter

Create a TOTP authentication key on the command line with the bacula account with btotp -c -n bweb:username

The bweb: prefix is a requirement to distinguish between different login targets, namely bconsole without a prefix and BWeb with this one. The username can be freely chosen.

Tip

If the btotp command to create the secret is not run under the account the web server runs as, permissions and ownership of the generated file in the TOTP key storage directory will have to be modified:

[root@ ~]# ls -al /opt/bacula/etc/conf.d/totp/

total 8

drwx------. 2 bacula bacula 53 9. Mar 14:39 .

drwx------. 10 bacula bacula 128 9. Mar 06:55 ..

-rw-------. 1 bacula bacula 31 9. Mar 06:55 KNSWG5LSMU

[root@ ~]# /opt/bacula/bin/btotp -c -n bweb:Newuser

/opt/bacula/etc/conf.d/totp//MJ3WKYR2JZSXO33VONSXE

[root@ ~]# ls -al /opt/bacula/etc/conf.d/totp/

total 12

drwx------. 2 bacula bacula 82 10. Mar 04:58 .

drwx------. 10 bacula bacula 128 9. Mar 06:55 ..

-rw-------. 1 bacula bacula 31 9. Mar 06:55 KNSWG5LSMU

-rw-------. 1 root root 31 10. Mar 04:58 MJ3WKYR2JZSXO33VONSXE

[root@ ~]# chown bacula. /opt/bacula/etc/conf.d/totp/MJ3WKYR2JZSXO33VONSXE

[root@ ~]# ls -al /opt/bacula/etc/conf.d/totp/

total 12

drwx------. 2 bacula bacula 82 10. Mar 04:58 .

drwx------. 10 bacula bacula 128 9. Mar 06:55 ..

-rw-------. 1 bacula bacula 31 9. Mar 06:55 KNSWG5LSMU

-rw-------. 1 bacula disk 31 10. Mar 04:58 MJ3WKYR2JZSXO33VONSXE

[root@bsys-demo ~]#

For security reasons, it may be best to set up a dedicated management account with rules for sudo to be able to call the btotp program as a restricted user and have it execute with proper permissions.

Display the TOTP QR Code on the command line with the bacula account with btotp -q -n bweb:username

Note

The program qrencode (>=4.0) is used to convert the otpauth URL to a QR code.

If the program is not installed the QR code can’t be displayed.

Tip

It is possible to create additional BWeb users with administrative privileges (“Administrator” profile) and “TOTP Authentication” Password Type. Those users will be able to administer all functions of BWeb (and Bacula through it). At this point you could even disable the admin account created using the adduser command, but please note that system_authentication (enable_system_auth) needs to remain set (in the BWeb configuration) in order for the TOTP authentication to remain functional.

FileDaemon Security Enhancements

Restore and Backup Job User

New FileDaemon directives let the File Daemon control in which user’s context Backup and Restore Jobs run. The directive values can be assigned as uid:gid or username:groupname and are applied per configured Director. Backup and Restore jobs will then run as the specified user. If this directive is set for the Restore Job, it overrides the Restore user set with the ’jobuser’ and ’jobgroup’ arguments of the ’restore’ command.

# in bacula-fd.conf

Director {

Name = myname-dir

...

BackupJobUser = 1001:1001

RestoreJobUser = restoreuser:restoregroup

}

This facility requires that the running File Daemon can change its user context, and is only available on recent Linux systems with proper capabilities set up.

Allowed Backup and Restore Directories

New FileDaemon directives provide control of which client directories are allowed to be accessed for backup on a per-director basis. Directives can be specified as a comma-separated list of directories. Simple versions of the AllowedBackupDirectories and ExcludedBackupDirectories directives might look as follows:

# in bacula-fd.conf

Director {

Name = myname-dir

...

AllowedBackupDirectories = "/path/to/allowed/directory"

}

Director {

Name = my-other-dir

...

ExcludedBackupDirectories = "/path/to/excluded/directory"

}

This directive works on the FD side, and is fully independent of the include/exclude part of the Fileset defined in the Director’s config file. Nothing is backed up if none of the files defined in the Fileset is inside FD’s allowed directory.
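The effect of the two directives can be pictured as a path filter applied on the File Daemon side. The Python sketch below encodes one plausible matching rule (a file is accepted when it lies under an allowed directory and not under an excluded one). This rule, the treatment of an empty allowed list, and the function name are assumptions made for illustration; the actual File Daemon logic may differ.

```python
from pathlib import PurePosixPath


def is_backup_allowed(path, allowed=(), excluded=()):
    """Return True if path may be backed up under the assumed matching rule."""
    p = PurePosixPath(path)

    def under(directory):
        # True when p is the directory itself or lies somewhere below it.
        d = PurePosixPath(directory)
        return p == d or d in p.parents

    if any(under(d) for d in excluded):
        return False
    # An empty allowed list is treated as "no restriction" (assumption).
    return not allowed or any(under(d) for d in allowed)


allowed = ["/path/to/allowed/directory"]
print(is_backup_allowed("/path/to/allowed/directory/report.txt", allowed=allowed))
print(is_backup_allowed("/etc/passwd", allowed=allowed))
```

Comparing whole path components rather than string prefixes keeps a sibling such as /path/to/allowed/directory2 from being accepted by mistake.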

Allowed Restore Directories

This new directive controls which directories the File Daemon can use as a restore destination on a per-director basis. The directive can have a list of directories assigned. A simple version of the AllowedRestoreDirectories directive can look like this:

# in bacula-fd.conf

Director {

Name = myname-dir

...

AllowedRestoreDirectories = "/path/to/directory"

}

Allowed Script Directories

This File Daemon configuration directive controls from which directories the Director can execute client scripts and programs (e.g. using the Runscript feature or with a File Set’s ’File=’ directive). The directive can have a list of directories assigned. A simple version of the AllowedScriptDirectories directive could be:

# in bacula-fd.conf

Director {

Name = myname-dir

...

AllowedScriptDirectories = "/path/to/directory"

}

When this directive is set, the File Daemon also checks programs to be run against a set of forbidden characters.

When the following resource is defined inside the Director’s config file, Bacula won’t back up any file for the Fileset:

FileSet {

Name = "Fileset_1"

Include {

File = "\\|/path/to/binary &"

}

}

This is because of the ’&’ character, which is not allowed when the AllowedScriptDirectories directive is used on the Client side.

This is the full list of disallowed characters:

$ ! ; \ & < > ` ( )
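A command line can be checked against this list before it is put into a Runscript or a FileSet. The Python sketch below is a hypothetical pre-flight helper, not part of Bacula; it only tests for the ten characters listed above and assumes the check applies to the program string as the File Daemon receives it.

```python
# The ten characters listed above: $ ! ; \ & < > ` ( )
FORBIDDEN = set("$!;\\&<>`()")


def forbidden_in(command):
    """Return the disallowed characters found in command, in order of first appearance."""
    found = []
    for ch in command:
        if ch in FORBIDDEN and ch not in found:
            found.append(ch)
    return found


print(forbidden_in("/path/to/binary &"))          # ['&']
print(forbidden_in("/path/to/binary --verbose"))  # []
```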

To disable all commands sent by the Director, it is possible to use the following configuration in the File Daemon configuration:

AllowedScriptDirectories = none

Security Plugin

The Bacula Enterprise FileDaemon Security Plugin Framework can be used to produce security reports during Backup jobs. This version of the security plugin is compatible only with Linux/Unix systems and is delivered with a set of rules that can detect potential misconfiguration of the Director and Bacula files and directories.

To enable the plugin, you need to install the bacula-enterprise-security-plugin package on your Client and configure the Plugin Directory directive in the FileDaemon resource.

# in bacula-fd.conf

FileDaemon {

Name = myname-fd

...

Plugin Directory = /opt/bacula/plugins

...

Plugin Options = "security: interval=2days"

}

The plugin will be automatically used once a day and will create a security report available in the Catalog. If a serious security issue is detected on the server, a message will be printed in the Job log, and a security event will be created. To configure the minimum interval, the PluginOptions directive in the FileDaemon resource can be used. The security plugin has an interval parameter that can accept a time duration.

The security report produced by the plugin is accessible via the bconsole list command:

* list restoreobjects jobid=1 objecttype=security

+-------+-----------------+-----------------+------------+------------+--------------+

| jobid | restoreobjectid | objectname | pluginname | objecttype | objectlength |

+-------+-----------------+-----------------+------------+------------+--------------+

| 1 | 1 | security-report | security: | 30 | 696 |

+-------+-----------------+-----------------+------------+------------+--------------+

* list restoreobjects jobid=1 objecttype=security id=1

{"data":[{"source":"bacula-basic","version":1,"error":0,"events":[{"message":"Permissions on ..

Proxmox and QEMU Incremental Backup Plugin

The new QEMU plugin can back up QEMU hypervisors using the QMP transaction feature and dump disks with the QMP API. The QEMU plugin can be used to handle Proxmox QEMU virtual machines for Full and Incremental backup.

More information can be found in the QEMU Plugin user’s guide.

FreeSpace Storage Daemon Policy

A new Storage Group policy, FreeSpace, queries each Storage Daemon in the list for its free space and sorts the list by the value returned, so that the first item in the list is the SD with the largest amount of free space and the last one is the SD with the least. For an Autochanger with many devices pointing to the same mountpoint, the size of only one single device is taken into consideration for the FreeSpace policy.

The policy can be used in the same manner as the other ones:

Pool {

...

Storage = File1, File2, File3

StorageGroupPolicy = FreeSpace

...

}
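The ordering applied by the FreeSpace policy can be sketched in a few lines of Python. The free-space figures below are invented, the function name is hypothetical, and the way the Director actually obtains the values from each Storage Daemon is not shown.

```python
def order_by_free_space(storages):
    """Sort (name, free_bytes) pairs so the storage with the most free space comes first."""
    ranked = sorted(storages, key=lambda item: item[1], reverse=True)
    return [name for name, _free in ranked]


# Invented free-space reports, in bytes, for the three storages of the Pool above.
reported = [
    ("File1", 200 * 1024**3),
    ("File2", 750 * 1024**3),
    ("File3", 50 * 1024**3),
]
print(order_by_free_space(reported))  # ['File2', 'File1', 'File3']
```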

Antivirus Plugin

The FileDaemon Antivirus plugin provides integration between the ClamAV Antivirus daemon and Bacula Verify Jobs, allowing post-backup virus detection within Bacula Enterprise.

More information can be found in the Antivirus Plugin user’s guide.

Volume Protection

Warning

This feature is only for file-based devices.

This feature can only be used if Bacula is run as a systemd service because only then, with proper capabilities set for the daemon, is it allowed to manage the attributes of Volume files.

For File-based volumes, Bacula will set the Append Only attribute during the first backup job that uses the new volume. This prevents Volumes from losing data by being overwritten.

The Append Only file attribute is cleared when the volume is being relabeled.

Bacula is now also able to set the Immutable file attribute on a file volume which is marked as Full.

When a volume is Full and has the Immutable flag set, it cannot be relabeled and reused until the expiration period elapses. This helps to protect volumes from being reused too early, according to the protection period set.

If the Volume’s filesystem does not support the Append Only or Immutable flags, a proper warning message is printed in the job log and Bacula proceeds with the usual backup workflow.

There are three new directives available to set on a per-device basis to control the Volume Protection behavior:

- SetVolumeAppendOnly

Determines if Bacula should set the Append_Only attribute when writing on the volume for the first time.

- SetVolumeImmutable

Determines if Bacula should set the Immutable attribute when marking volume as Full.

- MinimumVolumeProtectionTime

Specifies how much time has to elapse before Bacula is able to clear the attribute.

Nutanix Filer Plugin

The Nutanix Incremental Accelerator plugin is designed to simplify and optimize the backup and restore performance of your Nutanix NAS hosting a large number of files.

When using the plugin for Incremental backups, Bacula Enterprise will query the Nutanix REST API for a previous backup snapshot, then quickly determine a list of all files modified since the last backup instead of having to walk recursively through the entire filesystem. Once Bacula has the backup list, it will use a standard network share (NFS or CIFS) to access the files.

The Nutanix HFC documentation provides information about this new plugin.

BWeb Management Console Enhancements

The BWeb Management Console menu organisation has been improved. The Job Administration and the Bacula Configuration parts are now accessible via a single menu. All wizards for common administration tasks are now placed in a main location accessible via a floating button on all pages.

ZStandard FileSet Compression Option

The ZSTD compression algorithm is now available in the FileSet option directive Compression. It is possible to configure ZSTD level 1 (zstd1), level 10 (zstd10) and level 19 (zstd19). The default zstd compression level is 10.

Call Home Plugin

Note

The Call Home Plugin needs further work on the service side with Bacula Systems. Consequently, the features described below are not implemented.

The callhome Director plugin can be used to automatically check if your contract with Bacula Systems is correct and if the system is compliant with your subscription.

To use this option, the bacula-enterprise-callhome-dir-plugin must be installed on the Director’s system, the PluginDirectory directive in the Director resource of the bacula-dir.conf must be set to /opt/bacula/plugins and the directive CustomerId in the Director resource must be set to your CustomerId available in the Welcome package that you have received.

Director {

Name = mydir-dir

...

CustomerId = mycustomerid_found_in_the_welcome_package

PluginDirectory = /opt/bacula/plugins

}

At a regular interval, the Director will contact the Bacula Systems server www.baculasystems.com (94.103.98.75) on the SSL port 443 to check the contract status based on the CustomerId. Bacula Systems will analyze and collect some information in this process:

The number of Clients

The number of Jobs in the catalog

The name of the Director

The Bacula version

The Uname of the Director platform

The list of the plugins installed

The size of all Jobs

The size of all volumes

RunScript Enhancements

A new Director RunScript RunsWhen keyword, AtJobCompletion, has been implemented. It runs the command at the end of the job and can update the job status if the command fails.

Job {

...

Runscript {

RunsOnClient = no

RunsWhen = AtJobCompletion

Command = "mail command"

AbortJobOnError = yes

}

}

This directive has been added because the RunsWhen keyword After was not designed to update the job status if the command fails.

Miscellaneous

Amazon Cloud Driver

A new Amazon Cloud Driver is available for beta testing. In the long term, it will enhance and replace the existing S3 cloud driver. The aws tool provided by Amazon is needed to use this cloud driver. The Amazon cloud driver is available within the bacula-enterprise-cloud-storage-s3 package.

Bacula Enterprise Installation Manager Enhancements

Swift Plugin Keystone v3 Authentication Support

Metadata Catalog Support

Plugins List in the Catalog

The list of the installed Plugins is now stored in the Client catalog table.

JSON Output

The console has been improved to support a JSON output to list catalog objects and various daemon output. The new “.jlist” command is a shortcut of the standard “list” command and will display the results in a JSON table. All options and filters of the “list” command can be used in the “.jlist” command. Only catalog objects are listed with the “list” or “.jlist” commands. Resources such as Schedule, FileSets, etc… are not handled by the “list” command.

See the “help list” bconsole output for more information about the “list” command. The Bacula configuration can be displayed in JSON format with the standard “bdirjson”, “bsdjson”, “bfdjson” and “bbconsjson” tools.

*.jlist jobs

{"type": "jobs", "data":[{"jobid": 1,"job": "CopyJobSave.2021-10-04_18.35.55_03",...

*.api 2 api_opts=j

*.status dir header

{"header":{"name":"127.0.0.1-dir","version":"12.8.2 (09 September 2021)"...
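Since the output is plain JSON, it can be consumed by any scripting language. The Python sketch below parses a reply shaped like the truncated ".jlist jobs" sample above. The complete reply string is hypothetical (only the type, data, jobid and job keys are taken from the sample, and the second job is invented), as is the function name.

```python
import json

# Hypothetical complete reply, shaped like the truncated ".jlist jobs" sample above.
raw = (
    '{"type": "jobs", "data": ['
    '{"jobid": 1, "job": "CopyJobSave.2021-10-04_18.35.55_03"}, '
    '{"jobid": 2, "job": "BackupCatalog.2021-10-04_19.00.00_04"}]}'
)


def job_names(reply):
    """Map each jobid to its job name from a '.jlist jobs' style reply."""
    doc = json.loads(reply)
    if doc.get("type") != "jobs":
        raise ValueError("unexpected reply type: %r" % doc.get("type"))
    return {item["jobid"]: item["job"] for item in doc["data"]}


print(job_names(raw))
```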

Bacula Enterprise 12.8.2

New Accurate Option to Save Only File’s Metadata

The new ’o’ Accurate directive option for a Fileset has been added to save only the metadata of a file when possible.

The new ’o’ option should be used in conjunction with one of the signature checking options (1, 2, 3, or 5). When the ’o’ option is specified, the signature is computed only for files that have one of the other accurate options specified triggering a backup of the file (for example an inode change, a permission change, etc…).

In cases where only the file’s metadata has changed (i.e., the signature is identical), only the file’s attributes will be backed up. If the file’s data has been changed (hence a different signature), the file will be backed up in the usual way (attributes as well as the file’s contents will be saved on the volume).

For example:

Job {

Name = JobTest

JobDefs = DefaultJob

FileSet = TestFS

Accurate = yes

}

FileSet {

Name = TestFS

Include {

Options {

Signature = MD5

Accurate = pino5

}

File = /data

}

}

The backup job will compare permission bits, inodes, and number of links; if any of them changes, it will also compute the file’s signature to verify whether only the metadata must be backed up or the full file must be saved.
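The decision described above can be summarized as a small function. The Python sketch below is a simplified model, not Bacula's code: the attribute dictionary stands for the fields selected by the p, i and n options, MD5 stands for the 5 option, and the names and data layout are invented for illustration.

```python
import hashlib


def backup_decision(previous, current_attrs, current_data):
    """Return 'skip', 'metadata' or 'full' for one file.

    previous: dict with 'attrs' (compared attributes) and 'md5' (hex digest)
    recorded by the last backup.
    """
    if current_attrs == previous["attrs"]:
        # None of the compared attributes changed: no signature is computed.
        return "skip"
    signature = hashlib.md5(current_data).hexdigest()
    if signature == previous["md5"]:
        # Same content, different attributes: save the attributes only.
        return "metadata"
    return "full"


previous = {
    "attrs": {"perm": 0o644, "inode": 42, "nlink": 1},
    "md5": hashlib.md5(b"hello").hexdigest(),
}
chmodded = {"perm": 0o600, "inode": 42, "nlink": 1}
print(backup_decision(previous, chmodded, b"hello"))    # metadata
print(backup_decision(previous, chmodded, b"changed"))  # full
```

The saving comes from the middle branch: a permission change on a large file costs one checksum pass on the client instead of a second copy of the data on the volume.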

Bacula Enterprise 12.8.0

Microsoft 365 Plugin

Microsoft 365 is a cloud-based software solution offered by Microsoft as a service. It is intended to be used by customers who want to externalize their business services like email, collaboration, video conferencing, file sharing, and others.

The Bacula Systems M365 Plugin is designed to handle the following pieces of the Microsoft 365 galaxy:

Granular Exchange Online Mailboxes (BETA)

OneDrive for Business and Sharepoint Document libraries

Sharepoint Sites

Contacts/People

Calendars

Events

The Plugin has many advanced features, including:

Microsoft Graph API based backups

Multi-service backup in the same task

Multi-service parallelization capabilities

Multi-thread single service processes

Generation of user-friendly report for restore operations

Network resiliency mechanisms

Latest Microsoft Authentication mechanisms

Discovery/List/Query capabilities

Restore objects to Microsoft 365 (to original entity or to any other entity)

Restore any object to filesystem

Incremental and Differential backup level

…

Please see the Bacula Enterprise M365 Plugin whitepaper for more information.

Storage Group

It is now possible to access more than one Storage resource for each Job/Pool. Storage can be specified as a comma separated list of Storage resources to use.

Along with specifying a storage list, it is now possible to specify a Storage Group Policy which will be used for accessing the list elements. If no policy is specified, Bacula always tries to take the first available Storage from the list. If the first few storage daemons are unavailable (due to network problems, broken, or unreachable for some other reason), Bacula will take the first one from the list (sorted according to the policy used) which is network reachable and healthy.

Currently supported policies are:

- ListedOrder - This is the default policy, which uses the first available storage from the list provided

- LeastUsed - This policy scans all storage daemons from the list and chooses the one with the least number of jobs being currently run

Storage Groups can be used as follows (as a part of Job and Pool resources):

Job {

...

Storage = File1, File2, File3

...

}

Pool {

...

Storage = File4, File5, File6

StorageGroupPolicy = LeastUsed

...

}
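The selection rule for the two policies can be sketched as follows. This Python model is only an illustration: the dictionaries, the reachable flag and the running_jobs counter are invented stand-ins for what the Director learns from each Storage Daemon, and the function name is hypothetical.

```python
def pick_storage(storages, policy="ListedOrder"):
    """Return the name of the first reachable storage after ordering by policy."""
    if policy == "ListedOrder":
        ordered = list(storages)
    elif policy == "LeastUsed":
        # sorted() is stable, so storages with equal load keep their listed order.
        ordered = sorted(storages, key=lambda s: s["running_jobs"])
    else:
        raise ValueError("unsupported policy: " + policy)
    for storage in ordered:
        if storage["reachable"]:
            return storage["name"]
    return None  # no storage in the list is usable


# Invented state for the storages of the Pool above.
pool_storages = [
    {"name": "File4", "reachable": False, "running_jobs": 0},
    {"name": "File5", "reachable": True, "running_jobs": 3},
    {"name": "File6", "reachable": True, "running_jobs": 1},
]
print(pick_storage(pool_storages))               # File5
print(pick_storage(pool_storages, "LeastUsed"))  # File6
```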

When a Job or Pool with Storage Group is used, the user can observe some messages related to the choice of Storage such as:

messages

31-maj 19:23 VBox-dir JobId 1: Start Backup JobId 1, Job=StoreGroupJob.2021-05-31_19.23.36_03

31-maj 19:23 VBox-dir JobId 1: Possible storage choices: "File1, File2"

31-maj 19:23 VBox-dir JobId 1: Storage daemon "File1" didn’t accept Device "FileChgr1-Dev1" becaus

31-maj 19:23 VBox-dir JobId 1: Selected storage: File2, device: FileChgr2-Dev1, StorageGroupPolic

Hyper-V VSS Single Item Restore

It is now possible to restore individual files from Hyper-V VSS Virtual Machine backups. The Hyper-V Single File Restore whitepaper provides information about it.

New Hyper-V Plugin

Hyper-V implements a VSS writer on all versions of Windows Server where Hyper-V is supported. This VSS writer allows developers to utilize the existing VSS infrastructure to back up virtual machines to Bacula using the Bacula Enterprise VSS Plugins.

Starting in Windows Server 2016, Hyper-V also supports backup through the Hyper-V WMI API. This approach still utilizes VSS inside the virtual machine for backup purposes, but no longer uses VSS in the host operating system. It allows individual Guest VMs to be backed up separately and incrementally. This approach is more scalable than using VSS in the host, however it is only available on Windows Server 2016 and later. The new Bacula Enterprise Hyper-V Plugin “hv” uses this technology for backup and restore to/from Bacula.

The Microsoft Hyper-V whitepaper provides more information.

VMWare vSphere Plugin Enhancements

vSphere Permissions

The vsphere-ctl command can now check the permissions of the current user on the vCenter system and diagnose issues if any are detected.

/opt/bacula/bin/vsphere-ctl query list_missing_permissions

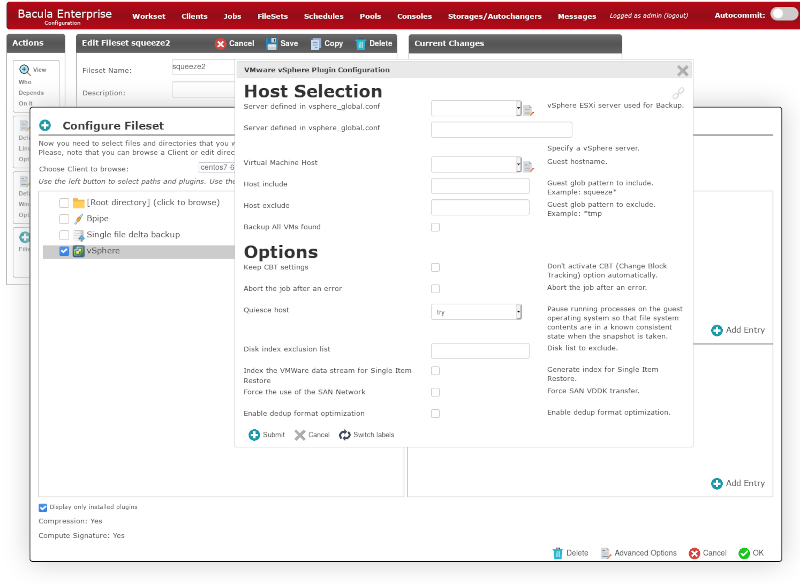

Configuration

It is now possible to manage the vsphere_global.conf parameter file with the vsphere-ctl config * command.

vsphere-ctl config create - creates an entry inside vsphere_global.conf

vsphere-ctl config delete - deletes an entry inside vsphere_global.conf

vsphere-ctl config list - lists all entries inside vsphere_global.conf

/opt/bacula/bin/vsphere-ctl config create

[root@localhost bin]# ./vsphere-ctl config create

Enter url: 192.168.0.15

Enter user: administrator@vsphere.local

Enter password:

Connecting to "https://192.168.0.15/sdk"...

OK: successful connection

Select an ESXi host to backup:

1) 192.168.0.8

2) 192.168.0.26

Select host: 1

Computing thumbprint of host "192.168.0.8"

OK: thumbprint for "192.168.0.8" is 04:24:24:13:3C:AD:63:84:A1:9F:E5:14:82

OK: added entry [192_168_0_8] to ../etc/vsphere_global.conf
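The thumbprint printed in the session above is a colon-separated hexadecimal digest of the host certificate. The following minimal sketch shows how such a string can be formatted from raw certificate bytes. The function name `format_thumbprint` and the choice of SHA-1 are assumptions made for illustration; the source does not state which digest vsphere-ctl uses.

```python
import hashlib


def format_thumbprint(der_bytes: bytes, algorithm: str = "sha1") -> str:
    """Return a colon-separated, upper-case hex digest of certificate bytes.

    The SHA-1 default is an assumption, not a documented vsphere-ctl behavior.
    """
    digest = hashlib.new(algorithm, der_bytes).hexdigest().upper()
    # Group the hex string into byte pairs: "A999..." -> "A9:99:..."
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


if __name__ == "__main__":
    # Placeholder bytes stand in for a real DER-encoded certificate.
    print(format_thumbprint(b"example certificate bytes"))
```

A value computed this way can be compared with the one reported during backup or restore when investigating a thumbprint mismatch message.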

Instant Recovery

The VMWare Instant Recovery feature has been enhanced to handle errors during migration and the NFS Datastore creation more effectively. The cleanup procedure has also been reviewed.

Backup and Restore

During the Backup or the Restore process, the thumbprint of the target ESXi Host system is now verified and a clear message is printed if the expected thumbprint is incorrect.

Restore

The support for SATA Disk controllers has been added.

BWeb Management Suite

Event Dashboard

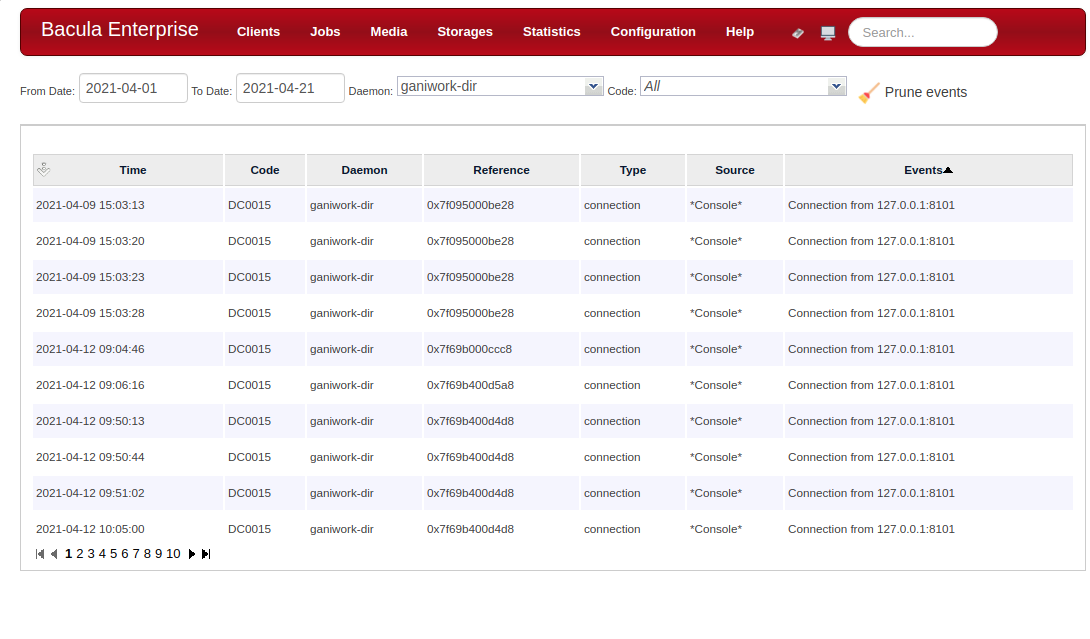

BWeb Management Suite has a new dashboard to browse Bacula events stored in the Bacula Catalog (see Event and Auditing).

OpenShift Plugin

The Bacula Enterprise OpenShift Plugin is now certified and available directly from the Red Hat OpenShift system.

Please see the OpenShift whitepaper for more information.

Bacula Enterprise Ansible Collection

Ansible Collections are a new and flexible standard to distribute content like playbooks and roles. This new format helps to easily distribute and automate your environment. These pre-packaged collections can also be modified to meet the needs of your environment, especially by using templates and variables.

Our Bacula Enterprise Ansible Collection will help you to easily deploy Directors, Clients, and Storages in your environment. Since Bacula Enterprise version 12.6.4, a new option was introduced to the BWeb configuration split script to allow the configuration to be “re-split” when deploying new resources with the Bacula Enterprise Ansible Collection playbooks.

Our collection will create configuration files that can be integrated to your current BWeb configuration by using the tests/re-split-configuration.yml playbook provided in the collection. This is useful to know and use when BWeb is being used to manage your Bacula Enterprise environment.

We strongly recommend using the BWeb configuration split script if you use the Bacula Enterprise Ansible Collection to deploy new Clients and Storages and your Bacula Enterprise environment uses BWeb to manage configuration files, because the script checks that all the resources being added to the current BWeb structure are correctly defined.

Bacula Enterprise plugins can also be deployed using the Ansible Collection. Please adapt the templates provided to take advantage of the specific configuration needs of your environment.

More information about Ansible Galaxy Collections may be found in a blog post called “Getting Started With Ansible Content Collections” available on the official Ansible website here: https://www.ansible.com/blog/getting-started-with-ansible-collections

The Bacula Enterprise Ansible Collection is publicly available in Ansible Galaxy:

Misc

Plugin Object Status Support

The Object table now has an ObjectStatus field that plugins can use to report more precise information about the backup of an Object (generated by Plugins).

SAP HANA 1.50 Support

The Bacula Enterprise SAP HANA Plugin is now certified with the SAP HANA 1.50 protocol version (SAP HANA 2 SP5).

Network Buffer Management

The new SDPacketCheck FileDaemon directive can be used to control

the network flow in some specific use cases.

See SDPacketCheck directive in the client configuration for more information.

IBM Lintape Driver (BETA)

The new Use Lintape Storage Daemon directive has been added to

support the Lintape Kernel driver.

See Use LinTape directive in the Storage Daemon Device{} resource for more information.

Bacula Enterprise 12.6.0

VMware Instant Recovery

It is now possible to recover a vSphere Virtual Machine in a matter of minutes by running it directly from a Bacula Volume.

Any changes made to the Virtual Machine’s disks are virtual and temporary. This means that the disks remain in a read-only state. The users may write to the Virtual Machine’s disks without the fear of corrupting their backups. Once the Virtual Machine is started, it is then possible via VMotion to migrate the temporary Virtual Machine to a production datastore.

Please see the Single Item Restore whitepaper and the vSphere Plugin whitepaper for more information.

New Features in BWeb Management Suite

New FileSet Editing Window

A new FileSet editing window is available. It is now possible to configure the different plugins with dynamic controls within BWeb.

Tag Support

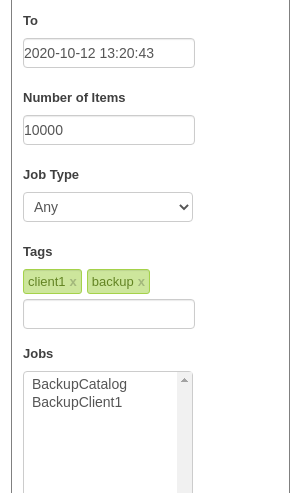

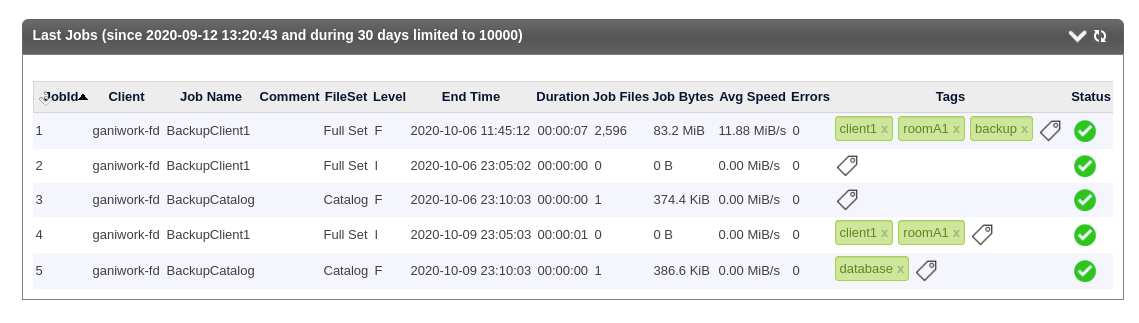

BWeb now supports user-defined tags. It is possible to assign tags to various catalog records (such as Jobs, Clients, Objects, Volumes).

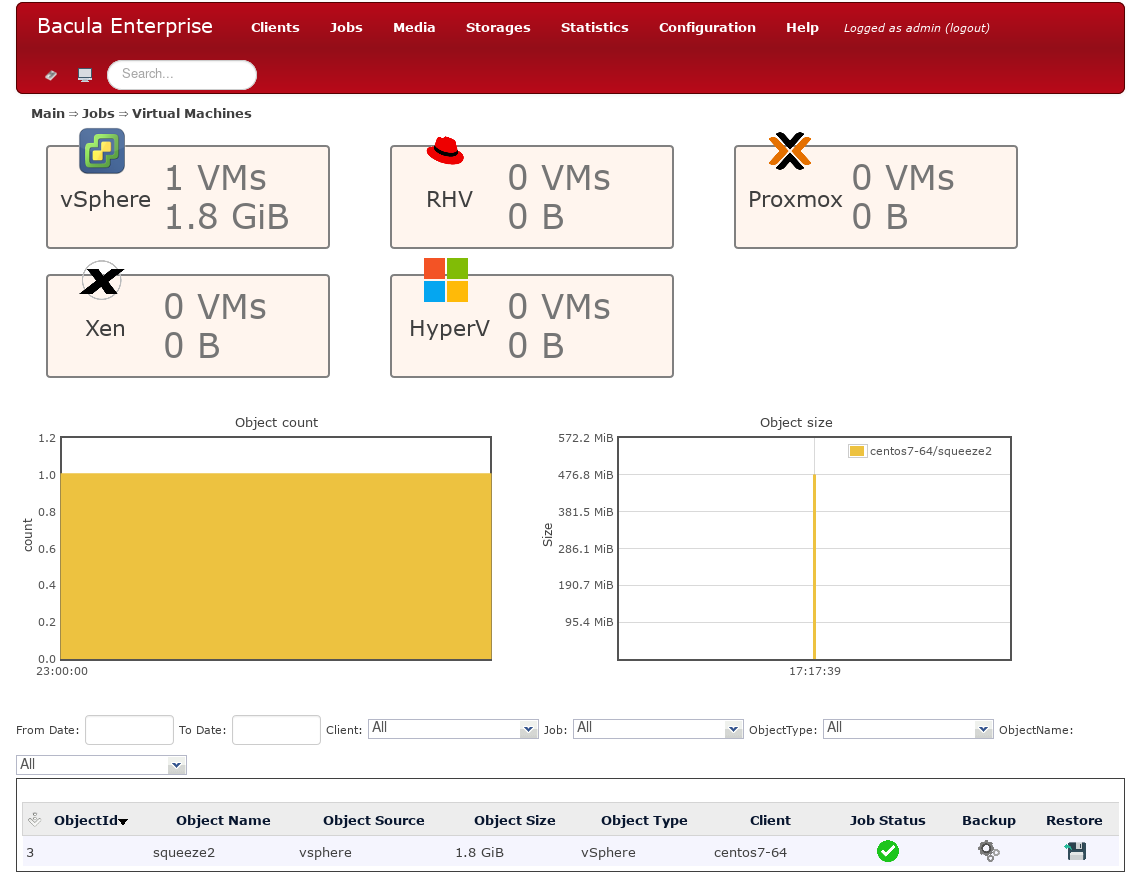

Virtual Machine Dashboard

A new Virtual Machine dashboard is available in the Job / Virtual Machines menu. This dashboard lists all Virtual Machines, and any of them can be backed up or restored directly from this new interface.

VSS Plugin Enhancements

The VSS Plugin has been improved to automatically detect the volumes to include in the Snapshot Set depending on the Writers and the Components that are included/excluded during the Backup job. The alldrives plugin or the use of a dummy file is no longer needed.

See the VSS whitepaper for more information.

Hyper-V Cluster Support

The new VSS Plugin supports the Hyper-V Cluster mode using Cluster Shared Volumes (CSV).

See the VSS whitepaper for more information.

Windows Cluster Volume Support

The Bacula FileDaemon now supports the Cluster Shared Volumes (CSV) natively. Note that due to a Microsoft restriction with the Snapshot Sets, it is not possible to mix standard volumes with CSV volumes within a single Job.

External LDAP Console Authentication

The new Bacula Pluggable Authentication Module (BPAM) API framework introduced in Bacula Enterprise 12.6 comes with a first plugin, which handles user authentication against any LDAP Directory Server (including OpenLDAP and Active Directory).

# bconsole

*status director

...

Plugin: ldap-dir.so

...

When the LDAP plugin is loaded you can configure a named console

resource to use LDAP to authenticate users. BConsole will prompt for a

User and Password and it will be verified by the Director. TLS PSK

(activated by default) is recommended. To use this plugin, you have to

specify the PluginDirectory Director resource directive, and add a

new console resource directive Authentication Plugin as shown below:

Director {

...

Plugin Directory = /opt/bacula/plugins

}

Console {

Name = "ldapconsole"

Password = "xxx"

# New directive

Authentication Plugin = "ldap:<parameters>"

...

}

where parameters are the space separated list of one or more plugin

parameters:

url - LDAP Directory service location, i.e. “url=ldap://10.0.0.1/”

binddn - DN used to connect to LDAP Directory service to perform required query

bindpass - DN password used to connect to LDAP Directory service to perform required query

query - A query performed on the LDAP Directory service to find the user for authentication. The query string is composed as <basedn>/<filter>, where <basedn> is a DN search starting point and <filter> is a standard LDAP search object filter that supports dynamic string substitution: %u will be replaced by the credential’s username and %p by the credential’s password, i.e. query=dc=bacula,dc=com/(cn=%u).

starttls - Instruct the BPAM LDAP Plugin to use the **StartTLS** extension if the LDAP Directory service will support it and fallback to no TLS if this extension is not available.

starttlsforce - Does the same as the ‘starttls‘ setting does but reports error on fallback.
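The structure of the query parameter described above can be sketched as follows. This is an illustration of the `<basedn>/<filter>` split and the %u / %p substitution only, not the plugin's implementation. The helper names are hypothetical, and escaping the substituted values according to RFC 4515 is an assumption about good practice rather than documented plugin behavior.

```python
import re
from typing import Tuple

# Characters with a special meaning inside an LDAP filter (RFC 4515).
_ESCAPES = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\0": r"\00"}


def escape_filter_value(value: str) -> str:
    """Escape a value before placing it inside an LDAP search filter."""
    return "".join(_ESCAPES.get(ch, ch) for ch in value)


def split_query(query: str) -> Tuple[str, str]:
    """Split '<basedn>/<filter>' at the first slash."""
    basedn, sep, ldap_filter = query.partition("/")
    if not sep or not basedn or not ldap_filter:
        raise ValueError("query must look like <basedn>/<filter>")
    return basedn, ldap_filter


def expand_filter(ldap_filter: str, username: str, password: str = "") -> str:
    """Replace %u and %p in a single pass with escaped credential values."""
    values = {"u": escape_filter_value(username), "p": escape_filter_value(password)}
    return re.sub(r"%([up])", lambda m: values[m.group(1)], ldap_filter)


if __name__ == "__main__":
    basedn, ldap_filter = split_query("dc=bacula,dc=com/(cn=%u)")
    print(basedn)
    print(expand_filter(ldap_filter, "alice"))
```

The substitution is done in one pass so that a username containing the text %p is not expanded a second time.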

Working configuration examples:

bacula-dir.conf - Console resource configuration for BPAM LDAP Plugin with OpenLDAP authentication example.

Console {

Name = "bacula_ldap_console"

Password = "xxx"

# New directive (on a single line)

Authentication Plugin = "ldap:url=ldap://ldapsrv/ binddn=cn=root,dc=bacula,dc=com bindpass=secret query=dc=bacula,dc=com/(cn=%u) starttls"

...

}

bacula-dir.conf - Console resource configuration for BPAM LDAP Plugin with Active Directory authentication example.

Console {

Name = "bacula_ad_console"

Password = "xxx"

# New directive (on a single line)

Authentication Plugin = "ldap:url=ldaps://ldapsrv/ binddn=cn=bacula,ou=Users,dc=bacula,dc=com bindpass=secret query=dc=bacula,dc=com/(&(objectCategory=person)(objectClass=user)(sAMAccountName=%u))"

...

}

In Bacula Enterprise 12.6.0, File Daemon Plugins will generate Objects recorded in the Catalog to easily find and restore plugin Objects such as databases or virtual machines. The Objects are easy to list, count and manage. Objects can be restored without knowing any details about the Job, the Client, or the Fileset. Each plugin can create multiple Objects of the specific type.

As of now, the following plugins support Object Management:

PostgreSQL (in dump mode)

MySQL (in dump mode)

MSSQL VDI

vSphere

VSS Hyper-V

Xenserver

Proxmox

*list objects

Automatically selected Catalog: MyCatalog

Using Catalog "MyCatalog"

| objectid | jobid | objectcategory | objecttype | objectname |
|---|---|---|---|---|
| 1 | 1 | Database | PostgreSQL | postgres |
| 2 | 1 | Database | PostgreSQL | template1 |
| 3 | 1 | Virtual Machine | VMWare | VM_1 |

*list objects category="Database"

Automatically selected Catalog: MyCatalog

Using Catalog "MyCatalog"

| objectid | jobid | objectcategory | objecttype | objectname |
|---|---|---|---|---|
| 2 | 1 | Database | PostgreSQL | template1 |
| 4 | 1 | Database | PostgreSQL | database1 |

Objects can be easily deleted:

*delete

In general it is not a good idea to delete either a

Pool or a Volume since they may contain data.

You have the following choices:

1: volume

2: pool

3: jobid

4: snapshot

5: client

6: tag

7: object

Choose catalog item to delete (1-7): 7

Enter ObjectId to delete: 1

It is also possible to delete specified groups of objects:

*delete object objectid=2,3-7,9
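The objectid argument above mixes single identifiers and inclusive ranges. As a sketch of how such a specification expands into individual ObjectIds, assuming the same comma and dash syntax (the function name `expand_ids` is hypothetical and this is not Bacula's own parser):

```python
from typing import List


def expand_ids(spec: str) -> List[int]:
    """Expand a list such as '2,3-7,9' into individual integer ids."""
    ids: List[int] = []
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            first_text, last_text = part.split("-", 1)
            first, last = int(first_text), int(last_text)
            if first > last:
                raise ValueError(f"invalid range: {part}")
            # Ranges are treated as inclusive on both ends.
            ids.extend(range(first, last + 1))
        else:
            ids.append(int(part))
    return ids


if __name__ == "__main__":
    print(expand_ids("2,3-7,9"))
```

Expanding the list before running the delete command is a simple way to double-check which Objects a range will cover.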

There is a new item in the restore menu to restore Objects easily:

*restore objectid=2

OR

*restore

Automatically selected Catalog: MyCatalog

Using Catalog "MyCatalog"

First you select one or more JobIds that contain files

to be restored. You will be presented several methods

of specifying the JobIds. Then you will be allowed to

select which files from those JobIds are to be restored.

To select the JobIds, you have the following choices:

1: List last 20 Jobs run

2: List Jobs where a given File is saved

...

11: Enter a list of directories to restore for found JobIds

12: Select full restore to a specified Job date

13: Select object to restore     <-----------

14: Cancel

Select item: (1-14): 13

List of the Object Types:

1: PostgreSQL Database

2: VMWare Virtual Machine

Select item: (1-2): 1

Automatically selected : database1

Objects available:

| objectid | objectname | client | objectsource | starttime | objectsize |
|---|---|---|---|---|---|
| 2 | template1 | 127.0.0.1-fd | PostgreSQL Plugin | 2020-10-15 13:10:15 | 10240 |
| 4 | database1 | 127.0.0.1-fd | PostgreSQL Plugin | 2020-10-15 13:10:17 | 10240 |

Enter ID of Object to be restored: 2

Automatically selected Client: 127.0.0.1-fd

Bootstrap records written to /opt/bacula/working/127.0.0.1-dir.restore.1.bsr

The Job will require the following (*=>InChanger):

Volume(s)                 Storage(s)                SD Device(s)

===========================================================================

TestVolume001 File1

Volumes marked with "*" are in the Autochanger.

1 file selected to be restored.

Using Catalog "MyCatalog"

Run Restore job

JobName: RestoreFiles

...

Catalog: MyCatalog

Priority: 10

Plugin Options: *None*

OK to run? (yes/mod/no): yes

Job queued. JobId=5

Objects can easily be managed from various BWeb Management Suite screens. (See objectbweb).

Support for MariaDB 10 in the MySQL Plugin’s Binary Backup Mode

Starting with MariaDB 10, the MariaDB team has introduced new backup tools based on the Percona backup tools. The MySQL FileDaemon Plugin now can determine dynamically which backup tool to use during a binary backup.

Tag Support

It is now possible to assign custom Tags to various catalog records in Bacula such as:

Volume

Client

Job

*tag

Automatically selected Catalog: MyCatalog

Using Catalog "MyCatalog"

Available Tag operations:

1: Add

2: Delete

3: List

Select Tag operation (1-3): 1

Available Tag target:

1: Client

2: Job

3: Volume

Select Tag target (1-3): 1

The defined Client resources are:

1: 127.0.0.1-fd

2: test1-fd

3: test2-fd

4: test-rst-fd

5: test-bkp-fd

Select Client (File daemon) resource (1-5): 1

Enter the Tag value: test1

1000 Tag added

*tag add client=127.0.0.1-fd name="#important"

1000 Tag added

*tag list client

| tag | clientid | client |
|---|---|---|
| #tagviamenu3 | 1 | 127.0.0.1-fd |
| test1 | 1 | 127.0.0.1-fd |
| #tagviamenu2 | 1 | 127.0.0.1-fd |
| #tagviamenu1 | 1 | 127.0.0.1-fd |
| #important | 1 | 127.0.0.1-fd |

*tag list client name=#important

| clientid | client |
|---|---|
| 1 | 127.0.0.1-fd |

It is possible to assign Tags to a Job record with the new ’Tag’ directive in a Job resource.

Job {

Name = backup

...

Tag = "#important", "#production"

}

| tag | jobid | job |
|---|---|---|
| #important | 2 | backup |
| #production | 2 | backup |

The Tags are also accessible from various BWeb Management Suite screens. (See tagbweb).

Support for SHA256 and SHA512 in FileSet

The support for strong signature algorithms SHA256 and SHA512 has been added to Verify Jobs. It is now possible to check if data generated by a Job that uses an SHA256 or SHA512 signature is valid.

FileSet {

Options {

Signature = SHA512

Verify = pins3

}

File = /etc

}

In the FileSet Verify option directive, the following codes have been added:

3 - for SHA512

2 - for SHA256
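To make concrete what checking an SHA256 or SHA512 signature involves, the sketch below recomputes a file digest with Python's standard hashlib module so that it can be compared with a stored value. It illustrates the general technique only and is not how Bacula implements Verify Jobs; the function name `digest_file` and the chunk size are arbitrary choices.

```python
import hashlib


def digest_file(path: str, algorithm: str = "sha512", chunk_size: int = 65536) -> str:
    """Return the hex digest of a file, reading it in chunks to bound memory use."""
    hasher = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def matches(path: str, expected_hex: str, algorithm: str = "sha512") -> bool:
    """Compare a freshly computed digest with a previously stored one."""
    return digest_file(path, algorithm) == expected_hex.lower()
```

An SHA512 digest is 128 hexadecimal characters long and an SHA256 digest is 64, so the stored value also indicates which algorithm produced it.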

Support for MySQL Cluster Bacula Catalog

The Bacula Director Catalog service can now use MySQL in cluster mode

with the replication option sql_require_primary_key=ON. The support

is dynamically activated.

Support of Windows Operating System in bee_installation_manager

Bacula Enterprise can now be installed in a very simple and straightforward way with the bee_installation_manager procedure on Windows operating systems. The program uses the Customer Download Area information to help users install Bacula Enterprise in just a few seconds.

The installation of Bacula Enterprise on Windows can now be automated with the following commands:

# wget https://baculasystems.com/ml/bee_installation_manager.exe

# bee_installation_manager.exe

Please see the Bacula Enterprise Installation Manager whitepaper for more information.

Windows Installer Silent Mode Enhancement

The following command line options can be used to control the regular Bacula installer values in silent mode:

-ConfigClientName

-ConfigClientPort

-ConfigClientPassword

-ConfigClientMaxJobs

-ConfigClientInstallService

-ConfigClientStartService

-ConfigStorageName

-ConfigStoragePort

-ConfigStorageMaxJobs

-ConfigStoragePassword

-ConfigStorageInstallService

-ConfigStorageStartService

-ConfigDirectorName

-ConfigDirectorPort

-ConfigDirectorMaxJobs

-ConfigDirectorPassword

-ConfigDirectorDB

-ConfigDirectorInstallService

-ConfigDirectorStartService

-ConfigMonitorName

-ConfigMonitorPassword

The following options control the installed components:

-ComponentFile

-ComponentStorage

-ComponentTextConsole

-ComponentBatConsole

-ComponentTrayMonitor

-ComponentAllDrivesPlugin

-ComponentWinBMRPlugin

-ComponentCDPPlugin

Example

bacula-enterprise-win64-12.4.0.exe /S -ComponentFile -ConfigClientName foo -ConfigClientPassword bar

Will install only the File Daemon with bacula-fd.conf configured.

bacula-enterprise-win64-12.4.0.exe /S -ComponentStorage -ComponentFile -ConfigClientName foo -ConfigClientPassword bar -ConfigStorageName foo2 -ConfigStoragePassword bar2

Will install the Storage Daemon plus the File Daemon with bacula-sd.conf and bacula-fd.conf configured.
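When the installer is driven from a deployment script, the command line can be assembled from the option names listed above. The helper below is a hypothetical sketch: it assumes, based on the two examples, that -Component options are bare switches and that each -Config option is followed by its value as a separate argument.

```python
from typing import Dict, List


def build_silent_command(installer: str,
                         components: List[str],
                         settings: Dict[str, str]) -> List[str]:
    """Build an argument list for a silent (/S) installation.

    components: names without the '-Component' prefix, e.g. ["File", "Storage"].
    settings:   names without the '-Config' prefix, e.g. {"ClientName": "foo"}.
    """
    args = [installer, "/S"]
    args += [f"-Component{name}" for name in components]
    for key, value in settings.items():
        args += [f"-Config{key}", str(value)]
    return args


if __name__ == "__main__":
    command = build_silent_command(
        "bacula-enterprise-win64-12.4.0.exe",
        ["File"],
        {"ClientName": "foo", "ClientPassword": "bar"},
    )
    print(" ".join(command))
```

Keeping the arguments as a list rather than a single string avoids quoting problems if the result is later passed to a process-launching API.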

New Global Endpoint Deduplication Storage System (BETA)

A new Dedup engine comes with a new storage format for the data on disk. The new format keeps the data of a backup grouped together, which significantly increases the speed of both backup and restore operations. The new dedup vacuum command integrates a procedure that compacts scattered data in order to clear large, contiguous areas for new data and to reduce fragmentation. Please contact the Bacula Systems Customer Success team if you are interested in joining the beta program.

Bacula Enterprise 12.4.1

New Message Identification Format

We are starting to add unique message identifiers to each message (other than debug and the Job report) that Bacula prints. At the current time only two files in the Storage Daemon have these message identifiers; over time, with subsequent releases, we will modify all messages.

The message identifier will be kept unique for each message and once assigned to a message it will not change even if the text of the message changes. This means that the message identifier will be the same no matter what language the text is displayed in, and more importantly, it will allow us to make listings of the messages with, in some cases, additional explanation or instructions on how to correct the problem. All this will take several years since it is a lot of work and requires some new programs that are not yet written to manage these message identifiers.

The format of the message identifier is:

[AAnnnn]

where each A is an upper case character and nnnn is a four digit number. The first character indicates the software component (daemon), the second character indicates the severity, and the number is unique for a given component and severity.

For example:

[SF0001]

The first character, representing the component, is at the current time one of the following:

S Storage daemon

D Director

F File daemon

The second character representing the severity or level can be:

A Abort

F Fatal

E Error

W Warning

S Security

I Info

D Debug

O OK (i.e. operation completed normally)

So in the example above [SF0001] indicates it is a message id, because of the brackets and because it is at the beginning of the message, and that it was generated by the Storage daemon as a fatal error. As mentioned above it will take some time to implement these message ids everywhere, and over time we may add more component letters and more severity levels as needed.
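The format described above lends itself to mechanical parsing, for example when filtering log files. The following sketch decodes an identifier at the beginning of a message using the component and severity letters listed in this section. The function name and the returned tuple are illustrative choices, and letters added in later releases would simply be reported as unknown.

```python
import re
from typing import Optional, Tuple

COMPONENTS = {"S": "Storage daemon", "D": "Director", "F": "File daemon"}
SEVERITIES = {
    "A": "Abort", "F": "Fatal", "E": "Error", "W": "Warning",
    "S": "Security", "I": "Info", "D": "Debug", "O": "OK",
}

# Two upper-case letters followed by four digits, in brackets, at line start.
MESSAGE_ID = re.compile(r"^\[([A-Z])([A-Z])(\d{4})\]")


def parse_message_id(line: str) -> Optional[Tuple[str, str, int]]:
    """Return (component, severity, number), or None if no id is present."""
    match = MESSAGE_ID.match(line)
    if match is None:
        return None
    component, severity, number = match.groups()
    return (
        COMPONENTS.get(component, "unknown"),
        SEVERITIES.get(severity, "unknown"),
        int(number),
    )


if __name__ == "__main__":
    print(parse_message_id("[SF0001] example message text"))
```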

GPFS ACL Support

The new Bacula Enterprise FileDaemon supports the GPFS filesystem specific ACL. The GPFS libraries must be installed in the standard location. To know if the GPFS support is available on your system, the following commands can be used.

*setdebug level=1 client=stretch-amd64-fd

Connecting to Client stretch-amd64-fd at stretch-amd64:9102

2000 OK setdebug=1 trace=0 hangup=0 blowup=0 options= tags=

*st client=stretch-amd64-fd

Connecting to Client stretch-amd64-fd at stretch-amd64:9102

stretch-amd64-fd Version: 12.4.0 (20 July 2020) x86_64-pc-linux-gnu-bacula-enterprise debian 9.11

Daemon started 21-Jul-20 14:42. Jobs: run=0 running=0.

Ulimits: nofile=1024 memlock=65536 status=ok

Heap: heap=135,168 smbytes=199,993 max_bytes=200,010 bufs=104 max_bufs=105

Sizes: boffset_t=8 size_t=8 debug=1 trace=0 mode=0,2010 bwlimit=0kB/s

Crypto: fips=no crypto=OpenSSL 1.0.2u 20 Dec 2019

APIs: GPFS

Plugin: bpipe-fd.so(2)

The APIs line will indicate if the /usr/lpp/mmfs/libgpfs.so was

loaded at the start of the Bacula FD service or not.

The standard ACL Support directive can be used to automatically enable support for GPFS ACL backup.

Bacula Enterprise 12.4

RHV Incremental Backup Support

The new Bacula Enterprise RHV Plugin supports Virtual Machine incremental backup.

Please see the RHV Plugin whitepaper for more information.

RHV Proxy Backup Support

The new Bacula Enterprise RHV Plugin can use a proxy to backup Virtual Machines.

Please see the RHV Plugin whitepaper for more information.

HDFS Hadoop Plugin

The Bacula Enterprise HDFS Plugin can save the objects stored in an HDFS cluster.

During a backup, the HDFS Hadoop Plugin will contact the Hadoop File System to generate a system snapshot and retrieve files one by one. During an incremental or a differential backup session, the Bacula File Daemon will read the differences between two Snapshots to determine which files should be backed up.
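The idea of selecting files from the differences between two snapshots can be sketched as a comparison of two listings. The example below is a simplified illustration only. It assumes each snapshot is represented as a mapping from path to a (modification time, size) pair, which is a hypothetical data model and not the plugin's actual mechanism.

```python
from typing import Dict, List, Tuple

Snapshot = Dict[str, Tuple[int, int]]  # path -> (mtime, size)


def changed_files(previous: Snapshot, current: Snapshot) -> List[str]:
    """Return paths that are new or modified in the current snapshot."""
    return sorted(
        path for path, meta in current.items() if previous.get(path) != meta
    )


def deleted_files(previous: Snapshot, current: Snapshot) -> List[str]:
    """Return paths that existed in the previous snapshot but no longer do."""
    return sorted(set(previous) - set(current))


if __name__ == "__main__":
    old = {"/data/a": (100, 10), "/data/b": (100, 5)}
    new = {"/data/a": (100, 10), "/data/b": (200, 6), "/data/c": (300, 1)}
    print(changed_files(old, new))
    print(deleted_files(old, new))
```

Only the paths returned by `changed_files` would need to be read during an incremental or differential session in this simplified model.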

Please see the HDFS Plugin whitepaper for more information.

NDMP SMTAPE Incremental Support

Bacula Enterprise NDMP Plugin now supports the SMTAPE Incremental feature.

Please see the NDMP Plugin whitepaper for more information.

NDMP EMC Unity Global Endpoint Deduplication Support

The NDMP plugin has been enhanced to greatly increase the deduplication ratio of EMC DUMP images and TAR images.

When the NDMP system is identified as an EMC host or the format is TAR or DUMP and the target storage device supports the Bacula Global Endpoint Deduplication option, the NDMP data stream will be analyzed automatically. The following message will be displayed in the Job log.

JobId 1: EMCTAR analyzer for Global Endpoint Deduplication enabled

Please see the NDMP Plugin whitepaper for more information.

vSphere Virtual Machine Overwrite During Restore

The new Bacula Enterprise vSphere Plugin can now overwrite existing Virtual Machines during the restore process.

Please see the vSphere Plugin whitepaper for more information.

BWeb Management Console New Features

Remote Client Installation

BWeb Management Console can now deploy Bacula Enterprise File Daemons to remote client machines.

Event and Auditing

The Director daemon can now record events such as:

Console connection/disconnection

Daemon startup/shutdown

Command execution

…

The events may be stored in a new catalog table, to disk, or sent via syslog.

Messages {

Name = Standard

catalog = all, events

append = /opt/bacula/working/bacula.log = all, !skipped

append = /opt/bacula/working/audit.log = events, !events.bweb

}

Messages {

Name = Daemon

catalog = all, events

append = /opt/bacula/working/bacula.log = all, !skipped

append = /opt/bacula/working/audit.log = events, !events.bweb

append = /opt/bacula/working/bweb.log = events.bweb

}

The new message category “events” is not included in the default configuration files.

It is possible to filter out some events using “!events.” form. It is possible to specify 10 custom events per Messages resource.

All event types are recorded by default.

When stored in the catalog, the events can be listed with the “list events” command.

* list events [type=<str> | limit=<int> | order=<asc|desc> | days=<int> | start=<time-specification> | end=<time-specification>]

| time | type | source | event |
|---|---|---|---|
| 2020-04-24 17:04:07 | daemon | Daemon | Director startup |
| 2020-04-24 17:04:12 | connection | Console | Connection from 127.0.0.1:8101 |
| 2020-04-24 17:04:20 | command | Console | purge jobid=1 |

The .events command can be used to record an external event. The source will be recorded as “**source**”. The event type can have a custom name.

* .events type=bweb source=joe text="User login"

The Director EventsRetention directive can be used to control the pruning of the Event catalog table.

Misc 2

Bacula Enterprise Installation Manager

Bacula Enterprise can now be installed in a very simple and straightforward way with the bee_installation_manager procedure. The program uses the Customer Download Area information to help users install Bacula Enterprise in just a few seconds.

The installation of Bacula Enterprise on RHEL, Debian and Ubuntu can now be automated with the following commands:

# wget https://www.baculasystems.com/ml/bee_installation_manager

# chmod +x ./bee_installation_manager

# ./bee_installation_manager

Please see the Bacula Enterprise Installation Manager whitepaper for more information.

VMware PERL SDK Replacement

The VMware PERL SDK is no longer required to configure VMware backup jobs with the vsphere plugin. To use the vSphere - BWeb integration, all that now needs to be installed on the BWeb server is the bacula-enterprise-vsphere plugin.

SAP HANA TOOLOPTION

The TOOLOPTION parameter can be used to customize some backint parameters at runtime. The following job options can be modified:

job

pool

level

hdbsql -i 00 -u SYSTEM -p X -d SYSTEMDB "BACKUP DATA INCREMENTAL USING BACKINT ('Inc2') TOOLOPTION 'level=full'"

QT5 on Windows

Microsoft Windows graphical programs are now using QT5.

Bacula Enterprise 12.2

Kubernetes Plugin

The Bacula Enterprise Kubernetes Plugin can save all the important Kubernetes Resources which build applications or services. This includes the following namespaced objects:

Config Map

Daemon Set

Deployment

Endpoint

Limit Range

Pod

Persistent Volume Claim

Pod Template

Replica Set

Replication Controller

Resource Quota

Secret

Service

Service Account

Stateful Set

PVC Data Archive

and non namespaced objects:

Namespace

Persistent Volume

All namespaced objects that belong to a particular namespace are grouped together for easy browsing and recovery of backup data.

Please see the Kubernetes Plugin whitepaper for more information.

RHV Single Item Restore Support

BWeb Management Suite and a console tool named “mount-vm” allow the restore of single files from Red Hat Virtualization VM backups.

Please see the RHV Plugin and the Single Item Restore whitepaper for more information.

FIPS Support

The Federal Information Processing Standards (FIPS) define U.S. and Canadian Government security and interoperability requirements for cryptographic modules. They describe the approved security functions for symmetric and asymmetric key encryption, message authentication, and hashing.

For more information about the FIPS 140-2 standard and its validation program, see the National Institute of Standards and Technology (NIST) and the Communications Security Establishment Canada (CSEC) Cryptographic Module Validation Program at http://csrc.nist.gov/groups/STM/cmvp.

Bacula Enterprise adds FIPS compliance through the Bacula Enterprise Cryptographic Module “OpenSSL-FIPS” [2]_ that was certified on a number of platforms and by various vendors including RHEL [3]_. Bacula Enterprise daemons and tools can now display information about the current FIPS status and require a FIPS-compliant crypto library to be used on all Bacula components (for example, the MD5 hash function is not included in FIPS and an error will be reported if it is in use).

On RHEL 8, a specific procedure is required to activate the FIPS mode

with the fips-mode-setup tool. More information can be found on

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/security_hardening/assembly_installing-a-rhel-8-system-with-fips-mode-enabled_security-hardening

To activate the FIPS requirements in a Bacula component (Console, Client, Director, Storage), the FIPS Require directive must be set.

root@localhost:~/ head -3 /opt/bacula/etc/bacula-fd.conf

FileDaemon {

Name = localhost-fd

FIPS Require = yes

}

root@localhost:~/ head -3 /opt/bacula/etc/bacula-dir.conf

Director {

Name = localhost-dir

FIPS Require = yes

}

root@localhost:~/ head -3 /opt/bacula/etc/bacula-sd.conf

Storage {

Name = localhost-sd

FIPS Require = yes

}

root@localhost:~/ head -3 /opt/bacula/etc/bconsole.conf

Director {

Name = localhost-dir

FIPS Require = yes

}

The FIPS status is displayed in the “Crypto” section of the header of the status command output for each daemon.

root@localhost:~/ bconsole

*status dir

rhel7-64-dir Version: 12.1.0 (03 July 2019) x86_64-redhat-linux-gnu-bacula-enterprise redhat Enterprise

Daemon started 02-Jul-19 11:54, conf reloaded 02-Jul-2019 11:54:11

Jobs: run=0, running=0 mode=0,2010

Crypto: fips=yes crypto=OpenSSL 1.0.2k-fips 26 Jan 2017

The OpenSSL cryptographic module information is also displayed.

Amazon “Glacier” Support

The Bacula Enterprise S3 Cloud Storage can now automatically restore volumes stored on Amazon Glacier, allowing for more flexible tiered backup storage in the cloud.

Please see the Cloud S3 whitepaper for more information.

DB2 Plugin

The DB2 Plugin is designed to simplify the backup and restore operations of a DB2 database system. The plugin simplifies backup operations so that the backup administrator does not need to know about internals of DB2 backup techniques or write complex scripts. The DB2 Plugin supports Point In Time Recovery (PITR) techniques, and Incremental and Incremental Delta backup levels.

Please see the DB2 Plugin whitepaper for more information.

vSphere vApp Properties Support

The virtual machine description (OVF) is now initialized with all vApp properties.

<Property ovf:qualifiers="MinLen(1) MaxLen(64)" ovf:userConfigurable="true"

ovf:value="thisisatest.net" ovf:type="string" ovf:key="vsm_hostname">

</Property>

Please see the vSphere Plugin whitepaper for more information.

NDMP Global Endpoint Deduplication Enhancement

The NDMP plugin has been enhanced to greatly increase the deduplication ratio of NetApp NDMP dump images and TAR images.

When the NDMP system is identified as a NetApp host or the format is TAR and the target storage device supports the Bacula Global Endpoint Deduplication option, the NDMP data stream will be analyzed automatically. The following message will be displayed in the Job log.

JobId 1: NetApp Dump analyzer for Global Endpoint Deduplication enabled

or

JobId 1: TAR analyzer for Global Endpoint Deduplication enabled

Please see the NDMP Plugin whitepaper for more information.

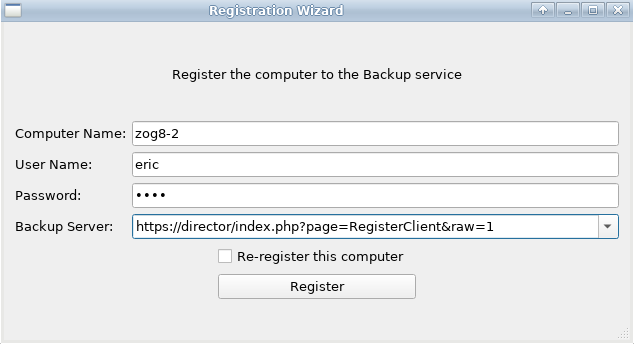

BWeb Management Suite

Client Registration Module

The BWeb management suite simplifies the configuration and the deployment of new clients with QR codes (for Android systems), and the “Registration Wizard”.

Restricted Console Wizard

The updated BWeb Console Wizard simplifies the configuration of restricted Consoles.

Android Phone Support Enhancements

The support for Android Phones has been improved. It is now possible to:

configure the Bacula FileDaemon with a QR code generated from BWeb Management Suite;

start backup jobs from the main interface;

restore files from the main interface;

Volume Retention Enhancements

The Pool/Volume parameter Volume Retention can now be disabled to

never prune a volume based on the Volume Retention time. When

Volume Retention is disabled, only the Job Retention time will

be used to prune jobs.

Pool {

Volume Retention = 0

...

}

New BCloud Features

Add support for the Connect To Director feature (for clients behind NAT).

Global Endpoint Deduplication Changes

The Global Endpoint Deduplication feature was re-organized to support multiple Deduplication engines in a single Storage Daemon instance. The Deduplication engine can now be configured via a new Dedup configuration resource.

Please see the Global Endpoint Deduplication whitepaper for more information.

Bacula Enterprise 12.0.2

The Bacula Docker Plugin can now handle external Docker volumes.

The Docker Plugin whitepaper provides more detailed information.

Bacula Enterprise 12.0

Docker Plugin

Containers are a very lightweight form of system-level virtualization with low overhead.

Docker containers rely on sophisticated file system level data abstraction with a number of read-only images to create templates used for container initialization.

The Bacula Enterprise Docker Plugin will save the full container image including all read-only and writable layers into a single image archive.

It is not necessary to install a Bacula File daemon in each container, so each container can be backed up from a common image repository.

The Bacula Docker Plugin will contact the Docker service to read and save the contents of any system image or container image using snapshots (default behavior) and dump them using the Docker API.

The Docker Plugin whitepaper provides more detailed information.

Docker Client Package

The File Daemon package can now be installed via a Docker image.

Sybase ASE Plugin

The Sybase ASE Plugin is designed to simplify the backup and restore operations of a Sybase Adaptive Server Enterprise. The backup administrator does not need to know about internals of Sybase ASE backup techniques or write complex scripts. The Sybase ASE Plugin supports Point In Time Recovery (PITR) with Sybase Backup Server Archive API backup and restore techniques.

The Plugin is able to do incremental and differential backups of the database at block level. This plugin is available on 32-bit and 64-bit Linux platforms supported by Sybase, and supports Sybase ASE 12.5, 15.5, 15.7 and 16.0.

Please see the Sybase ASE Plugin whitepaper for more information.

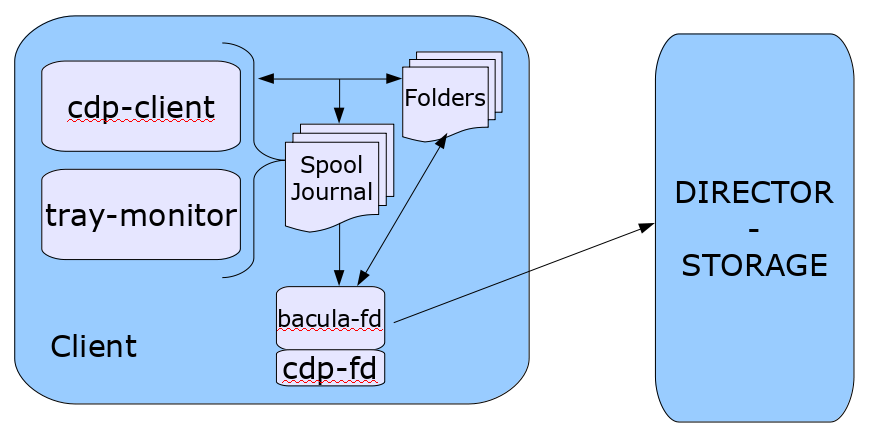

Continuous Data Protection Plugin

Continuous Data Protection (CDP), also called continuous backup or real-time backup, refers to backup of Client data by automatically saving a copy of every change made to that data, essentially capturing every version of the data that the user saves. It allows the user or administrator to restore data to any point in time.

The CDP feature is composed of two components: an application (cdp-client or tray-monitor) that monitors a set of directories configured by the user, and a Bacula FileDaemon plugin responsible for securing the data using the Bacula infrastructure.

The user application (cdp-client or tray-monitor) is responsible for monitoring files and directories. When a modification is detected, the new data is copied into a spool directory. At a regular interval, a Bacula backup job will contact the FileDaemon and will save all the files archived by the cdp-client. The locally copied data can be restored at any time without a network connection to the Director.

See the CDP (Continuous Data Protection) chapter for more information.

Automatic TLS Encryption

Starting with 12.0, all daemons and consoles use TLS automatically for all network communications. It is no longer required to set up TLS keys in advance. It is possible to turn off automatic TLS PSK encryption using the TLS PSK Enable directive.

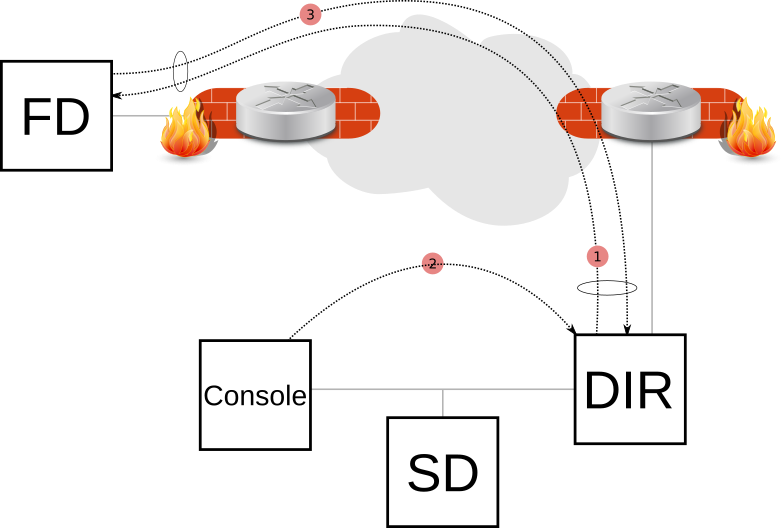

Client Behind NAT Support with the Connect To Director Directive

A Client can now initiate a connection to the Director (permanently or scheduled) to allow the Director to communicate with the Client when a new Job is started or a bconsole command such as status client or estimate is issued.

This new network configuration option is particularly useful for Clients that are not directly reachable by the Director.

# cat /opt/bacula/etc/bacula-fd.conf

Director {

Name = bac-dir

Password = aigh3wu7oothieb4geeph3noo # Password used to connect

# New directives

Address = bac-dir.mycompany.com # Director address to connect

Connect To Director = yes # FD will call the Director

}

# cat /opt/bacula/etc/bacula-dir.conf

Client {

Name = bac-fd

Password = aigh3wu7oothieb4geeph3noo

# New directive

Allow FD Connections = yes

}

It is possible to schedule the Client connection at certain periods of the day:

# cat /opt/bacula/etc/bacula-fd.conf

Director {

Name = bac-dir

Password = aigh3wu7oothieb4geeph3noo # Password used to connect

# New directives

Address = bac-dir.mycompany.com # Director address to connect

Connect To Director = yes # FD will call the Director

Schedule = WorkingHours

}

Schedule {

Name = WorkingHours

# Connect to the Director between 12:00 and 14:00

Connect = MaxConnectTime=2h on mon-fri at 12:00

}

Note that in the current version, if the File Daemon is started after 12:00, the next connection to the Director will occur at 12:00 the next day.

A Job can be scheduled in the Director around 12:00, and if the Client is connected, the Job will be executed as if the Client was reachable from the Director.
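
The timing rule described above can be modelled with a short Python sketch. This is only an illustration of the documented behavior (a window opening at 12:00 on mon-fri and lasting 2 hours), not the File Daemon's actual scheduler. The function name next_connect_window and the constants are hypothetical, and naive local datetimes are assumed.

```python
from datetime import datetime, time, timedelta

# Values taken from the WorkingHours example: "MaxConnectTime=2h on mon-fri at 12:00".
CONNECT_AT = time(12, 0)
CONNECT_DAYS = {0, 1, 2, 3, 4}          # Monday is 0 in datetime.weekday()
MAX_CONNECT_TIME = timedelta(hours=2)


def next_connect_window(started):
    """Return (start, end) of the first window that opens at or after `started`.

    Hypothetical helper: it only models the rule that a File Daemon started
    after 12:00 waits for the next scheduled 12:00.
    """
    candidate = datetime.combine(started.date(), CONNECT_AT)
    # Started after today's 12:00: today's window is missed.
    if started > candidate:
        candidate += timedelta(days=1)
    # Skip days that are not part of mon-fri.
    while candidate.weekday() not in CONNECT_DAYS:
        candidate += timedelta(days=1)
    return candidate, candidate + MAX_CONNECT_TIME


if __name__ == "__main__":
    start, end = next_connect_window(datetime.now())
    print(f"next connection window: {start} to {end}")
```

Under these assumptions, a File Daemon started on a Friday afternoon would first connect on the following Monday at 12:00, so a Job that depends on the Client connection should be scheduled inside that window.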

Android Phone Support

The FileDaemon and the Tray Monitor are now available on the Android platform.

Proxmox Clustering Features

With BWeb Management Console 12.0, it is now possible to analyze a Proxmox cluster configuration and dynamically adjust the Bacula configuration in the following cases:

Virtual machine added to the cluster

Virtual machine removed from the cluster

Virtual machine migrated between cluster nodes

The Proxmox whitepaper provides more information.

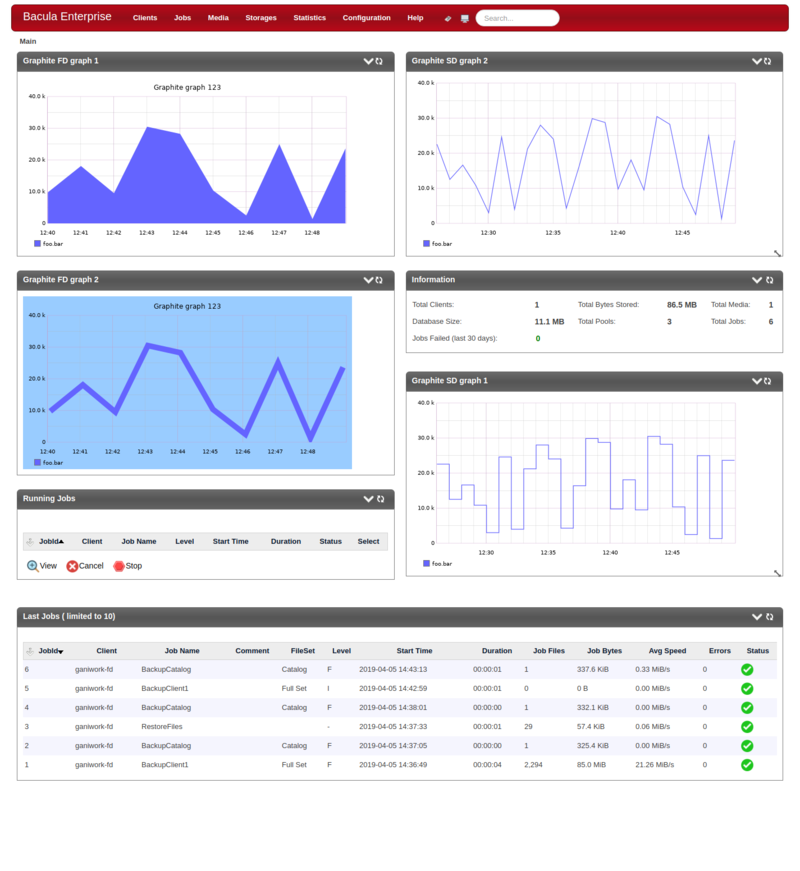

BWeb Management Console Dashboards

With BWeb Management Console 12.0, it is now possible to customize the size and the position of all boxes displayed in the interface. The Page Composer page can be used to graphically design pages and create dashboards with a library of predefined widgets or with Graphite-provided graphics.

Miscellaneous

Global Autoprune Control Directive

The Director Autoprune directive can now globally control the Autoprune feature. This directive will take precedence over Pool or Client Autoprune directives.

Director {

Name = mydir-dir

...

AutoPrune = no

}

vSphere Plugin ESXi 6.7 Support

The vSphere Plugin now uses VDDK 6.7.1 and should handle empty or unallocated blocks more efficiently during backup.

New Documentation

The documentation was improved to automatically handle external references in PDF as well as in HTML.

Linux BMR UEFI Support

The Linux BMR version 2.2.1 now supports the UEFI boot system. Note that it is necessary to back up the related file system, usually mounted at /boot/efi and formatted with an MS-DOS or vfat file system.

MSSQL Plugin Enhancements

The Bacula Enterprise Microsoft SQL Server Plugin (MSSQL) has been improved to handle database recovery models more precisely. The target_backup_recovery_models parameter enables database backups depending on their recovery model. The simple_recovery_models_incremental_action parameter controls the plugin behavior when an incompatible incremental backup is requested on a simple recovery model database: it is possible to upgrade to a full backup (default), to ignore the database and emit a job warning (ignore_with_error), or to ignore the database and emit a “skipped” message (ignore). Please refer to the specific plugin documentation for more information.

MySQL Percona Enhancements

The new MySQL Percona Plugin was optimized and does not require large temporary files anymore.

Dynamic Client Address Directive

It is now possible to use a script to determine the address of a Client when a dynamic DNS option is not a viable solution:

Client {

Name = my-fd

...

Address = "|/opt/bacula/bin/compute-ip my-fd"

}

The command used to generate the address should return one single line with a valid address and end with the exit code 0. An example would be:

Address = "|echo 127.0.0.1"

This option might be useful in some complex cluster environments.
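
As an illustration of the contract described above (one output line, exit code 0), a script such as the compute-ip command in the example could look like the following Python sketch. The contents of the script are not documented here, so everything in it is an assumption: the KNOWN_CLIENTS table stands in for whatever source a site would really query, and the sketch only accepts IP literals even though the directive may accept other address forms.

```python
#!/usr/bin/env python3
"""Hypothetical compute-ip helper: print one address line for a Client name."""
import ipaddress
import sys

# Assumption: a static table replaces the real lookup (cluster manager,
# inventory database, ...). 192.0.2.10 is a documentation-only address.
KNOWN_CLIENTS = {
    "my-fd": "192.0.2.10",
}


def compute_ip(client_name, table=KNOWN_CLIENTS):
    """Return the address registered for client_name.

    Raises KeyError for an unknown client and ValueError for an entry
    that is not a valid IP address.
    """
    address = table[client_name]
    ipaddress.ip_address(address)   # validation only; the result is discarded
    return address


def main(args):
    """Print exactly one line on success and return the process exit code."""
    if len(args) != 1:
        print("usage: compute-ip <client-name>", file=sys.stderr)
        return 2
    try:
        print(compute_ip(args[0]))
    except (KeyError, ValueError) as exc:
        # Errors go to stderr so that stdout never carries a partial address.
        print(f"compute-ip: cannot resolve {args[0]!r}: {exc}", file=sys.stderr)
        return 1
    return 0


# Only act when at least one argument is given, so that importing the file
# or running it without arguments does nothing.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1:]))
```

Keeping error messages on stderr and returning a non-zero exit code on failure means the command either produces a single valid address line with exit code 0 or produces no address at all.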

Bacula Enterprise 10.2.1

New Prune Command Option

The prune jobs all command will query the catalog to find all combinations of Client/Pool, and will run the pruning algorithm on each of them. At the end, all files and jobs not needed for restore that have passed the relevant retention times will be pruned.

The prune command prune jobs all yes can be scheduled in a RunScript to prune the catalog once per day for example. All Clients and Pools will be analyzed automatically.

Job {

...

RunScript {

Console = "prune jobs all yes"

RunsWhen = Before

failjobonerror = no

runsonclient = no

}

}

Bacula Enterprise 10.2

Bacula Daemon Real-Time Statistics Monitoring

All daemons can now collect internal performance statistics periodically and provide mechanisms to store the values to a CSV file or to send the values to a Graphite daemon via the network. Graphite is an enterprise-ready monitoring tool (https://graphiteapp.org).

For more information see the section about Daemon Real-Time Statistics Monitoring in the core documentation for the Bacula Director.
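
To give an idea of what a Graphite daemon receives, the following Python sketch builds and sends lines in Graphite's plaintext protocol, where each line has the form "path value timestamp". It is not Bacula's implementation: the metric name used in the usage comment is hypothetical, and the host and port (2003 is the usual plaintext listener port) are assumptions that depend on the local Graphite setup.

```python
import socket
import time


def format_metric(path, value, timestamp=None):
    """Build one plaintext-protocol line: metric path, value, Unix timestamp."""
    if timestamp is None:
        timestamp = time.time()
    return f"{path} {value} {int(timestamp)}\n"


def send_metrics(lines, host="127.0.0.1", port=2003, timeout=5.0):
    """Send already formatted lines to a Graphite plaintext listener.

    host and port are assumptions; adjust them to the local installation.
    """
    payload = "".join(lines).encode("ascii")
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(payload)


# Usage (hypothetical metric name, requires a reachable Graphite listener):
#   send_metrics([format_metric("bacula.mydir-dir.example.counter", 3)])
```

The same format can be used to check by hand that a Graphite instance is reachable before pointing a daemon at it.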

Red Hat Virtualization System Plugin

RHV is an open, software-defined platform that virtualizes Linux and Microsoft Windows workloads. Built on RHEL and KVM, it features management tools that virtualize resources, processes, and applications, providing a stable foundation for a cloud-native and containerized future.

The Red Hat Virtualization (RHV) Plugin provides virtual machine bare metal recovery for the Red Hat Virtualization system. This plugin provides image level full backup and recovery with a full set of options to select different backup sets and to personalize the restore operations.

The Red Hat Virtualization (RHV) whitepaper provides more information.

New Cloud Storage Drivers

The different cloud drivers are now distributed in separate packages. Accordingly, an upgrade from a previous version may need some manual interaction.

bacula-enterprise-cloud-storage-azure

bacula-enterprise-cloud-storage-google

bacula-enterprise-cloud-storage-oracle

bacula-enterprise-cloud-storage-s3

bacula-enterprise-cloud-storage-common

Google Cloud Driver

Support for the Google Cloud Storage has been added in version 10.2. The behavior is identical to the Amazon S3 Cloud Storage.

See the Google Cloud Storage whitepaper for more information.

Oracle Cloud Driver

Support for the Oracle S3 Cloud Storage has been added in version 10.2. The behavior is identical to the Amazon S3 Cloud Storage.

See the Cloud Storage whitepaper for more information.

MySQL Percona Plugin Enhancements

Making the databases consistent for restore is called Prepare in the