High Availability: Clustering

In a cluster, multiple servers work together as a single system to improve reliability, performance, or both. A failover cluster service coordinates tasks so that if one server fails, another takes over its workload. This is a fundamental technique for providing high availability for Bacula services.

Using spare hardware, Bacula can be integrated with standard open-source Linux clustering solutions such as Heartbeat or Pacemaker.

In the event of a failure, a resource manager such as Pacemaker automatically initiates recovery and makes sure your application is available from one of the remaining machines in the cluster. Pacemaker is the successor to Heartbeat and can handle very complex cluster setups. Bacula does not need this level of complexity, so a simple Primary/Slave configuration is recommended for managing the Bacula Director and the user interface layer (BWeb).

Pacemaker

Pacemaker is a cluster resource manager that builds on the Open Cluster Framework (OCF), a set of standards for cluster components, such as the resource agent standard, that is widely used in Linux environments.

A resource agent is an abstraction that provides service-level awareness to the cluster. It contains the logic required to start, stop, or check the health of a particular service.

Pacemaker coordinates the configuration, startup, monitoring, and recovery of interrelated services across cluster hosts. It supports a number of service types, including OCF resource agents and systemd unit files. Pacemaker supports advanced service configurations such as groups of dependent resources, services that should be active on multiple machines at the same time, resources that can switch between two different roles, and containerized services.

Pacemaker includes features like:

Detection and recovery of host-level and application-level failures

Support for practically any redundancy configuration

Configurable strategies for dealing with quorum loss

Support for ordering initiations and terminations of different applications, without requiring the applications to run on the same host

Support for applications that must or must not run on the same host

Support for applications that should be active on multiple hosts

Support for applications with dual roles (promoted and unpromoted)

Provably correct response to any failure or cluster state (cluster response to any condition can be tested offline before the condition exists)

Pacemaker typically works together with Corosync, which is the membership and messaging layer. It tracks all hosts in a cluster, including liveness and quorum, and ensures reliable communication and message delivery between nodes.
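As an illustration of what quorum means here, the following minimal sketch applies the usual majority rule: a partition keeps quorum only while it holds more than half of the expected votes. The function name `has_quorum` and its parameters are hypothetical and are not part of Corosync or Pacemaker; real clusters may apply additional options that change this calculation.

```python
def has_quorum(online_votes: int, expected_votes: int) -> bool:
    """Return True if the online members hold a strict majority of votes.

    Illustrative helper only (an assumption for this sketch), not a
    Corosync or Pacemaker API.
    """
    if expected_votes <= 0:
        raise ValueError("expected_votes must be positive")
    if online_votes < 0:
        raise ValueError("online_votes cannot be negative")
    # A strict majority is floor(expected / 2) + 1 votes.
    return online_votes >= expected_votes // 2 + 1


# Three-node cluster: losing one node keeps quorum, losing two does not.
three_node_one_down = has_quorum(2, 3)
three_node_two_down = has_quorum(1, 3)

# Two-node cluster: losing either node loses quorum under plain majority.
two_node_one_down = has_quorum(1, 2)
```

Under this plain majority rule, a two-node cluster loses quorum as soon as either node fails, which is why Primary/Slave pairs usually need a dedicated quorum policy.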

Another commonly used tool with Pacemaker is the Pacemaker/Corosync Configuration System (PCS).

PCS provides both a command-line tool (pcs) and the PCS Web UI for managing the complete life cycle of multiple cluster components, including Pacemaker, Corosync, and some others.

More information about these projects can be found on their project pages, hosted at clusterlabs.org: https://clusterlabs.org/projects/.

Resource Agents

The role of a resource agent is to abstract the service it provides and present a consistent view to the cluster, which allows the cluster to be agnostic about the resources it manages. The cluster does not need to understand how the resource works because it relies on the resource agent to simply issue start, stop, or monitor commands.

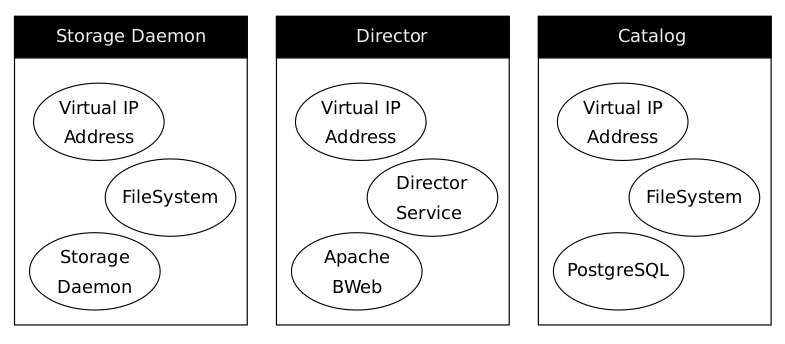
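The start, stop, and monitor contract can be sketched as follows. This is a simplified illustration, not a working agent for any Bacula service: `FakeService` is a hypothetical in-memory stand-in for a real daemon, and `dispatch` is an assumed helper name. The numeric return values follow the OCF resource agent exit code convention (0 for success, 7 for "not running", 3 for an unimplemented action).

```python
# Exit codes from the OCF resource agent convention.
OCF_SUCCESS = 0
OCF_ERR_GENERIC = 1
OCF_ERR_UNIMPLEMENTED = 3
OCF_NOT_RUNNING = 7


class FakeService:
    """Hypothetical stand-in for a managed daemon (assumption for this sketch)."""

    def __init__(self):
        self.running = False


def monitor(service):
    # Report the service state without changing it.
    return OCF_SUCCESS if service.running else OCF_NOT_RUNNING


def start(service):
    # Start is idempotent: starting a running service is still a success.
    if monitor(service) == OCF_SUCCESS:
        return OCF_SUCCESS
    service.running = True
    return OCF_SUCCESS if monitor(service) == OCF_SUCCESS else OCF_ERR_GENERIC


def stop(service):
    # Stop is idempotent: stopping a stopped service is still a success.
    service.running = False
    return OCF_SUCCESS if monitor(service) == OCF_NOT_RUNNING else OCF_ERR_GENERIC


ACTIONS = {"start": start, "stop": stop, "monitor": monitor}


def dispatch(action, service):
    """Map the action name requested by the cluster to a handler."""
    handler = ACTIONS.get(action)
    if handler is None:
        return OCF_ERR_UNIMPLEMENTED
    return handler(service)
```

The cluster only sees the action name and the returned code, which is what lets it manage very different services through the same interface.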

Resource agents are typically implemented as shell scripts, but they can be written in any language (such as C, Python, or Perl). With Bacula, the following default resources can be used in the resource manager tool:

Pacemaker/Heartbeat service definition

Virtual IP Addresses

Bacula Director service

Bacula Storage services

Backup storage filesystems

PostgreSQL Catalog service

PostgreSQL data and configuration filesystem

As discussed in the architecture sections, the resources to include in the cluster depend on the clustering technique that is chosen. All components can be included in the Pacemaker stack, but it is also possible to build a Pacemaker cluster for the Director only, while using a different stack for PostgreSQL (such as Patroni/ETCD/HAProxy) and separate mechanisms, such as Bacula storage groups, for the storage services.

The range of possible designs is broad, and the final choice depends on the requirements and on how the cluster will be managed. These factors are influenced by the underlying physical structure, the virtualization stack (if any), and the system administrators' preferred software.

When using cluster techniques, a very common way to move or restart a service elsewhere on your internal network without reconfiguring all your clients is to use virtual IP addresses for all your components. Each Bacula component that requires high availability should be assigned its own virtual IP address. The resource manager (Pacemaker or Heartbeat) ensures that only one primary node owns a given virtual IP at any time.
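The single-owner rule for a virtual IP can be illustrated with a toy model. This sketch only simulates ownership in memory and does not configure any network interface. The class `VirtualIP`, its methods, the node names, and the address `192.0.2.10` (taken from the documentation address range) are all assumptions made for the example.

```python
class VirtualIP:
    """Toy model of a floating address with at most one owner at a time."""

    def __init__(self, address, nodes):
        if not nodes:
            raise ValueError("at least one candidate node is required")
        self.address = address
        self.nodes = list(nodes)      # candidate hosts, in preference order
        self.healthy = set(nodes)     # hosts currently considered alive
        self.owner = self.nodes[0]    # exactly one owner, or None

    def mark_failed(self, node):
        # A failed owner triggers failover; a failed standby changes nothing.
        self.healthy.discard(node)
        if node == self.owner:
            self._fail_over()

    def mark_recovered(self, node):
        # A recovered node takes the address only if nobody holds it,
        # so a healthy owner is never interrupted (no automatic failback).
        if node in self.nodes:
            self.healthy.add(node)
        if self.owner is None:
            self._fail_over()

    def _fail_over(self):
        # Pick the first healthy candidate, or None if no host is left.
        self.owner = next((n for n in self.nodes if n in self.healthy), None)


vip = VirtualIP("192.0.2.10", ["node-a", "node-b"])
vip.mark_failed("node-a")      # address moves to node-b
owner_after_failure = vip.owner
vip.mark_recovered("node-a")   # node-b keeps the address
owner_after_recovery = vip.owner
```

Clients keep using the same address throughout; only the host answering for it changes.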

Because Bacula is not designed to automatically reconnect when a TCP connection drops, running jobs will fail if a resource is moved from one location to another. Make sure that Bacula is stopped before moving services between hosts.

An example of how to configure these elements is described here.
